How can TensorFlow be used with Estimators to optimize the model?

The model built on the Titanic dataset can be improved by adding further feature columns. Once the columns are added and the model is retrained and evaluated, it gives better performance than the model trained on the base features alone.

We will use the Keras Sequential API, which is helpful in building a sequential model that is used to work with a plain stack of layers, where every layer has exactly one input tensor and one output tensor.

A neural network that contains at least one convolutional layer is known as a convolutional neural network. We can use a convolutional neural network to build a learning model.

We are using Google Colaboratory to run the code below. Google Colab, or Colaboratory, runs Python code in the browser, requires zero configuration, and offers free access to GPUs (Graphics Processing Units). Colaboratory is built on top of Jupyter Notebook.

An Estimator is TensorFlow's high-level representation of a complete model. It is designed for easy scaling and asynchronous training. Estimators use feature columns to describe how the model would interpret the raw input features. An Estimator expects a vector of numeric inputs, and feature columns will help describe how the model should convert every feature in the dataset.
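To make the role of feature columns more concrete, here is a minimal pure-Python sketch of the kind of conversion they describe: a categorical feature becomes a one-hot vector and a numeric feature passes through as a float. It does not use the TensorFlow API; the function names, the vocabularies, and the sample passenger are hypothetical illustrations.

```python
# Conceptual sketch only: mimics what feature columns describe, without TensorFlow.
# The vocabularies and the sample record below are hypothetical.

def one_hot(value, vocabulary):
    """Return a list with 1.0 at the position of `value` in `vocabulary`, 0.0 elsewhere."""
    return [1.0 if value == item else 0.0 for item in vocabulary]

def to_numeric_vector(record, categorical_vocabs, numeric_keys):
    """Convert a raw feature dict into a flat list of floats."""
    vector = []
    # Categorical features are expanded into one-hot segments.
    for key, vocab in categorical_vocabs.items():
        vector.extend(one_hot(record[key], vocab))
    # Numeric features are appended unchanged, as floats.
    for key in numeric_keys:
        vector.append(float(record[key]))
    return vector

categorical_vocabs = {
    "sex": ["male", "female"],
    "class": ["First", "Second", "Third"],
}
numeric_keys = ["age", "fare"]

passenger = {"sex": "female", "class": "Third", "age": 26, "fare": 7.925}
vector = to_numeric_vector(passenger, categorical_vocabs, numeric_keys)
print(vector)  # [0.0, 1.0, 0.0, 0.0, 1.0, 26.0, 7.925]
```

The resulting list is the sort of numeric input vector an Estimator expects; in TensorFlow the feature columns carry this conversion recipe so the model can apply it to every example.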

Example

print("The model is optmised to make predictions on a dataset")

pred_dicts = list(linear_est.predict(eval_input_fn))

probs = pd.Series([pred['probabilities'][1] for pred in pred_dicts])

print("The data is plotted")

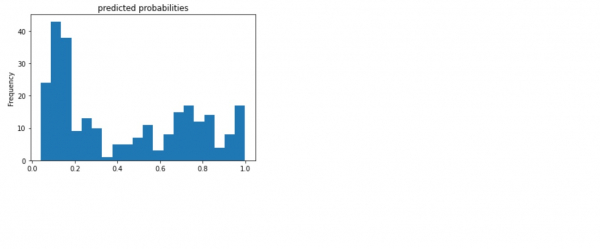

probs.plot(kind='hist', bins=20, title='predicted probabilities')

Code credit: https://www.tensorflow.org/tutorials/estimator/linear
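The example above reads `pred['probabilities'][1]` from each prediction and plots a 20-bin histogram. The following is a rough sketch of those two steps without TensorFlow or pandas. The prediction values are made up for illustration, and `histogram_counts` is a hypothetical helper that assumes a fixed range of 0 to 1, whereas the pandas plot spans the observed range of the data.

```python
# Hypothetical prediction dicts shaped like the ones read in the example above:
# each holds a 'probabilities' entry of [P(class 0), P(class 1)].
pred_dicts = [
    {"probabilities": [0.88, 0.12]},
    {"probabilities": [0.27, 0.73]},
    {"probabilities": [0.54, 0.46]},
    {"probabilities": [0.02, 0.98]},
]

# Same selection as in the example: keep the probability of class 1.
probs = [pred["probabilities"][1] for pred in pred_dicts]

def histogram_counts(values, bins=20, low=0.0, high=1.0):
    """Count how many values fall into each of `bins` equal-width buckets."""
    counts = [0] * bins
    width = (high - low) / bins
    for v in values:
        # Clamp so that a value equal to `high` lands in the last bucket.
        index = min(int((v - low) / width), bins - 1)
        counts[index] += 1
    return counts

counts = histogram_counts(probs)
print(probs)
print(counts)
```

Each entry of `counts` corresponds to the height of one bar in a histogram like the one plotted by the example.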

Output

The model is optmised to make predictions on a dataset

INFO:tensorflow:Calling model_fn.

WARNING:tensorflow:Layer linear/linear_model is casting an input tensor from dtype float64 to the layer's dtype of float32, which is new behavior in TensorFlow 2. The layer has dtype float32 because its dtype defaults to floatx.

If you intended to run this layer in float32, you can safely ignore this warning. If in doubt, this warning is likely only an issue if you are porting a TensorFlow 1.X model to TensorFlow 2.

To change all layers to have dtype float64 by default, call `tf.keras.backend.set_floatx('float64')`. To change just this layer, pass dtype='float64' to the layer constructor. If you are the author of this layer, you can disable autocasting by passing autocast=False to the base Layer constructor.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from /tmp/tmpg17o3o7e/model.ckpt-200

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

<matplotlib.axes._subplots.AxesSubplot at 0x7f8e2c1dd358>

The data is plotted

Explanation

An accuracy of 77.6% is reached, which is better than that of the model trained on only the base features.
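As a reminder of what an accuracy figure measures, here is a small sketch that thresholds predicted probabilities at 0.5 and compares the results with the true labels. The probabilities, labels, and the `accuracy` helper are hypothetical; this is not the evaluation code used to obtain the figure above.

```python
def accuracy(probabilities, labels, threshold=0.5):
    """Fraction of examples whose thresholded prediction matches the label."""
    predictions = [1 if p >= threshold else 0 for p in probabilities]
    correct = sum(1 for pred, label in zip(predictions, labels) if pred == label)
    return correct / len(labels)

# Made-up class-1 probabilities and true labels for five passengers.
sample_probs = [0.12, 0.73, 0.46, 0.98, 0.61]
sample_labels = [0, 1, 1, 1, 0]

print(accuracy(sample_probs, sample_labels))  # 0.6
```

Three of the five thresholded predictions match their labels here, so the sketch reports 0.6.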

More features and transformations can be applied to see if the model fares better.

The trained model is used to make predictions on passengers from the evaluation set.

TensorFlow models are optimized to make predictions on a batch, or collection, of examples at once.
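The idea of predicting on a batch rather than one example at a time can be sketched in plain Python. The logistic linear model, its weights, and the example vectors below are all hypothetical stand-ins; TensorFlow's own batching is handled by the input function and is not reproduced here.

```python
import math

def batches(examples, batch_size):
    """Yield consecutive slices of `examples`, each with at most `batch_size` items."""
    for start in range(0, len(examples), batch_size):
        yield examples[start:start + batch_size]

def predict_batch(batch, weights, bias):
    """Return P(class 1) for every example in the batch using a logistic linear model."""
    results = []
    for example in batch:
        score = sum(w * x for w, x in zip(weights, example)) + bias
        results.append(1.0 / (1.0 + math.exp(-score)))
    return results

# Hypothetical weights and already-numeric feature vectors.
weights = [0.8, -0.5]
bias = 0.1
examples = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5], [0.0, 0.0]]

all_probs = []
for batch in batches(examples, batch_size=2):
    all_probs.extend(predict_batch(batch, weights, bias))

print(all_probs)
```

A single passenger can still be scored by wrapping the one example in a batch of size one, for instance `predict_batch([examples[0]], weights, bias)`.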