How Does Gemini Code Assist Work?

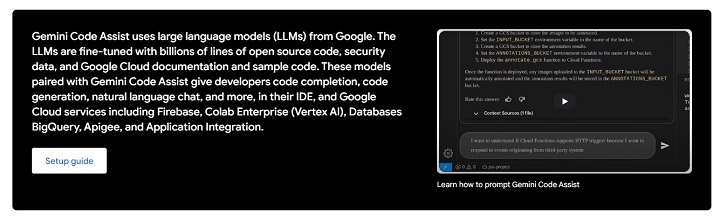

Google's Gemini Code Assist is transforming how developers create, manage, and maintain code with AI and workflow automation. One of its distinctive strengths is a context window of up to 1 million tokens, far larger than those of competing models such as GPT-4 Turbo (128K tokens) and Claude 3 (200K tokens). Whether you need help creating APIs, automating workflows, or making sense of complex datasets, Gemini Code Assist improves efficiency through natural-language prompts and intelligent recommendations.

In this chapter, we will look at how Gemini Code Assist works and at the core architectural components that power its features.

How Do the Features Work Together?

Gemini Code Assist operates as a lightweight plugin that integrates with leading IDEs such as Visual Studio Code and JetBrains IDEs (for example, IntelliJ IDEA). It relies on machine learning models and context analysis to understand your project structure. Let's look at the core architectural components that power Gemini.

Natural Language Processing (NLP) and Code Understanding

- Gemini uses large language models (LLMs) similar to those found in tools like GPT but fine-tuned for software development tasks.

- The model recognises code structure, syntax, and semantics across multiple languages.

- It applies contextual NLP to understand both code comments and natural language instructions, making it possible to suggest meaningful code snippets based on developer input.

- Example − If a developer types "connect to PostgreSQL", Gemini provides a ready-to-use database connection snippet for Python or Node.js.
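
The exact snippet Gemini returns will vary. As an illustration of the kind of helper such a prompt might produce, here is a minimal Python sketch that builds a PostgreSQL connection URI from the standard libpq environment variables (`PGHOST`, `PGPORT`, `PGDATABASE`, `PGUSER`, `PGPASSWORD`). The function names are our own, and it uses only the standard library; the resulting string can be passed to a driver such as psycopg (`psycopg.connect(dsn)`).

```python
import os
from urllib.parse import quote


def build_postgres_dsn(host: str, port: int, dbname: str, user: str, password: str) -> str:
    """Build a libpq-style connection URI, percent-encoding user, password and database."""
    # quote(..., safe="") also encodes characters such as "@", ":" and "/",
    # which would otherwise break the structure of the URI.
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(dbname, safe='')}"
    )


def dsn_from_env() -> str:
    """Read the standard libpq environment variables, falling back to local defaults."""
    return build_postgres_dsn(
        host=os.environ.get("PGHOST", "localhost"),
        port=int(os.environ.get("PGPORT", "5432")),
        dbname=os.environ.get("PGDATABASE", "postgres"),
        user=os.environ.get("PGUSER", "postgres"),
        password=os.environ.get("PGPASSWORD", ""),
    )
```

Keeping credentials in environment variables rather than in source code is the usual practice, and percent-encoding prevents a password containing `@` or `/` from corrupting the URI.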

Fine-tuned Models for Specific Languages and Frameworks

- Gemini leverages fine-tuned AI models optimised for specific languages like Java, Python, and JavaScript.

- Specialised plugins handle popular frameworks (e.g., Flask, React, Spring), ensuring highly relevant recommendations.

- The system provides language-agnostic error detection, meaning it can spot logical errors across multiple languages.
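
How Gemini routes requests to language- or framework-specific models is not described here. The following is a purely hypothetical Python sketch of the general idea: guess the language from the file extension, then look for import markers that hint at a framework. The mappings and the `detect_context` function are illustrative assumptions, not Gemini's implementation.

```python
from pathlib import PurePath
from typing import Optional, Tuple

# Hypothetical mapping for illustration only.
LANGUAGE_BY_EXTENSION = {
    ".py": "Python",
    ".java": "Java",
    ".js": "JavaScript",
    ".jsx": "JavaScript",
    ".ts": "TypeScript",
}

# Substrings in the source that hint at a framework (illustrative, not exhaustive).
FRAMEWORK_MARKERS = {
    "Python": {"flask": "Flask"},
    "JavaScript": {"react": "React"},
    "Java": {"org.springframework": "Spring"},
}


def detect_context(filename: str, source: str) -> Tuple[str, Optional[str]]:
    """Guess the language from the extension and a framework from the source text."""
    language = LANGUAGE_BY_EXTENSION.get(PurePath(filename).suffix.lower(), "unknown")
    lowered = source.lower()
    for marker, framework in FRAMEWORK_MARKERS.get(language, {}).items():
        if marker in lowered:
            return language, framework
    return language, None
```

For example, `detect_context("app.py", "from flask import Flask")` yields `("Python", "Flask")`, which a system could use to choose framework-aware suggestions.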

Plugins and Microservices-based Architecture

- The tool follows a modular, microservices-based design, where individual components (e.g., syntax checking, debugging assistant) operate independently.

- This architecture enables on-demand updates to plugins without interrupting active coding sessions.
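
The benefit of independent components can be shown with a small in-process analogue of the modular design. In this hypothetical Python sketch, each component is a function registered under a name; a component can be replaced without touching the others, and a failure in one does not stop the rest. `ComponentRegistry` and `trailing_whitespace_checker` are illustrative names, not part of Gemini Code Assist.

```python
from typing import Callable, Dict, List

# A component takes source code and returns a list of findings.
Component = Callable[[str], List[str]]


class ComponentRegistry:
    """Hypothetical registry in which components are added or replaced independently."""

    def __init__(self) -> None:
        self._components: Dict[str, Component] = {}

    def register(self, name: str, component: Component) -> None:
        # Registering under an existing name swaps that component in place,
        # leaving every other component untouched.
        self._components[name] = component

    def run_all(self, source: str) -> Dict[str, List[str]]:
        results: Dict[str, List[str]] = {}
        for name, component in self._components.items():
            try:
                results[name] = component(source)
            except Exception as exc:  # one failing component does not stop the others
                results[name] = [f"component error: {exc}"]
        return results


def trailing_whitespace_checker(source: str) -> List[str]:
    """Example component: report lines that end in whitespace."""
    return [
        f"line {i}: trailing whitespace"
        for i, line in enumerate(source.splitlines(), start=1)
        if line != line.rstrip()
    ]
```

In a real microservices deployment each component would run as a separate service behind a network API, but the isolation principle is the same.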

Real-time Model Inference and Lightweight Deployment

- Depending on the deployment, Gemini runs model inference on edge devices or through cloud-based inference engines.

- The tool ensures low-latency responses by processing suggestions locally whenever possible, while resource-intensive operations like code refactoring can be offloaded to the cloud.
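
The local-versus-cloud split described above amounts to a routing decision. Here is a minimal, hypothetical Python sketch: small, latency-sensitive tasks stay local and everything else is offloaded. The task names and the size threshold are assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical task names and threshold, chosen only for illustration.
LOCAL_TASKS = {"completion", "syntax_check"}
MAX_LOCAL_CONTEXT_CHARS = 4_000


@dataclass
class Request:
    task: str
    context: str


def choose_backend(request: Request) -> str:
    """Return "local" for small, latency-sensitive tasks and "cloud" otherwise."""
    if request.task in LOCAL_TASKS and len(request.context) <= MAX_LOCAL_CONTEXT_CHARS:
        return "local"
    # Heavier operations, such as multi-file refactoring, go to the remote service.
    return "cloud"
```

A production system would likely weigh other factors, such as device capability and network conditions, but a size-and-task threshold captures the basic trade-off.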

Enterprise Context Integration

- Gemini Code Assist takes enterprise context into account, such as security schemas, API configurations, and policy patterns.

- This contextual intelligence ensures that the recommendations and code suggestions given by Gemini align with the organisation's infrastructure.

- Example − A developer working on a CRM-API integration will receive specific suggestions based on security policies relevant to the company's CRM platform.
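
To make "aligning with policy" concrete, here is a hypothetical Python sketch that checks a suggested API call against a simple organisational policy: HTTPS only, an allow-list of hosts, and required headers. The policy contents, host name, and `policy_violations` function are assumptions for illustration; real policies would come from your own configuration.

```python
from urllib.parse import urlparse

# Hypothetical organisation policy; in practice this comes from your own configuration.
ORG_POLICY = {
    "allowed_schemes": {"https"},
    "allowed_hosts": {"crm.internal.example.com"},
    "required_headers": {"Authorization"},
}


def policy_violations(url: str, headers: dict, policy: dict = ORG_POLICY) -> list:
    """List the ways a suggested API call would break the organisation's policy."""
    problems = []
    parsed = urlparse(url)
    if parsed.scheme not in policy["allowed_schemes"]:
        problems.append(f"scheme '{parsed.scheme}' is not allowed")
    if parsed.hostname not in policy["allowed_hosts"]:
        problems.append(f"host '{parsed.hostname}' is not on the allow-list")
    # Report any required headers that the suggestion does not set.
    for header in sorted(policy["required_headers"] - set(headers)):
        problems.append(f"missing required header '{header}'")
    return problems
```

A suggestion that returns an empty list passes; anything else would be flagged before the developer accepts it.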

API Configurations

- Developers can generate API proxies through Apigee using plain language descriptions.

- If existing API objects are insufficient, Gemini will suggest ways to enhance or extend the API, reducing both development time and effort.

- Example − When adding authentication to an API, Gemini provides ready-to-use snippets and configuration guidance based on the policies available in Apigee.
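
In Apigee, API-key authentication is commonly enforced with the VerifyAPIKey policy, whose `<APIKey ref="..."/>` element names the flow variable holding the key. The Python sketch below generates a minimal version of such a policy with the standard library's `xml.etree.ElementTree`. The helper function and default names are our own; check the generated XML against the Apigee policy reference before attaching it to a proxy flow.

```python
import xml.etree.ElementTree as ET


def verify_api_key_policy(name: str = "Verify-API-Key",
                          key_ref: str = "request.queryparam.apikey") -> str:
    """Build a minimal Apigee VerifyAPIKey policy as an XML string."""
    policy = ET.Element("VerifyAPIKey", {"name": name})
    ET.SubElement(policy, "DisplayName").text = name
    # The ref attribute points at the flow variable that carries the key;
    # the default reads it from the "apikey" query parameter.
    ET.SubElement(policy, "APIKey", {"ref": key_ref})
    return ET.tostring(policy, encoding="unicode")
```

To read the key from a request header instead, pass a different flow variable, for example `key_ref="request.header.x-api-key"`.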

Automated Maintenance

- With its proactive recommendations, Gemini Code Assist helps keep your automation flows efficient.

- It can suggest optimisations or identify errors during the integration process.

- Example − Gemini may recommend the removal of unnecessary data transformations from an automation pipeline, making workflows smoother.
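
An "unnecessary transformation" can be as simple as a step that is immediately repeated or undone. The hypothetical Python sketch below removes two such patterns from a pipeline represented as `(operation, argument)` pairs. The pipeline format, the set of idempotent operations, and the `old->new` rename syntax are all assumptions made for illustration.

```python
from typing import List, Tuple

# A step is (operation, argument); renames use the hypothetical form "old->new".
Step = Tuple[str, str]
IDEMPOTENT = {"trim", "lowercase", "dedupe"}


def simplify_pipeline(steps: List[Step]) -> List[Step]:
    """Drop steps that cannot change the data: back-to-back repeats of idempotent
    steps, and a rename that is immediately reversed by the next step."""
    result: List[Step] = []
    for step in steps:
        if result and step == result[-1] and step[0] in IDEMPOTENT:
            continue  # e.g. trimming the same column twice in a row is redundant
        if result and step[0] == "rename" and result[-1][0] == "rename":
            prev_src, prev_dst = result[-1][1].split("->")
            src, dst = step[1].split("->")
            if src == prev_dst and dst == prev_src:
                result.pop()  # a->b followed by b->a cancels out
                continue
        result.append(step)
    return result
```

For example, `[("trim", "name"), ("trim", "name"), ("rename", "a->b"), ("rename", "b->a"), ("lowercase", "email")]` simplifies to `[("trim", "name"), ("lowercase", "email")]`.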

The Architecture of Gemini Code Assist

The architecture of Gemini Code Assist reflects Google's advancements in multimodal AI models, capable of processing and generating outputs across various data types, including text, code, and images. Below is a breakdown of the core architectural components −

Multimodal Capabilities

Unlike many NLP models limited to text input, Gemini processes structured and unstructured data. This means developers can work with JSON files, code libraries, and even documentation to generate outputs efficiently.

Memory and Token Management

With the ability to process up to 1 million tokens, Gemini enables extended conversations and in-depth code analysis. This makes it suitable for large-scale codebases or automation flows requiring detailed documentation parsing.
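
To get a feel for what 1 million tokens means for a codebase, the Python sketch below estimates token counts with the rough rule of thumb of about four characters per token. This heuristic is an assumption, not Gemini's tokenizer. For exact counts, use the Gemini API's token-counting method. The output reserve is also an illustrative value.

```python
# Rough heuristic, not Gemini's actual tokenizer: about 4 characters per token
# for English text and typical source code.
CHARS_PER_TOKEN = 4
CONTEXT_WINDOW_TOKENS = 1_000_000


def estimate_tokens(text: str) -> int:
    """Approximate token count using ceiling division."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(files: dict, reserve_for_output: int = 8_192) -> bool:
    """Check whether the files plausibly fit in the window, leaving room for the reply."""
    total = sum(estimate_tokens(body) for body in files.values())
    return total + reserve_for_output <= CONTEXT_WINDOW_TOKENS
```

By this estimate, a 1-million-token window corresponds to roughly 4 MB of source text, which is enough for many mid-sized repositories.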

Google DeepMind Collaboration

The architecture draws on Google DeepMind's expertise in reinforcement learning, which is used to refine the model's responses over successive rounds of training. Gemini also employs attention-based transformers for faster and more accurate contextual understanding.
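
The core operation of a transformer is scaled dot-product attention. Each position computes weights over the other positions as $\mathrm{softmax}(q \cdot k / \sqrt{d})$ and uses them to take a weighted sum of the value vectors. The pure-Python sketch below shows this textbook computation for a single query. It illustrates the general technique, not Gemini's own code, which uses many attention heads and layers on accelerators.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    # Similarity of the query to each key, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Weighted sum of the value vectors, component by component.
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
```

When a key closely matches the query, almost all of the weight goes to its value. This is how the model focuses on the relevant parts of a long context.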

Platforms Supporting Gemini Code Assist

Gemini Code Assist is deeply embedded within the Google Cloud ecosystem, making it available across various platforms that power enterprise workflows. Below are some of the primary services where Gemini can be accessed −

Apigee API Management

Apigee serves as a broker for APIs, and Gemini helps in designing, managing, and enhancing API proxies through natural language prompts. Developers can modify or iterate on existing APIs with minimal effort.

Application Integration

With Google's iPaaS solution, developers can create automation flows by specifying their needs in simple language. Gemini handles the technical implementation, generating variables and tasks automatically.
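
A generated flow is essentially a set of declared variables plus tasks that read and write them. The hypothetical Python sketch below models that structure and checks that every variable a task uses has been declared. This is the kind of consistency a generator needs to guarantee. The `Flow` and `Task` classes are illustrative and do not reflect Application Integration's actual schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    name: str
    inputs: List[str]
    outputs: List[str]


@dataclass
class Flow:
    variables: List[str]
    tasks: List[Task] = field(default_factory=list)

    def undeclared_variables(self) -> List[str]:
        """Variables that tasks read or write without declaring them, in first-seen order."""
        declared = set(self.variables)
        missing: List[str] = []
        for task in self.tasks:
            for var in task.inputs + task.outputs:
                if var not in declared and var not in missing:
                    missing.append(var)
        return missing
```

For a request such as "when a new order arrives, look up the customer and send a confirmation email", a flow whose `SendEmail` task reads an undeclared `email_body` variable would be reported as `["email_body"]`.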

Cross-Platform Compatibility

Gemini Code Assist works across mobile devices, desktops, and cloud platforms, ensuring that developers can access it regardless of where they are working. This flexibility is essential for remote development teams that need consistent tools across different environments.

ML Model and Training Architecture

The ML model behind Gemini Code Assist is the result of extensive research by Google DeepMind. Below are some of the technical aspects of the model and its evolution −

Model Variants

Each variant of Gemini is optimised for different workloads.

- Ultra − For enterprise-level automation and large datasets.

- Pro − For mid-tier applications with moderate data processing needs.

- Nano − Lightweight variant for mobile applications and edge devices.

Pre-Training and Fine-Tuning

- Gemini is pre-trained on a diverse set of datasets, including code repositories, API specifications, and documentation.

- Fine-tuning is performed based on specific enterprise needs, ensuring it aligns with the target use cases.
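
Fine-tuning data is usually prepared as prompt/response pairs, often stored as JSON Lines (one JSON object per line). The Python sketch below converts pairs to JSONL and drops empty and duplicate examples. The `input`/`output` field names are hypothetical; use whatever schema your tuning service expects.

```python
import json
from typing import Iterable, Tuple


def to_jsonl(pairs: Iterable[Tuple[str, str]]) -> str:
    """Serialise (prompt, completion) pairs as JSON Lines, skipping empty and duplicate examples."""
    seen = set()
    lines = []
    for prompt, completion in pairs:
        prompt, completion = prompt.strip(), completion.strip()
        if not prompt or not completion or (prompt, completion) in seen:
            continue  # drop empty and duplicate examples
        seen.add((prompt, completion))
        # Hypothetical field names; adapt to the target service's schema.
        lines.append(json.dumps({"input": prompt, "output": completion}))
    return "\n".join(lines)
```

Removing duplicates keeps repeated examples from being over-weighted during tuning.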

Transformer-Based Architecture

- The model uses a multi-layer transformer to capture long-range dependencies, enabling it to generate accurate outputs even for complex codebases.

- Gemini employs a transformer neural network architecture built on Transformer decoders (the same family as Codex), which excels at modelling sequential data.

- The system processes partial inputs (even incomplete lines of code) to predict the most relevant completions by analysing preceding and succeeding code blocks.

- Because the code after the cursor is supplied as context alongside the code before it, suggestions fit what follows in the file as well as what precedes it.
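
One widely used way to give a code model both sides of the cursor is fill-in-the-middle (FIM) prompting. The prefix and suffix are placed in the prompt around sentinel markers, and the model generates the missing middle. Whether Gemini Code Assist uses exactly this format is not stated here, so treat the Python below as a sketch of the general technique. The sentinel strings are hypothetical, since each model defines its own special tokens.

```python
from typing import Tuple

# Hypothetical sentinel strings; real models define their own special tokens.
PREFIX, SUFFIX, MIDDLE = "<PRE>", "<SUF>", "<MID>"


def split_at_cursor(source: str, cursor: int) -> Tuple[str, str]:
    return source[:cursor], source[cursor:]


def build_fim_prompt(source: str, cursor: int, max_chars: int = 2_000) -> str:
    """Build a fill-in-the-middle prompt from the code before and after the cursor,
    keeping the text closest to the cursor when either side is too long."""
    before, after = split_at_cursor(source, cursor)
    before = before[-max_chars:]  # nearest preceding context
    after = after[:max_chars]     # nearest following context
    return f"{PREFIX}{before}{SUFFIX}{after}{MIDDLE}"
```

With the cursor just after `return ` inside a function, the prompt contains both the function signature and the later call site, so a completion such as `a + b` can be consistent with both.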

As Google continues to refine this tool, we can expect even more sophisticated features that will redefine enterprise development in the future.