Building an Amazon Keyword Research Tool with Python

Amazon keyword research is a critical step for sellers, marketers, and product teams who want to understand how customers discover products. Keywords influence product titles, descriptions, backend search terms, and advertising performance.

Manually researching keywords through the Amazon interface is time-consuming and limited. Results are personalized and location-dependent, which makes them difficult to analyze at scale.

In this tutorial, you'll learn how to build an Amazon keyword research tool using Python. We'll design a simple pipeline that retrieves Amazon search results, extracts product titles, and analyzes recurring keywords to identify common search terms.

Prerequisites

To follow this tutorial, you should have -

- Basic knowledge of Python (functions, lists, dictionaries)

- Python 3.7 or later installed

- Familiarity with JSON and APIs (helpful but not required)

- A general understanding of how Amazon search works

You'll also need access to a search engine data API that supports Amazon search results. This tutorial uses structured Amazon search data rather than raw HTML scraping.

What We're Building

By the end of this tutorial, you'll understand how to -

- Retrieve structured data from Amazon search results

- Extract product titles from search listings

- Identify recurring keywords across listings

- Build a reusable keyword research pipeline

- Generate keyword frequency insights from Amazon data

This system can be used as a foundation for -

- Amazon SEO research tools

- Product discovery analysis

- Market research automation

- Keyword validation workflows

Why Manual Amazon Keyword Research Is Limited

Many sellers rely on manual browsing or autocomplete suggestions to find keywords. While this can work for small tasks, it has several limitations.

Common Challenges

- Personalized results - Search results vary by user, location, and browsing history

- Limited visibility - Only a small number of listings are visible at once

- No structured output - Manual methods do not provide data in analyzable formats

- Poor scalability - Researching multiple keywords quickly becomes impractical

Automating keyword research reduces personalization noise and makes keyword data reproducible and comparable.

Choosing a Data Source for Amazon Keyword Search

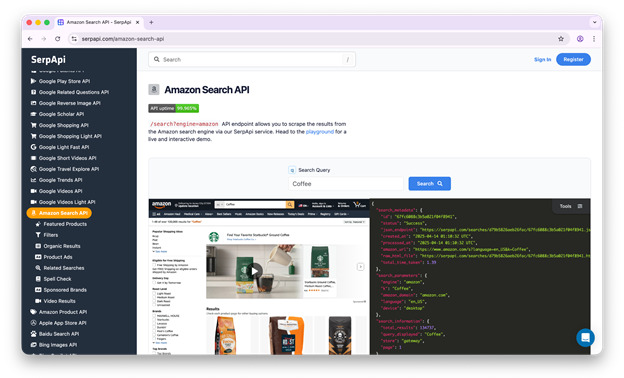

Instead of scraping Amazon product pages directly, this tutorial uses Amazon Search API data from [SerpApi].

The API returns structured JSON responses that include -

- Product titles

- Listing URLs

- Seller information

- Pricing data

Using structured search data avoids common scraping challenges such as CAPTCHA, HTML changes, and request blocking.
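To make the shape of that data concrete, here is a small sketch of how one parsed response might look once it is a Python dictionary. The `organic_results` key and the `title` field are the ones this tutorial relies on later; the other field names (`link`, `price`), the helper `summarize_listing`, and all values are illustrative assumptions rather than a guaranteed schema.

```python
# Hypothetical, hand-written sample. Real responses contain more fields,
# and the exact field names should be checked against the API documentation.
sample_response = {
    "organic_results": [
        {
            "title": "Example Wireless Headphones",
            "link": "https://example.com/a",
            "price": "$29.99",
        },
        {
            "title": "Example Bluetooth Headset",
            "link": "https://example.com/b",
        },
    ]
}


def summarize_listing(item):
    """Return a (title, link, price) tuple, using None for missing fields."""
    return (item.get("title"), item.get("link"), item.get("price"))


summaries = [
    summarize_listing(item)
    for item in sample_response.get("organic_results", [])
]

for title, link, price in summaries:
    print(title, link, price)
```

Reading fields with `.get()` keeps the code tolerant of listings that omit a field, such as the second sample item, which has no price.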

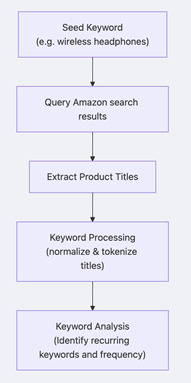

Architecture Overview

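A typical Amazon keyword research system follows this workflow -

- Send a seed keyword to the search API

- Retrieve the structured search results

- Extract product titles from the listings

- Count recurring keywords across the titles

- Report the most frequent keywords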

This architecture is simple, extensible, and well-suited for automation.
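The fetch, extract, and analyze stages can be sketched as plain functions that are chained together. The version below is only an outline of that idea: `run_pipeline` and the three stand-in stages are hypothetical names used for illustration, and the stand-ins return hard-coded data instead of calling an API.

```python
from collections import Counter


def run_pipeline(query, fetch, extract, analyze):
    """Chain the three stages: fetch results, extract titles, analyze keywords."""
    results = fetch(query)
    titles = extract(results)
    return analyze(titles)


# Stand-in stages (assumptions for illustration only; no network access).
def fake_fetch(query):
    return [{"title": f"{query} model a"}, {"title": f"{query} model b"}]


def take_titles(results):
    return [item["title"] for item in results if item.get("title")]


def count_words(titles):
    words = (word for title in titles for word in title.split())
    return Counter(words).most_common(3)


top_words = run_pipeline("headphones", fake_fetch, take_titles, count_words)
print(top_words)
```

Passing the stages in as arguments keeps each one replaceable, so a real API call or a different analysis step can be swapped in without changing the pipeline function.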

Project Structure

The following structure keeps the project easy to understand and extend -

amazon_keyword_research/
    tracker.py
    analysis.py
    .env
    requirements.txt

File overview

- tracker.py - Fetches Amazon search results

- analysis.py - Processes and analyzes keywords

- .env - Stores private values such as your API key

- requirements.txt - Project dependencies

Step 1: Installing Dependencies

Install the required Python packages -

pip install google-search-results python-dotenv

- google-search-results - A Python client for SerpApi that retrieves structured search results from supported engines (such as Amazon Search, Bing, and eBay) without building your own scraper.

- python-dotenv - Loads environment variables from a .env file, allowing sensitive values such as API keys to be kept outside the source code.

Step 2: Configuration

Create a .env file in the project folder to store your API key -

SERPAPI_API_KEY="your_api_key"
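A missing or misspelled key would otherwise only surface later as a failed API request, so it can help to check the variable as soon as it is read. The helper below is a minimal sketch of that check; the name `require_env` and the error message are assumptions made for this example, not part of python-dotenv.

```python
import os


def require_env(name, environ=None):
    """Return the value of an environment variable or raise a clear error.

    `environ` defaults to os.environ; passing a dict makes the helper
    easy to try out without touching the real environment.
    """
    environ = os.environ if environ is None else environ
    value = environ.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"{name} is not set. Add it to your .env file or shell environment."
        )
    return value


# Example with an explicit mapping instead of the real environment.
print(require_env("SERPAPI_API_KEY", {"SERPAPI_API_KEY": "your_api_key"}))
```

In a script that calls `load_dotenv()` first, `require_env("SERPAPI_API_KEY")` could be used in place of a plain `os.getenv` call. It is also common to list .env in .gitignore so the key is not committed.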

Step 3: Fetching Amazon Search Results

In tracker.py, add the following code -

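import os

from dotenv import load_dotenv
from serpapi import GoogleSearch

# Load variables from .env into the process environment
load_dotenv()

SERPAPI_API_KEY = os.getenv("SERPAPI_API_KEY")


def fetch_amazon_search_results(query):
    # "k" carries the search query for the Amazon engine
    params = {
        "engine": "amazon",
        "k": query,
        "api_key": SERPAPI_API_KEY,
    }
    search = GoogleSearch(params)
    results = search.get_dict()
    # Fall back to an empty list when no organic results are present
    return results.get("organic_results", [])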

This function retrieves Amazon search results for a given keyword.
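Because the function falls back to an empty list, a search with no results and a failed request look the same to the caller. A minimal sketch of separating those cases follows. It assumes that a failed request is reported through an `error` key in the returned dictionary, which you should confirm against the API documentation; the helper name `organic_results_or_raise` is hypothetical.

```python
def organic_results_or_raise(response):
    """Return organic results, or raise if the response reports an error.

    Assumption: failures are signalled by an "error" key in the response dict.
    """
    if "error" in response:
        raise RuntimeError(f"Search request failed: {response['error']}")
    return response.get("organic_results", [])


# Hand-written sample responses for illustration (no network access).
ok_response = {"organic_results": [{"title": "Example Headphones"}]}
empty_response = {}

print(organic_results_or_raise(ok_response))
print(organic_results_or_raise(empty_response))
```

With a check like this, an invalid key or exhausted quota stops the script with a message instead of silently producing an empty keyword list.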

The Amazon Search API supports several optional parameters that allow you to refine and localize keyword research results. These parameters are useful when you want keyword data that reflects a specific market, shipping region, or sorting preference.

For example, the amazon_domain parameter selects which Amazon site is searched. By default, the API uses amazon.com. Other domains such as amazon.co.uk, amazon.de, or amazon.co.jp allow you to perform Amazon keyword research for different regions.

For a complete list of supported parameters and examples, refer to the official [SerpApi Amazon Search API documentation].
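As a rough sketch of how an optional parameter could be wired in, the helper below builds the request parameters and adds `amazon_domain` only when one is given. The function name `build_search_params` is an assumption for this example; the `engine`, `k`, `api_key`, and `amazon_domain` keys are the ones mentioned in this tutorial.

```python
def build_search_params(query, api_key, amazon_domain=None):
    """Build the request parameters, adding amazon_domain only when provided."""
    params = {
        "engine": "amazon",
        "k": query,
        "api_key": api_key,
    }
    if amazon_domain:
        # Omitting the key leaves the API on its default domain.
        params["amazon_domain"] = amazon_domain
    return params


default_params = build_search_params("wireless headphones", "your_api_key")
uk_params = build_search_params(
    "wireless headphones", "your_api_key", amazon_domain="amazon.co.uk"
)

print(default_params)
print(uk_params)
```

The resulting dictionary can be passed to `GoogleSearch` in the same way as the `params` dictionary in `fetch_amazon_search_results`.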

Step 4: Extracting Product Titles

Next, extract product titles from the search results -

def extract_titles(results):
    return [
        item.get("title")
        for item in results
        if item.get("title")
    ]

Product titles often contain the most important keywords sellers are targeting.
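If the same title appears more than once in a result set, its words are counted once per appearance in the frequency step. Whether that is desirable depends on the analysis. The variant below is an optional sketch that keeps only the first occurrence of each exact title; `extract_unique_titles` is a hypothetical name, and the sample data is hand-written.

```python
def extract_unique_titles(results):
    """Collect non-empty titles, skipping exact duplicates while keeping order."""
    seen = set()
    titles = []
    for item in results:
        title = (item.get("title") or "").strip()
        if title and title not in seen:
            seen.add(title)
            titles.append(title)
    return titles


# Hand-written sample listings for illustration.
sample_results = [
    {"title": "Wireless Headphones A"},
    {"title": "Wireless Headphones A"},
    {"price": "$19.99"},
    {"title": "Bluetooth Headset B"},
]

unique_titles = extract_unique_titles(sample_results)
print(unique_titles)
```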

Step 5: Keyword Frequency Analysis

Amazon product titles often contain filler words, punctuation, and formatting variations. To produce cleaner keyword insights, we can apply basic text normalization before counting keyword frequency.

We use NLTK for tokenization and stop-word removal. This improves keyword quality while keeping the analysis easy to understand.

Installing NLTK

Install NLTK if it is not already installed -

pip install nltk

Create analysis.py to analyze recurring keywords -

from collections import Counter

import nltk
from nltk.corpus import stopwords
from nltk.tokenize import word_tokenize

# Download required NLTK data if not present
try:
    nltk.data.find('tokenizers/punkt_tab')
except LookupError:
    nltk.download('punkt_tab')

try:
    nltk.data.find('corpora/stopwords')
except LookupError:
    nltk.download('stopwords')

STOP_WORDS = set(stopwords.words("english"))


def analyze_keywords(titles, top_n=15):
    words = []
    for title in titles:
        tokens = word_tokenize(title.lower())
        # Keep purely alphabetic tokens longer than two characters that are
        # not stop words. isalpha() already rejects punctuation and digits,
        # so no separate punctuation stripping is needed.
        cleaned = [
            token
            for token in tokens
            if token not in STOP_WORDS
            and token.isalpha()
            and len(token) > 2
        ]
        words.extend(cleaned)
    return Counter(words).most_common(top_n)
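To see what this normalization does without installing NLTK, the same idea can be approximated with the standard library. This is a simplified sketch, not a replacement for the NLTK version: the regular-expression tokenizer, the short `BASIC_STOP_WORDS` set, and the function name `simple_keyword_counts` are all assumptions for illustration, and results can differ in edge cases such as hyphenated words.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list; NLTK's English list is much longer.
BASIC_STOP_WORDS = {"the", "and", "for", "with", "a", "an", "of", "in", "on", "to"}


def simple_keyword_counts(titles, top_n=15):
    """Count recurring words across titles after basic normalization."""
    words = []
    for title in titles:
        # Keep runs of ASCII letters only, which drops digits and punctuation.
        tokens = re.findall(r"[a-z]+", title.lower())
        words.extend(
            token
            for token in tokens
            if token not in BASIC_STOP_WORDS and len(token) > 2
        )
    return Counter(words).most_common(top_n)


# Hand-written sample titles for illustration.
sample_titles = [
    "Wireless Headphones with Noise Cancelling, 40H Playtime",
    "Bluetooth Headphones for Travel - Wireless, Foldable",
]

counts = simple_keyword_counts(sample_titles)
print(counts)
```

In this sample, "wireless" and "headphones" each appear in both titles, so they end up with a count of 2, while filler words such as "with" and "for" are dropped.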

Step 6: Running the Keyword Research System

Update tracker.py to include an entry point -

from analysis import analyze_keywords


def main():
    query = "wireless headphones"
    results = fetch_amazon_search_results(query)
    titles = extract_titles(results)
    keywords = analyze_keywords(titles)

    print("Top Amazon keywords:")
    for word, count in keywords:
        print(f"{word}: {count}")


if __name__ == "__main__":
    main()

Run the script:

python tracker.py

Output

Top Amazon keywords:
headphones: 24
wireless: 23
bluetooth: 18
black: 12
noise: 11
lightweight: 10
cancelling: 10
ear: 9
foldable: 9
headset: 9
bass: 9
battery: 9
life: 9
playtime: 8
jbl: 8

How This System Can Be Extended

This basic Amazon keyword research system can be extended in several ways -

- Analyze multiple seed keywords

- Export keywords to CSV files

- Group keywords by product features

- Track keyword trends over time

- Integrate with SEO or advertising tools

The core architecture remains the same.
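Two of these extensions, analyzing multiple seed keywords and exporting to CSV, can be sketched with the standard library alone. The helper names `merge_keyword_counts` and `write_keywords_csv` are hypothetical, and the sample counts are made-up values in the same `(word, count)` format that `analyze_keywords` returns.

```python
import csv
import io
from collections import Counter


def merge_keyword_counts(per_seed_counts):
    """Sum (word, count) pairs from several seed keywords into one Counter."""
    total = Counter()
    for pairs in per_seed_counts:
        for word, count in pairs:
            total[word] += count
    return total


def write_keywords_csv(counts, file_obj):
    """Write keywords and counts as CSV rows, most frequent first."""
    writer = csv.writer(file_obj)
    writer.writerow(["keyword", "count"])
    for word, count in counts.most_common():
        writer.writerow([word, count])


# Made-up sample results for two seed keywords.
seed_a = [("headphones", 24), ("wireless", 23)]
seed_b = [("wireless", 10), ("earbuds", 7)]

merged = merge_keyword_counts([seed_a, seed_b])

# An in-memory buffer stands in for a real file in this example.
buffer = io.StringIO()
write_keywords_csv(merged, buffer)
print(buffer.getvalue())
```

To write to disk instead, the buffer can be swapped for a file opened with `open("keywords.csv", "w", newline="")`, which is the form the `csv` module documentation recommends.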

Conclusion

Amazon keyword research plays a crucial role in product visibility and discoverability. Manual methods are limited by personalization, scale, and lack of structure.

By using Amazon search data and Python, you can build automated keyword research systems that extract meaningful insights from Amazon listings.

Try It Yourself

If you'd like to experiment with this system using real Amazon search data, you can [sign up] for SerpApi and get access to the Amazon Search API.

SerpApi offers a free plan with up to 250 searches per month, which is sufficient for testing, prototyping, and small research projects. This allows you to run the examples in this tutorial and explore Amazon keyword research without setting up scraping infrastructure.

You can create a free account on the SerpApi website and obtain an API key to get started.

The complete source code for this tutorial is available in our [GitHub repository].