A/B Testing - Interview Questions

A/B Testing (also known as split testing) is a way to compare two versions of an application or a web page to determine which one performs better. It is one of the easiest ways to evaluate a change: you modify an application or a web page to create a new version, then compare the conversion rates of both versions to find the better performer of the two.

The number of samples depends on the number of tests performed. Each recorded conversion-rate measurement is called a sample, and the process of collecting these samples is called sampling.

A confidence interval measures how far results deviate from the average across multiple samples. Suppose 22% of people prefer Product A, with a confidence interval of +/- 2%. The interval gives the lower and upper limits (20% and 24%) of the share of people who opt for Product A, and the +/- 2% half-width is called the margin of error. For the best results in such a survey, the margin of error should be as small as possible.
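As a rough illustration of where a +/- 2% figure can come from, the sketch below computes the margin of error for an observed proportion using the normal approximation. The sample size of 1,650 respondents and the 95% confidence level are assumptions chosen for the example, not values from the text, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def margin_of_error(p: float, n: int, confidence: float = 0.95) -> float:
    """Normal-approximation margin of error for a proportion p observed in n samples."""
    # Two-sided critical value, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z * math.sqrt(p * (1 - p) / n)


def confidence_interval(p: float, n: int, confidence: float = 0.95) -> tuple:
    """Lower and upper limits of the interval around p."""
    moe = margin_of_error(p, n, confidence)
    return p - moe, p + moe


# Assumed survey: 22% of 1,650 respondents prefer Product A.
low, high = confidence_interval(0.22, 1650)
print(f"{low:.1%} to {high:.1%}")  # roughly 20% to 24%
```

Because the margin of error shrinks with the square root of the sample size, halving it requires about four times as many samples.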

Perform A/B Testing when there is a reasonable probability of beating the original variation by more than 5%. Run the test for a considerable amount of time so that you have enough sample data for statistical analysis. A/B Testing also enables you to get the most out of the existing traffic on a webpage.
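How much data counts as "enough" can be estimated before the test starts. The following is a minimal sketch of the standard two-proportion sample-size formula. It assumes the 5% figure is a relative lift over the baseline conversion rate, and the 10% baseline, the 5% significance level, and the 80% power are illustrative assumptions rather than values from the text.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline: float, relative_lift: float,
                              alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per variation to detect the given relative lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Assumed scenario: 10% baseline conversion rate, hoping for a 5% relative lift.
print(sample_size_per_variation(0.10, 0.05))
```

Small lifts on low baseline rates need tens of thousands of visitors per variation, which is why a test has to run long enough to accumulate that traffic.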

The cost of increasing your conversions is minimal compared to the cost of acquiring traffic for your website. The ROI (return on investment) of A/B Testing can be very high, as a few minor changes on a website can result in a significant increase in the conversion rate.

Multivariate testing is based on the same mechanism as A/B Testing, but it compares a higher number of variables and provides more information about how these variables behave together. In A/B Testing, you split the traffic of a page between different versions of the design. Multivariate testing is used to measure the effectiveness of each design combination.
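To see why multivariate tests need more traffic than a plain A/B test, it helps to enumerate the page versions they produce. The sketch below is only an illustration: the element names and their candidate values are hypothetical.

```python
from itertools import product

# Hypothetical page elements and the versions to test for each.
elements = {
    "headline": ["Original headline", "New headline"],
    "image": ["Photo", "Illustration"],
    "cta": ["Buy now", "Start free trial", "Learn more"],
}


def all_combinations(elements: dict) -> list:
    """Return every combination of element versions as a list of dicts."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


combos = all_combinations(elements)
print(len(combos))  # 2 * 2 * 3 = 12 page versions share the traffic
```

Each added element multiplies the number of versions, so the traffic available to each version drops quickly.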

The problem with testing multiple variables at once is that it is hard to determine accurately which of them made the difference. You can say that one page performed better than the other, but if each page has three or four variables, you can't be certain which of those variables helped and which was actually a detriment to the page, nor can you replicate the good elements on other pages.

Here are a few A/B Testing variations that can be applied on a web page. The list includes − Headlines, Sub headlines, Images, Texts, CTA text and button, Links, Badges, Media mentions, Social mentions, Sales promotions and offers, Price structure, Delivery options, Payment options, Site navigation and user interface.

Background Research − The first step in A/B Testing is to find out the bounce rate of your website. This can be done with a tool such as Google Analytics.

Collect Data − Data from Google Analytics can help you understand visitor behavior. It is always advisable to collect enough data from the site. Look for pages with low conversion rates or high drop-off rates that can be improved.

Set Business Goals − The next step is to set your conversion goals. Find the metrics that determine whether or not the variation is more successful than the original version.

Construct Hypothesis − Once the goal and metrics have been set for A/B Testing, the next step is to come up with ideas for improving the original version and to state why they should perform better than the current version. Once you have a list of ideas, prioritize them in terms of expected impact and difficulty of implementation.

Create Variations/Hypothesis − There are many A/B Testing tools on the market that have a visual editor for making these changes effectively. Selecting the correct tool is a key decision in performing A/B Testing successfully.

Running the Variations − Present all variations of your website or app to visitors and monitor their actions for each variation. Visitor interaction with each variation is measured and compared to determine how that variation performs.

Analyze Data − Once an experiment is completed, the next step is to analyze the results. The A/B Testing tool presents the data from the experiment and shows the difference in how the variations of the web page performed. It also indicates, using statistical methods, whether there is a significant difference between the variations.
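The "Running the Variations" step above depends on each visitor consistently seeing the same variation. One common way to achieve this is deterministic hash-based bucketing, sketched below. This is an assumed approach for illustration, not the method of any particular tool, and the function name, visitor IDs, and experiment name are hypothetical.

```python
import hashlib
from typing import Sequence


def assign_variation(visitor_id: str, experiment: str, variations: Sequence[str]) -> str:
    """Deterministically map a visitor to one variation with a roughly even split."""
    # Including the experiment name keeps assignments independent across experiments.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variations)
    return variations[bucket]


variations = ["original", "variation"]
# The same visitor always lands in the same bucket for a given experiment.
print(assign_variation("visitor-42", "homepage-headline", variations))
```

Because the assignment is a pure function of the visitor ID and experiment name, no lookup table is needed to keep returning visitors in the same group.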

The most common types of data collection tools include analytics tools, replay tools, survey tools, and chat and email tools.

Replay tools are used to get better insight into user actions on your website. They also provide click maps and heat maps of user clicks and show how far a user browses on the website. Replay tools like Mouseflow let you view a visitor's session as if you were with the visitor.

Video replay tools give deeper insight into what a visitor experiences while browsing the various pages on your website. The most commonly used tools are Mouseflow and Crazy Egg.

Survey tools are used to collect qualitative feedback from visitors to the website. This involves asking returning visitors some survey questions. The survey asks general questions and lets visitors enter their own views or select from predefined choices.

You can reduce the bounce rate by adding more images at the bottom of the page. You can also add links to social sites to further increase the conversion rate.

There are different types of variations that can be applied to an object, such as using bullets, changing the numbering of key elements, or changing the font and color. There are many A/B Testing tools on the market that have a visual editor for making these changes effectively. Selecting the correct tool is a key decision in performing A/B Testing successfully.

The most commonly available tools are Visual Website Optimizer, Google Content Experiments, and Optimizely.

Visual Website Optimizer (VWO) enables you to test multiple versions of the same page. It also contains a what you see is what you get (WYSIWYG) editor that lets you make changes and run tests without changing the HTML code of the page. You can update headlines or the numbering of elements and run a test without involving IT resources.

To create variations in VWO for A/B Testing, open your webpage in the WYSIWYG editor, where you can apply many changes to any web page. These include Change Text, Change URL, Edit/Edit HTML, Rearrange and Move.

Visual Website Optimizer also provides an option for multivariate testing and contains a number of other tools for behavioral targeting, heat maps, usability testing, etc.

These tests can also be applied in several other places, such as email, mobile apps, PPC and CTAs.

Once an experiment is completed, the next step is to analyze the results. The A/B Testing tool presents the data from the experiment and shows the difference in how the variations of that web page performed. It also shows, using statistical methods, whether there is a significant difference between the variations.
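One widely used check for a significant difference between two conversion rates is the two-proportion z-test. The sketch below shows the idea only. Individual A/B Testing tools may use different statistics, and the visitor and conversion counts are made up for the example.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variations convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up results: original converts 200 of 5,000 visitors, variation 250 of 5,000.
z, p_value = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

With these made-up numbers the p-value falls below 0.05, so the difference would usually be treated as statistically significant at the 95% level.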

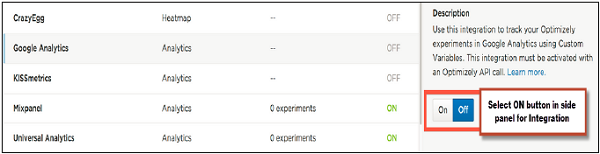

To integrate Optimizely with Universal Google Analytics, first select the ON button on the side panel. You must then have an available Custom to populate with Optimizely experiment data.

The Universal Google Analytics tracking code must be placed at the bottom of the `<head>` section of your pages. Google Analytics integration will not function properly unless the Optimizely snippet is above the Analytics snippet.
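The ordering requirement can be checked automatically. The sketch below scans a page's head section and confirms that the Optimizely snippet appears before the Analytics snippet. It assumes the snippets can be recognized by the substrings `cdn.optimizely.com` and `google-analytics.com/analytics.js`. The function name, the sample page, and the `PROJECT_ID` placeholder are hypothetical.

```python
import re


def optimizely_before_analytics(html: str,
                                optimizely_marker: str = "cdn.optimizely.com",
                                analytics_marker: str = "google-analytics.com/analytics.js") -> bool:
    """True if both markers appear in the head section and Optimizely comes first."""
    # Only inspect the part of the document before the closing head tag, if present.
    match = re.search(r"</head\s*>", html, re.IGNORECASE)
    head = html[:match.start()] if match else html
    opt = head.find(optimizely_marker)
    ga = head.find(analytics_marker)
    return opt != -1 and ga != -1 and opt < ga


# Hypothetical page used only to exercise the check.
page = """<html><head>
<title>Landing page</title>
<script src="//cdn.optimizely.com/js/PROJECT_ID.js"></script>
<script>
  // Placeholder standing in for the Universal Analytics snippet.
  (function () { var src = "https://www.google-analytics.com/analytics.js"; })();
</script>
</head><body></body></html>"""

print(optimizely_before_analytics(page))  # True
```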

Google Analytics has two options for analyzing the data: Universal Analytics and Classic Google Analytics. The newer Universal Analytics features allow up to 20 concurrent A/B tests to send data to Google Analytics, whereas the Classic version allows only up to five.

It is a myth that A/B Testing hurts search engine rankings because it could be classified as duplicate content. The following four practices help ensure that you don't lose potential SEO value while running A/B Tests.

Don't Cloak − Cloaking is when you show one version of your webpage to the Googlebot user agent and another version to your website visitors.

Use rel=canonical − When you run A/B Tests with multiple URLs, you can add rel=canonical to the variation pages to indicate to Google which URL you want indexed. Google suggests using the canonical element rather than a noindex tag, as it is more in line with its intention.

Use 302 redirects and not 301s − Google recommends using the temporary redirect method, a 302, over the permanent 301 redirect.

Don't run experiments longer than necessary − When your A/B Test is completed, remove the variations as soon as possible, update your webpage, and start using the winning variation.
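Two of the practices above, the canonical element and the temporary redirect, are easy to get wrong in code. The sketch below shows one way they might be generated on the server side. The helper names and example URLs are hypothetical, and a real site would emit these through its own web framework.

```python
from html import escape
from http import HTTPStatus


def canonical_link_tag(original_url: str) -> str:
    """Link element for a variation page's head, pointing at the original URL."""
    return f'<link rel="canonical" href="{escape(original_url, quote=True)}">'


def temporary_redirect(variation_url: str) -> tuple:
    """Status code and headers for sending a visitor to a variation with a 302."""
    # HTTPStatus.FOUND is 302 (temporary); 301 would signal a permanent move.
    return HTTPStatus.FOUND.value, {"Location": variation_url}


print(canonical_link_tag("https://example.com/pricing"))
print(temporary_redirect("https://example.com/pricing-variation-b"))
```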