What Are Restricted Boltzmann Machines?

Introduction

The Restricted Boltzmann Machine (RBM) is a restricted form of the Boltzmann machine, which Geoffrey Hinton co-developed in 1985. The restricted variant was first described by Paul Smolensky in 1986 under the name "Harmonium" and was later popularized by Hinton. An RBM is a network of symmetrically connected, neuron-like units that make stochastic decisions. This deep learning model gained wide attention after the Netflix Prize competition, where RBMs were used as a collaborative filtering technique to predict users' movie ratings and performed better than most competing approaches. RBMs are useful for collaborative filtering, feature learning, dimensionality reduction, regression, and classification.

Let's look at restricted Boltzmann machines in more depth.

Restricted Boltzmann Machine

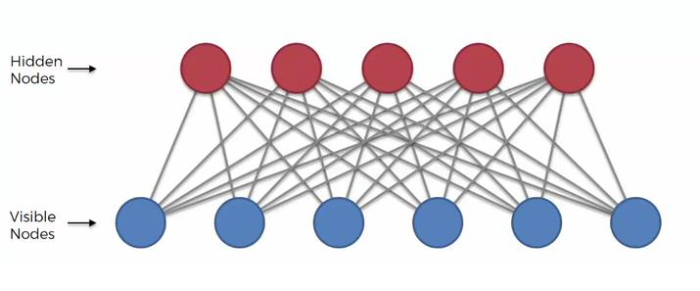

Restricted Boltzmann Machines are stochastic two-layer neural networks that can automatically identify underlying patterns in data by reconstructing their input. They are a type of energy-based model. Their two layers are a visible layer, which receives the input, and a hidden layer, whose nodes capture features (characteristic information) of the data. They have no output nodes, which might seem strange, and they do not produce a labeled 1-or-0 output that is compared against a target, as a supervised classifier would. What sets them apart is that they learn without such a target. Only the input (visible) nodes are observed; the hidden nodes are never set directly from the data.

RBMs have been used in a number of practical applications, including:

Pattern Recognition: Because handwritten text and arbitrary patterns can be difficult to interpret directly, RBMs are used for feature extraction in pattern recognition applications.

Recommendation Engines: RBMs are frequently used in information retrieval approaches to predict which items should be recommended to a user of a particular application or platform, for example which books or movies that user is likely to enjoy.

Radar Target Recognition: Here, RBMs are used to detect intra-pulse characteristics in radar signals that have high noise levels and a very low signal-to-noise ratio (SNR).

Restricted Boltzmann Machine Features

Some key characteristics of the restricted Boltzmann machine are:

They use symmetric, recurrent connections: the weight between a visible unit and a hidden unit is the same in both directions.

During learning, RBMs try to assign low energy to high-probability configurations and high energy to low-probability ones.

There are no connections between units within the same layer.

They learn from input data without labeled responses, which makes them an unsupervised learning algorithm.
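To make the energy-based view concrete, here is the standard energy function for an RBM with binary units. The symbols are our own notation rather than the original's: v and h are the visible and hidden vectors, a and b their bias vectors, and W the weight matrix.

```latex
E(\mathbf{v}, \mathbf{h}) = -\sum_i a_i v_i \;-\; \sum_j b_j h_j \;-\; \sum_{i,j} v_i W_{ij} h_j
\qquad
p(\mathbf{v}, \mathbf{h}) = \frac{e^{-E(\mathbf{v}, \mathbf{h})}}{Z},
\quad
Z = \sum_{\mathbf{v}', \mathbf{h}'} e^{-E(\mathbf{v}', \mathbf{h}')}
```

Because probability falls exponentially with energy, lowering a configuration's energy raises its probability. The energy has no v_i v_k or h_j h_l terms, which is exactly the absence of intra-layer connections, and the single weight W_ij shared by both directions is the symmetric connection.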

In this section, we contrast a Boltzmann machine with a restricted Boltzmann machine. Both have two layers: a visible layer and a hidden layer. A general Boltzmann machine connects every neuron to every other neuron: neurons within the visible layer, neurons within the hidden layer, and every visible neuron to every hidden neuron. An RBM differs in that neurons within the same layer are not connected; there is no intra-layer communication. As a result, the hidden units are conditionally independent of one another given the visible units, and vice versa. This conditional independence makes the model easier to implement, because we only need to compute the probability of each unit separately rather than a joint probability over the whole layer.
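The conditional-independence claim can be checked numerically. This minimal sketch assumes binary units and the usual RBM energy; the toy weights are hypothetical. It computes p(h | v) once by brute-force enumeration of every hidden configuration and once as a product of independent per-unit sigmoids, and the two agree.

```python
import itertools
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def energy(v, h, W, a, b):
    # E(v, h) = -sum_i a_i v_i - sum_j b_j h_j - sum_ij v_i W_ij h_j
    e = -sum(ai * vi for ai, vi in zip(a, v))
    e -= sum(bj * hj for bj, hj in zip(b, h))
    e -= sum(v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h)))
    return e

def p_h_given_v_bruteforce(h, v, W, a, b):
    # Exact p(h | v): normalize exp(-E) over every possible hidden configuration.
    num = math.exp(-energy(v, h, W, a, b))
    den = sum(math.exp(-energy(v, hh, W, a, b))
              for hh in itertools.product([0, 1], repeat=len(b)))
    return num / den

def p_h_given_v_factorized(h, v, W, b):
    # No hidden-hidden connections, so p(h | v) is a product of independent terms
    # p(h_j = 1 | v) = sigmoid(b_j + sum_i v_i W_ij).
    p = 1.0
    for j, hj in enumerate(h):
        pj = sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
        p *= pj if hj == 1 else 1.0 - pj
    return p

# Hypothetical toy parameters: 3 visible units, 2 hidden units.
W = [[0.5, -1.0], [0.2, 0.3], [-0.7, 0.8]]
a = [0.1, -0.2, 0.0]
b = [0.05, -0.1]
v = (1, 0, 1)

for h in itertools.product([0, 1], repeat=len(b)):
    exact = p_h_given_v_bruteforce(h, v, W, a, b)
    fact = p_h_given_v_factorized(h, v, W, b)
    print(h, round(exact, 6), round(fact, 6))
```

The brute-force version needs 2^(number of hidden units) energy evaluations, while the factorized one needs only one sigmoid per hidden unit; that gap is the practical payoff of the "restricted" structure.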

Operation of RBM

As mentioned earlier, an RBM is an unsupervised learning method, so how does it learn without any output data? In the forward pass, each hidden neuron multiplies the values it receives from the visible neurons by the corresponding weights, sums the products, adds its own bias, and passes the result through an activation function (typically the logistic sigmoid) to produce its output. In the backward pass, the hidden neurons' outputs become the new input: they are multiplied by the same weights, and each visible neuron adds its bias to produce a new version of the input. This procedure is called reconstruction, or the backward pass. The reconstructed input is then compared with the original input to see how closely they match, and that difference guides how the weights are adjusted during training.
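The forward and backward passes described above can be sketched as follows. This is a minimal illustration, assuming sigmoid activations and hypothetical toy weights; for simplicity it passes probabilities between the layers instead of sampling binary states, a common simplification when measuring reconstruction.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(v, W, b):
    # Forward pass: hidden unit j multiplies each input v[i] by W[i][j],
    # sums the products, adds its bias b[j], and applies the sigmoid.
    return [sigmoid(sum(v[i] * W[i][j] for i in range(len(v))) + b[j])
            for j in range(len(b))]

def backward(h, W, a):
    # Backward pass (reconstruction): the hidden outputs are multiplied by the
    # SAME weights, used in the other direction, and the visible bias a[i] is added.
    return [sigmoid(sum(h[j] * W[i][j] for j in range(len(h))) + a[i])
            for i in range(len(a))]

def reconstruct(v, W, a, b):
    return backward(forward(v, W, b), W, a)

def reconstruction_error(v, v_recon):
    # Compare the original input with its reconstruction (sum of squared differences).
    return sum((x - y) ** 2 for x, y in zip(v, v_recon))

# Hypothetical parameters: 4 visible units, 2 hidden units.
W = [[0.8, -0.3], [-0.5, 0.9], [0.7, -0.2], [-0.4, 0.6]]
a = [0.0, 0.0, 0.0, 0.0]
b = [0.1, -0.1]
v = [1, 0, 1, 0]
v_recon = reconstruct(v, W, a, b)
print([round(p, 3) for p in v_recon], round(reconstruction_error(v, v_recon), 3))
```

Training, discussed next, is what adjusts W, a, and b so that this reconstruction error shrinks on the training data.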

Training an RBM

RBMs are trained using Gibbs sampling and contrastive divergence.

If the input is denoted by v and the hidden values by h, then p(h|v) is used to predict the hidden values from the input, and p(v|h) is used to regenerate the input when the hidden values are known. Suppose this back-and-forth procedure, known as Gibbs sampling, is carried out k times: starting from the input value v_0, we obtain v_k at the end of the k rounds.
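The k rounds of Gibbs sampling can be sketched as a short loop. This assumes binary units with sigmoid conditionals; the parameter values and the function names are hypothetical, chosen only for illustration.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sample_h_given_v(v, W, b, rng):
    # p(h_j = 1 | v) = sigmoid(b_j + sum_i v_i W_ij), sampled independently per unit.
    probs = [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
             for j in range(len(b))]
    return [1 if rng.random() < p else 0 for p in probs]

def sample_v_given_h(h, W, a, rng):
    # p(v_i = 1 | h) = sigmoid(a_i + sum_j W_ij h_j), sampled independently per unit.
    probs = [sigmoid(a[i] + sum(W[i][j] * h[j] for j in range(len(h))))
             for i in range(len(a))]
    return [1 if rng.random() < p else 0 for p in probs]

def gibbs_chain(v0, W, a, b, k, rng):
    """Run k rounds of block Gibbs sampling starting from the input v0.

    Each round samples h from p(h | v), then a new v from p(v | h);
    the vector returned after k rounds is v_k.
    """
    v = list(v0)
    for _ in range(k):
        h = sample_h_given_v(v, W, b, rng)
        v = sample_v_given_h(h, W, a, rng)
    return v

# Hypothetical parameters: 3 visible units, 2 hidden units.
W = [[0.5, -1.0], [0.2, 0.3], [-0.7, 0.8]]
a = [0.1, -0.2, 0.0]
b = [0.05, -0.1]
rng = random.Random(0)
v0 = [1, 0, 1]
vk = gibbs_chain(v0, W, a, b, k=3, rng=rng)
print(v0, "->", vk)
```

All hidden units are sampled in one block, and then all visible units, which is possible only because units within a layer are not connected.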

Contrastive divergence is a rough approximation to maximum-likelihood learning. It approximates the gradient, the slope that describes how the model's error (for an RBM, the negative log-likelihood of the training data) changes with the network's weights. It is used when we cannot evaluate a function or a set of probabilities directly, typically because the normalizing constant sums over every possible configuration, and must instead approximate the learning gradient to decide which direction to move the weights.
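Below is a minimal sketch of contrastive divergence (CD-k) training, assuming binary units, a hypothetical two-pattern toy dataset, and arbitrary hyperparameters. The weight update is the standard CD-k rule: for each pair (i, j), add lr * (v0_i * p(h_j=1 | v0) - vk_i * p(h_j=1 | vk)), that is, statistics from the data minus statistics after k Gibbs steps. The function names are our own.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def h_probs(v, W, b):
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b))]

def v_probs(h, W, a):
    return [sigmoid(a[i] + sum(h[j] * W[i][j] for j in range(len(h))))
            for i in range(len(a))]

def bernoulli(probs, rng):
    return [1 if rng.random() < p else 0 for p in probs]

def cd_k_update(v0, W, a, b, k, lr, rng):
    """One CD-k step on a single training vector; updates W, a, b in place."""
    if k < 1:
        raise ValueError("CD-k needs at least one Gibbs step (k >= 1)")
    ph0 = h_probs(v0, W, b)              # positive phase: driven by the data
    h = bernoulli(ph0, rng)
    for _ in range(k):                   # negative phase: k Gibbs steps from v0
        vk = bernoulli(v_probs(h, W, a), rng)
        phk = h_probs(vk, W, b)
        h = bernoulli(phk, rng)
    # Gradient approximation: data statistics minus statistics after k steps.
    for i in range(len(a)):
        for j in range(len(b)):
            W[i][j] += lr * (v0[i] * ph0[j] - vk[i] * phk[j])
        a[i] += lr * (v0[i] - vk[i])
    for j in range(len(b)):
        b[j] += lr * (ph0[j] - phk[j])

def recon_error(data, W, a, b):
    # Deterministic (probability-based) reconstruction error over the dataset.
    total = 0.0
    for v in data:
        vr = v_probs(h_probs(v, W, b), W, a)
        total += sum((x - y) ** 2 for x, y in zip(v, vr))
    return total

rng = random.Random(42)
data = [[1, 1, 0, 0], [0, 0, 1, 1]]      # hypothetical toy training set
n_visible, n_hidden = 4, 2
W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
a = [0.0] * n_visible
b = [0.0] * n_hidden

err_before = recon_error(data, W, a, b)
for epoch in range(2000):
    for v in data:
        cd_k_update(v, W, a, b, k=1, lr=0.1, rng=rng)
err_after = recon_error(data, W, a, b)
print(round(err_before, 3), round(err_after, 3))
```

CD-1 (k=1) is a common choice in practice because a single Gibbs step is cheap and often good enough; larger k gives a less biased gradient estimate at higher cost.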

RBM Applications

Handwritten character recognition is a common problem today, needed in contemporary applications such as forensic evidence analysis, office automation, check verification, and data entry. It is made harder by inconsistent writing styles, variations in size and shape, and image noise that alters the topology of the digits. One approach to digit recognition uses a hybrid RBM-CNN technique: an RBM first extracts features from the images, and these features are then fed into a convolutional neural network (CNN) for classification.
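The two-stage structure of such a hybrid pipeline can be sketched as follows. This is a hypothetical illustration only: the original does not specify the RBM or CNN configuration, and to keep the example short and dependency-free we swap the CNN for a simple nearest-centroid classifier. The toy 6-pixel "images", the labels, and all function names (train_rbm, extract_features, fit_centroids, predict) are our own.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def h_probs(v, W, b):
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b))]

def v_probs(h, W, a):
    return [sigmoid(a[i] + sum(h[j] * W[i][j] for j in range(len(h))))
            for i in range(len(a))]

def bernoulli(probs, rng):
    return [1 if rng.random() < p else 0 for p in probs]

def train_rbm(data, n_hidden, epochs, lr, rng):
    # Stage 1: unsupervised CD-1 training; the labels are never used here.
    n_visible = len(data[0])
    W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
    a, b = [0.0] * n_visible, [0.0] * n_hidden
    for _ in range(epochs):
        for v0 in data:
            ph0 = h_probs(v0, W, b)
            v1 = bernoulli(v_probs(bernoulli(ph0, rng), W, a), rng)
            ph1 = h_probs(v1, W, b)
            for i in range(n_visible):
                for j in range(n_hidden):
                    W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
                a[i] += lr * (v0[i] - v1[i])
            for j in range(n_hidden):
                b[j] += lr * (ph0[j] - ph1[j])
    return W, b

def extract_features(images, W, b):
    # Hidden-unit probabilities are used as the learned feature vector.
    return [h_probs(v, W, b) for v in images]

def fit_centroids(features, labels):
    # Stage 2 (stand-in for the CNN): average feature vector per class.
    sums, counts = {}, {}
    for f, y in zip(features, labels):
        s = sums.setdefault(y, [0.0] * len(f))
        for j, x in enumerate(f):
            s[j] += x
        counts[y] = counts.get(y, 0) + 1
    return {y: [x / counts[y] for x in s] for y, s in sums.items()}

def predict(feature, centroids):
    # Assign the class whose centroid is closest in squared Euclidean distance.
    return min(centroids, key=lambda y: sum((x - c) ** 2
                                             for x, c in zip(feature, centroids[y])))

# Hypothetical toy data: two classes of 6-pixel binary patterns.
images = [
    [1, 1, 1, 0, 0, 0], [1, 1, 0, 0, 0, 0], [1, 0, 1, 0, 0, 0],
    [0, 0, 0, 1, 1, 1], [0, 0, 0, 1, 1, 0], [0, 0, 0, 0, 1, 1],
]
labels = [0, 0, 0, 1, 1, 1]

rng = random.Random(0)
W, b = train_rbm(images, n_hidden=3, epochs=500, lr=0.1, rng=rng)
features = extract_features(images, W, b)
centroids = fit_centroids(features, labels)
predictions = [predict(f, centroids) for f in features]
print(predictions)
```

In the hybrid approach described above, only the interface carries over from this sketch: the RBM turns raw pixels into feature vectors, and a separate supervised model (there, a CNN) maps those features to class labels.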

Conclusion

In short, Restricted Boltzmann Machines are two-layer unsupervised neural models that learn the distribution of their input. RBMs and the algorithms used to train and optimize them have undergone several modifications and improvements. They are trained with contrastive divergence, and after training they can generate new samples that resemble the training data. Infinite RBMs and Fuzzy RBMs are two examples of variants designed to improve the original RBM's effectiveness and representational power.