Stable Diffusion - Quick Guide

Stable Diffusion - Overview

Stable Diffusion enables the generation of high-quality images from textual descriptions. It can be used in many fields, such as design, advertising, and visual storytelling, and it helps users create compelling visual content within seconds.

How does Stable Diffusion Work?

Stable Diffusion generates images from textual descriptions through a process called diffusion, which allows it to produce high-quality, photorealistic images. The process starts from an image of random noise. The model then removes the noise over multiple steps until a coherent image emerges. At each step, the text guides the noise removal, so the generated image matches the textual description.
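
The step-by-step denoising loop can be sketched in a few lines of Python. This is a toy, not Stable Diffusion itself. The trained U-Net is replaced by a hypothetical `oracle_noise_predictor` that already knows the clean target, which stands in for "the image the prompt describes". The data is a short list of numbers instead of an image latent. The update rule is the deterministic DDIM step on a DDPM-style linear noise schedule, and both are assumptions chosen for illustration.

```python
import math
import random


def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear beta schedule (an assumption)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    alpha_bars, prod = [], 1.0
    for i in range(num_steps):
        prod *= 1.0 - (beta_start + i * step)
        alpha_bars.append(prod)
    return alpha_bars


def oracle_noise_predictor(x_t, a_bar, target):
    """Hypothetical stand-in for the trained U-Net: it already knows the clean target."""
    return [(x - math.sqrt(a_bar) * x0) / math.sqrt(1.0 - a_bar)
            for x, x0 in zip(x_t, target)]


def sample(target, num_inference_steps=50, seed=0):
    """Start from pure noise and remove it step by step (deterministic DDIM updates)."""
    rng = random.Random(seed)
    alpha_bars = linear_alpha_bars()
    stride = len(alpha_bars) // num_inference_steps
    timesteps = list(range(0, len(alpha_bars), stride))  # 0, 20, ..., 980
    x = [rng.gauss(0.0, 1.0) for _ in target]  # random noisy starting point
    for i in reversed(range(len(timesteps))):
        a_bar = alpha_bars[timesteps[i]]
        a_prev = alpha_bars[timesteps[i - 1]] if i > 0 else 1.0
        eps = oracle_noise_predictor(x, a_bar, target)
        # Estimate the clean sample, then re-noise it to the (lower) previous noise level.
        x0_hat = [(xi - math.sqrt(1.0 - a_bar) * e) / math.sqrt(a_bar)
                  for xi, e in zip(x, eps)]
        x = [math.sqrt(a_prev) * x0 + math.sqrt(1.0 - a_prev) * e
             for x0, e in zip(x0_hat, eps)]
    return x


print(sample([0.2, -0.7, 1.0]))  # ends (up to rounding) at the target values
```

With a real model, the predicted noise is only an estimate, so each step makes partial progress. The loop structure stays the same.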

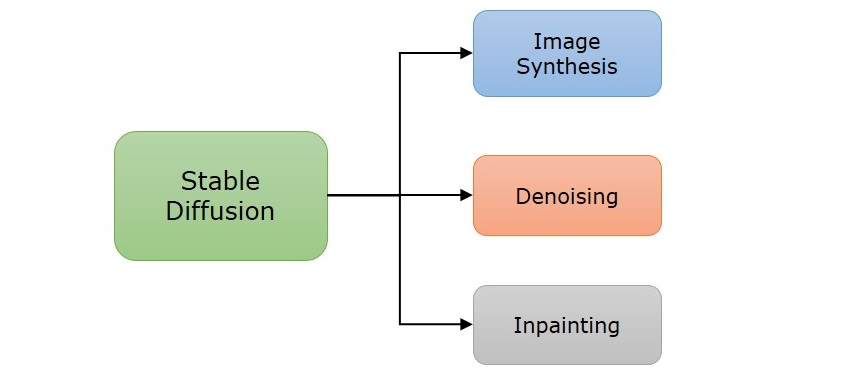

Stable Diffusion is a latent diffusion model. During training, Gaussian noise is added to a compressed (latent) representation of each image, and the network learns to remove it step by step. Working in this compressed space rather than on raw pixels keeps the process efficient. The model is particularly well suited for image synthesis, denoising, and inpainting because it can generate images with great detail and complexity.

How to Access Stable Diffusion?

Stable Diffusion can be accessed in several ways, depending on your requirements. Some common ways to access it are −

- Access Stable Diffusion Online − If you want to start immediately, you can use online tools like DreamStudio, which gives users access to the latest version of Stable Diffusion and generates images in seconds. Hugging Face also provides access to Stable Diffusion models, although generating images there can take longer.

- Install Stable Diffusion on Your Computer − Stable Diffusion can also run on your local computer. This lets you experiment with various text inputs, tailor them using different artistic styles, and even fine-tune the model to improve the results.

Use Cases of Stable Diffusion

Stable Diffusion has many practical applications across industries, including −

- Digital Media − The model can be used to generate sketches, concepts, and illustrations. Media companies can also cut content-generation costs for covers and design work.

- Product Design − Companies can use this tool to design their products and view them visually. Fashion designers and architects also use this model to present their ideas to clients.

- Marketing and Advertising − Ad agencies and companies can use Stable Diffusion to design promotions and posters for their product campaigns. AI-generated images cut expenses and provide a steady supply of on-brand content.

- Science and Medicine − Researchers can describe chemical compounds and molecules in prompts to visualize them and explore data patterns, which can help them discover new insights.

- Education − Teachers and instructors can use this tool to visualize concepts, which makes lessons more interactive and helps students understand them more easily.

Limitations of Stable Diffusion

Though Stable Diffusion displays exceptional image-generation capabilities, it also has a few limitations, like −

- Image Quality − Each version is trained at a particular resolution (for example, 512x512 for SD 1.5), and image quality can degrade when you generate images at resolutions far from it.

- Bias − The generated images sometimes show biases because the training data lacks diversity.

- Contextual Understanding − If the prompt is too complex, the model may recognize the individual words without truly understanding the context. This can lead to irrelevant images.

Future of Stable Diffusion

The future of Stable Diffusion and generative AI models looks promising. Stability AI's goal is to set a new standard for creativity in generative AI. The company keeps improving its models by acting on user feedback, expanding features, and enhancing performance.

Getting Started with Stable Diffusion

Getting started with Stable Diffusion is easy. You can use it online through the DreamStudio web interface or on Hugging Face, with no hardware setup.

You can also set it up locally, which is a good idea if you want more control over its functionality.

Access Stable Diffusion using DreamStudio

If you wish to start using Stable Diffusion immediately, you can use it online through DreamStudio. DreamStudio is a web interface built by Stability AI that gives access to its latest models for image generation and editing, video generation, and 3D modeling.

To get started, open the DreamStudio website. Once the console opens, click "Try DreamStudio for free" to start generating.

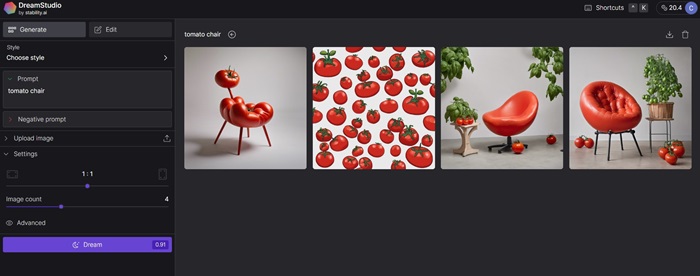

On the left of the screen, you will find a column where you enter the prompt and generate images. DreamStudio generates four images at once in about 15 seconds, so you can choose the one that most closely matches your prompt. Additionally, the model learns which types of images users prefer.

New users receive 100 free credits to try DreamStudio, which is enough to generate around 500 images. Additional credits can be purchased as needed.

Run Stable Diffusion Locally

At the time of writing, the latest model that users can run locally is Stable Diffusion XL 1.0. The steps below set up the AUTOMATIC1111 Stable Diffusion Web UI, a popular interface for running Stable Diffusion models, including SDXL, locally.

System Requirements

Before setting up Stable Diffusion locally, check that you have −

- A PC running Windows or Linux

- An NVIDIA graphics processing unit (GPU)

Setting up Stable Diffusion Locally

Setting up and running Stable Diffusion locally on your computer will let you explore and experiment with different inputs and will also allow you to fine-tune the model.

Step 1: Install Python and Git

To run Stable Diffusion, you need Python 3.10.6, which can be downloaded from the official Python website. Next, install Git, the version-control tool used to download the code repository.
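
Before continuing, you can check these prerequisites with a small script. This is a hypothetical helper and not part of the Web UI. It checks three things: whether the Python running it is version 3.10 (the guide asks for 3.10.6), whether `git` is on your PATH, and whether `nvidia-smi` is present. `nvidia-smi` is installed with NVIDIA drivers, so finding it is only a rough sign that an NVIDIA GPU is set up.

```python
import shutil
import sys


def check_environment():
    """Report whether the local-setup prerequisites appear to be present."""
    return {
        # The guide asks for Python 3.10.x (3.10.6 specifically).
        "python_3_10": sys.version_info[:2] == (3, 10),
        # Git must be on PATH to clone the Web UI repository.
        "git": shutil.which("git") is not None,
        # nvidia-smi ships with NVIDIA drivers; finding it is only a heuristic for a usable GPU.
        "nvidia_smi": shutil.which("nvidia-smi") is not None,
    }


if __name__ == "__main__":
    for name, found in check_environment().items():
        print(f"{name}: {'OK' if found else 'missing'}")
```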

Step 2: Create A Hugging Face and GitHub Account

These accounts help you download the Stable Diffusion Web UI from GitHub and the model files from Hugging Face.

Step 3: Clone Stable Diffusion Web UI

Open Git Bash and use the cd command to navigate to the folder where you want to place the clone of Stable Diffusion Web UI. Run the following command to clone the repository −

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui

If the command is executed successfully, you will find a folder named stable-diffusion-webui in your chosen folder.

Step 4: Download the Latest Model of Stable Diffusion

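You can download the latest model from Hugging Face. Place the downloaded model (checkpoint) file in the models/Stable-diffusion folder inside stable-diffusion-webui.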

Step 5: Setup

In Command Prompt, use cd to navigate to the folder you cloned earlier. Once you are in the folder, run the following command (this is the Windows launcher; on Linux, run ./webui.sh instead) −

webui-user.bat

Step 6: Run Stable Diffusion Locally

The first run downloads and installs all the required dependencies. When it finishes, a local URL (by default http://127.0.0.1:7860) appears in the prompt. Copy and paste it into your web browser to access Stable Diffusion.

Stable Diffusion - Architecture

Large text-to-image models have achieved remarkable success, enabling high-quality synthesis of images from text prompts. Stable Diffusion is one of them. It is based on a type of diffusion model called the Latent Diffusion Model, created by CompVis (LMU Munich) and RunwayML.

The latent diffusion model reduces memory use and computation time by applying the diffusion process in a lower-dimensional latent space rather than in the high-dimensional image (pixel) space.
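
To see how much smaller the latent space is, here is the arithmetic for a 512x512 RGB image. It assumes the VAE settings used by Stable Diffusion 1.x: a spatial downsampling factor of 8 and 4 latent channels.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Shape (channels, h, w) of the VAE latent for an image of the given size."""
    if height % downsample or width % downsample:
        raise ValueError("image sides must be multiples of the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)


def num_values(shape):
    """Total number of numbers stored in a tensor of this shape."""
    total = 1
    for dim in shape:
        total *= dim
    return total


image = (3, 512, 512)            # RGB image in pixel space
latent = latent_shape(512, 512)  # (4, 64, 64)
print(latent, num_values(image) // num_values(latent))  # (4, 64, 64) 48
```

The diffusion process therefore works on 48 times fewer values than the raw image, which is where the memory and speed savings come from.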

The three main components of Stable Diffusion are −

- Variational Autoencoder (VAE)

- U-Net

- Text-Encoder

Variational Autoencoder (VAE)

The Variational Autoencoder (VAE) has two parts: an encoder and a decoder. During training, the encoder converts an image into a low-dimensional latent representation for the forward diffusion process, i.e., the process of turning an image into noise. These compact encoded versions are called latents. Noise is added to them step by step, and the noisy latents are fed as input to the U-Net model.

The VAE decoder transforms a low-dimensional latent representation back into an image. During generation, the denoised latents produced by the reverse diffusion process (the process of turning noise into an image) are passed through the decoder to obtain the final image.

U-Net

U-Net is a convolutional neural network. In Stable Diffusion, its input is the noisy latents, and its output is a prediction of the noise contained in those latents. Removing this predicted noise step by step recovers clean latents from the noisy ones.
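
The U-Net's training objective can be sketched as follows. Take a clean latent, add noise for a randomly chosen step using the closed-form forward process, ask the network to predict that noise, and measure the mean squared error. This simplified sketch rests on several assumptions. Plain Python lists stand in for latent tensors. The linear noise schedule is the one from the original DDPM paper rather than Stable Diffusion's exact schedule. `zero_predictor` is a hypothetical placeholder for the U-Net, which in Stable Diffusion also receives the text embeddings.

```python
import math
import random


def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t); a DDPM-style linear schedule, assumed here."""
    step = (beta_end - beta_start) / (num_steps - 1)
    alpha_bars, prod = [], 1.0
    for i in range(num_steps):
        prod *= 1.0 - (beta_start + i * step)
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(latent, noise, a_bar):
    """Forward diffusion in closed form: x_t = sqrt(a_bar) * x_0 + sqrt(1 - a_bar) * noise."""
    return [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * n
            for x, n in zip(latent, noise)]


def noise_prediction_loss(latent, predict_noise, alpha_bars, rng):
    """One training example: noise a latent at a random step, then score the noise prediction."""
    t = rng.randrange(len(alpha_bars))
    noise = [rng.gauss(0.0, 1.0) for _ in latent]
    noisy = add_noise(latent, noise, alpha_bars[t])
    predicted = predict_noise(noisy, t)  # the real U-Net also receives text embeddings
    return sum((p - n) ** 2 for p, n in zip(predicted, noise)) / len(noise)


def zero_predictor(noisy, t):
    """Hypothetical untrained 'model' that always predicts zero noise."""
    return [0.0] * len(noisy)


alpha_bars = linear_alpha_bars()
rng = random.Random(0)
print(noise_prediction_loss([0.5, -0.3, 0.8], zero_predictor, alpha_bars, rng))
```

Training repeats this over many latents and steps, adjusting the network's weights so that the loss goes down.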

The U-Net in this model consists of an encoder with 12 blocks, a middle block, and a decoder with 12 blocks. Of these 25 blocks, 8 are down-sampling or up-sampling convolution layers. The remaining 17 are main blocks, each consisting of 4 ResNet layers and two Vision Transformers (ViTs).

Text Encoder

A text encoder is a transformer-based model that transforms a sequence of input tokens into a sequence of latent text embeddings. Stable Diffusion 1.x uses the pre-trained CLIP text encoder (later versions use OpenCLIP encoders), which generates embeddings that correspond to the given input text. These embeddings are fed to the U-Net, where they guide the denoising of noisy latents, both during training and when generating images.
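
The text conditioning can be sketched by its shapes. Prompts are tokenized and then padded or cut to a fixed length of 77 tokens. Each token becomes one embedding vector, and the U-Net attends to this sequence. The token IDs and `pad_id` below are hypothetical; a real CLIP tokenizer also adds start and end tokens. The embedding widths are the values used by each model version's text encoder(s).

```python
CLIP_CONTEXT_LENGTH = 77  # CLIP text encoders process a fixed sequence of 77 tokens

# Width of each token embedding passed to the denoiser, per version.
EMBEDDING_WIDTH = {
    "SD 1.5": 768,     # CLIP ViT-L/14
    "SD 2.x": 1024,    # OpenCLIP ViT-H/14
    "SDXL 1.0": 2048,  # CLIP ViT-L (768) and OpenCLIP ViT-bigG (1280), concatenated
}


def pad_or_truncate(token_ids, pad_id, length=CLIP_CONTEXT_LENGTH):
    """Force a tokenized prompt to exactly `length` tokens; extra tokens are dropped."""
    ids = list(token_ids[:length])
    return ids + [pad_id] * (length - len(ids))


def conditioning_shape(version):
    """Shape of the text-embedding sequence the denoiser attends to."""
    return (CLIP_CONTEXT_LENGTH, EMBEDDING_WIDTH[version])


prompt_ids = [101, 2023, 2003, 1037, 3899]  # hypothetical token IDs for a short prompt
print(len(pad_or_truncate(prompt_ids, pad_id=0)))  # 77
print(conditioning_shape("SDXL 1.0"))              # (77, 2048)
```

Because of the fixed length, very long prompts are cut off unless the interface you use splits them into chunks.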

Stable Diffusion - Model Versions

Stable Diffusion has improved significantly since its release, with each version building on lessons from the previous one. This chapter compares the functionality of the different Stable Diffusion versions.

Stable Diffusion 1.x

The first generation of Stable Diffusion models, known as the 1.x series, includes versions 1.1, 1.2, 1.3, 1.4, and 1.5. They can generate a wide range of styles and require relatively modest computational power and resources.

Stable Diffusion 2.x

The 2.x series includes 2.0 and 2.1. This series has been developed to create high-resolution images, along with the ability to interpret expressive and complex prompts.

Stable Diffusion XL 1.0

Stable Diffusion XL 1.0 is the most widely used open-source version. It creates high-resolution images with improved color grading and composition, and it can understand complex prompts and concepts.

Stable Diffusion XL Turbo (SDXL Turbo) is an extension of SDXL 1.0 developed for rapid image generation in a single step.

Stable Diffusion 3

Stable Diffusion 3 is the latest version, announced by Stability AI in February 2024. It improves on prompt interpretation, image quality and resolution, and spelling abilities. At the time of writing, the model is still in preview and not yet available to the public.

Comparing Stable Diffusion Models

The following table summarizes the features and improvements across the versions of Stable Diffusion −

| Features | SD 1.5 | SD 2.0 | SD 2.1 | SD XL 1.0 |
|---|---|---|---|---|
| Release Date | October 2022 | November 2022 | December 2022 | July 2023 |
| Resolution | 512x512 | 768x768 | 768x768 | 1024x1024 |
| Prompt Technology (text encoder) | OpenAI's CLIP ViT-L/14 | LAION's OpenCLIP-ViT/H | LAION's OpenCLIP-ViT/H | OpenCLIP-ViT/G and CLIP-ViT/L |
| Strengths | Beginner friendly; better performance on landscape and architectural subjects | Improved handling and interpretation of complex prompts; better image resolution | Improved conceptual understanding; better color grading and image quality | Better portraits; high resolution and image quality; works well with shorter prompts |
| Limitations | Poor prompt interpretation | More restrictive in generations; NSFW filtering | More "censored," especially when generating celebrities and art styles | Requires more computational resources to run locally |

Stable Diffusion XL

Stable Diffusion XL 1.0 is a significant advancement in the evolution of text-to-image generation models. It is Stability AI's flagship model, which the company presented as its best image generation model, and it succeeded the limited, research-only release of SDXL 0.9. This chapter explores the features, ways to access, and limitations of Stable Diffusion XL (SDXL) 1.0.

Features of Stable Diffusion XL

According to Stability AI, when it tested SDXL 1.0 against various other models, people preferred SDXL 1.0 over the other versions. Some key features that this version offers are −

- Contextual Understanding − One of the significant improvements is the ability of the model to understand and interpret complex prompts.

- Legible Text − The model also focuses on generating accurate, legible text within images.

- Better Portraits − Previous models struggled with human portraits and anatomy. This model partly fixes the issue by generating better-quality results.

- Artistic Styles − Stable Diffusion XL offers various artistic styles for image generation, such as anime, digital art, cinematic, 3D Model, etc.

- Prompts − You no longer need lengthy prompts to get the desired results; SDXL understands short prompts much better than previous models.

- Open-source and Color Composition − The reason why SDXL is the most used model among all the versions of Stable Diffusion is that it is open-source and also designed to generate high quality images along with better color grading and composition.

How to Access Stable Diffusion XL?

There are several ways to get your hands on the SDXL model. The three main ways to access and use Stable Diffusion XL are −

Accessing Stable Diffusion XL 1.0 Online

Clipdrop is one of the easiest ways to access Stable Diffusion XL for free. Once you navigate to their official website, you can type your prompt or choose from pre-written examples and generate an image.

Accessing Stable Diffusion XL 1.0 using Discord

Another easy way to generate images is through Discord. On the server, visit one of the #bot-1 to #bot-10 channels and enter your prompt with the command /dream prompt: *enter prompt here*. The bot then generates two images, which lets you choose the better one and also helps train the model.

Accessing Stable Diffusion XL 1.0 using Hugging Face

The SDXL 1.0 base model is available for download on Hugging Face.

Stable Diffusion XL Turbo

The next enhanced version of SDXL is Stable Diffusion XL Turbo. It was developed with a new distillation technique called Adversarial Diffusion Distillation (ADD), which allows the model to synthesize images in a single step.

You can also access this model by downloading the model weights and code on Hugging Face or by visiting Clipdrop which is Stability AI's image editing platform.

Limitations of Stable Diffusion XL

The model has some limitations such as −

- It cannot generate perfect photorealism.

- It struggles with complex prompts.

- It also has difficulties in generating portraits and people.

- It is not very accurate in generating legible text, but better than the previous models.

- There might be a loss of information during the encoding process since the auto-encoding part of the model is lossy.

Stable Diffusion 3 - Latest Model

Stable Diffusion 3 is Stability AI's most capable text-to-image model, with greatly improved prompt understanding, image quality, and spelling abilities. Though the model is not yet available to the public, this chapter discusses its significant improvements over the previous models.

What is Stable Diffusion 3?

Stable Diffusion 3 is the latest version, previewed by Stability AI in February 2024, and it extends the existing Stable Diffusion models. It brings many improvements, notably in handling multi-subject prompts, image quality, and spelling abilities.

How to Access Stable Diffusion 3?

At the time of writing, the model is not available to the public. Stability AI has opened a waitlist for an early preview. This preview phase was started to gather insights on performance and safety before the public release.

How does Stable Diffusion 3 Model Work?

This latest version offers users a variety of options for scalability and quality to meet their creative needs. Stable Diffusion 3 combines a diffusion transformer architecture with flow matching, both briefly described below −

Diffusion Transformer Architecture

Stable Diffusion 3 is a diffusion model, which refines noise into a clear image over multiple steps. Earlier versions use a U-Net as the denoising network, but Stable Diffusion 3 uses a transformer instead. The noisy image latent is split into patches that are processed as a sequence of tokens, and attention layers let these image tokens exchange information with the embeddings of the text prompt.
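
In a diffusion transformer, the image latent must first be turned into a sequence of tokens. Below is a minimal sketch of that patching step. It assumes 2x2 patches (the patch size here is an illustrative assumption) and uses nested Python lists in place of a tensor. A real model would then project each token with a learned linear layer, add positional information, and process the image tokens together with the text tokens.

```python
def patchify(latent, patch_size=2):
    """Split a latent of shape [channels][height][width] into a sequence of patch tokens."""
    channels = len(latent)
    height, width = len(latent[0]), len(latent[0][0])
    if height % patch_size or width % patch_size:
        raise ValueError("height and width must be divisible by patch_size")
    tokens = []
    for top in range(0, height, patch_size):          # patches are ordered row by row
        for left in range(0, width, patch_size):
            token = []
            for c in range(channels):                 # every channel of the patch goes into one token
                for dy in range(patch_size):
                    for dx in range(patch_size):
                        token.append(latent[c][top + dy][left + dx])
            tokens.append(token)
    return tokens


latent = [[[row * 4 + col for col in range(4)] for row in range(4)]]  # 1 channel, 4x4
print(patchify(latent))  # [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]
```

A latent with C channels and H x W cells becomes (H / p) x (W / p) tokens, each holding C x p x p values.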

Flow Matching

This technique helps create better images by improving how the model transforms noise into detailed pictures. Instead of learning to reverse a step-by-step noising process, the model learns a velocity field that moves a sample along a path from noise to a realistic image. This teaches it to reproduce the patterns found in real images.
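
Stable Diffusion 3 uses a flow-matching variant called rectified flow. In rectified flow, training samples lie on a straight line between an image (t = 0) and noise (t = 1), and the network learns the velocity along that line. The sketch below is illustrative. `oracle_velocity` is a hypothetical stand-in for the trained transformer, built from a known image and noise pair, and generation uses simple Euler steps from t = 1 down to t = 0.

```python
def interpolate(x0, noise, t):
    """Straight-line path: t = 0 gives the data sample, t = 1 gives pure noise."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, noise)]


def target_velocity(x0, noise):
    """Training target for the velocity dx_t/dt along the straight path."""
    return [b - a for a, b in zip(x0, noise)]


def euler_sample(noise, velocity_fn, num_steps=20):
    """Integrate dx/dt = v(x, t) from t = 1 (noise) down to t = 0 (image); num_steps is arbitrary."""
    x = list(noise)
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = 1.0 - i * dt
        v = velocity_fn(x, t)
        x = [xi - dt * vi for xi, vi in zip(x, v)]  # step backwards in t, toward the image
    return x


x0, noise = [0.5, -1.0], [1.0, 2.0]


def oracle_velocity(x, t):
    """Hypothetical model: on the straight path, (x_t - x0) / t equals noise - x0."""
    return [(xi - ai) / t for xi, ai in zip(x, x0)]


print(interpolate(x0, noise, 0.5))                        # [0.75, 0.5]
print(euler_sample(noise, oracle_velocity, num_steps=20))  # close to [0.5, -1.0]
```

Straight paths are easy to follow with few integration steps, which is one motivation for this formulation.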

Benefits of Stable Diffusion 3

Some of the key benefits that Stable Diffusion 3 offers are −

- Better Image Quality − Stable Diffusion 3 offers enhanced image quality for both realistic and surreal images.

- Contextual Understanding − The model also enhanced its understanding and interpretation skills, which helps in the accurate analysis of the prompt.

- Hardware Compatibility and Accessibility − The model is offered in a range of sizes, so it can run on a wide range of hardware setups.

Stable Diffusion Pricing

Stable Diffusion 3 is free for personal and non-commercial use. For commercial use, you have to purchase a license, whose price depends on the specific use case. To learn more about the membership, visit Stability AI's website.

Stable Assistant

Stable Assistant is a chatbot developed by Stability AI, which allows the users to access the latest text and image generating technologies featuring Stable Diffusion, Stable Video, Stable Audio, Stable Image Services and Stable 3D.

Whether you want to generate high-quality audio, transform images into videos, or generate detailed images, Stable Assistant brings these tools together in one place to help you bring your vision to life.

What is Stable Assistant?

Stable Assistant is a conversational chatbot created by Stability AI as an interface for users to access its models, like Stable Diffusion XL, Stable Video Diffusion, Stable Audio, and Stable 3D.

This assistant excels at generating images from conversational prompts, which helps users complement their writing projects with matching images.

Features of Stable Assistant

Some of the key functionalities offered by Stable Assistant are −

- Image Generation − The chatbot offers image generation tools powered by Stable Image Ultra. The model excels at creating photorealistic images and high-quality creative output.

- Audio Generation − Using Stable Audio 2.0, the chatbot can generate songs and audio up to three minutes long with structured compositions (intro, development, and outro), as well as stereo sound effects.

- Video Generation − The chatbot can generate short clips using Stable Video Diffusion.

- 3D Generation − Powered by Stable Fast 3D, the chatbot can generate high-quality 3D assets from a single image. These assets can be used for product prototyping, gaming, and virtual reality, and in fields like architecture and design.

Getting Started with Stable Assistant

You can ask Stable Assistant to generate text, images, videos, audio, and 3D assets by providing textual prompts. Once you have typed the prompt, press "enter." You can use the "settings" panel to select the image aspect ratio. Additionally, you can provide images instead of text to edit them or turn them into videos or 3D assets.

Inside the Toolbox

You can use the toolbox icon to edit and regenerate images. Some of its options include −

- Select and Replace − You can ask the Stable Assistant to replace a portion of the image that is selected.

- Erase − Select portions in an image to remove them.

- Inpaint − Draw on a portion of the image and type a prompt, based on which the selected area is replaced.

- Image to 3D − Stable Assistant allows you to convert 2D images into 3D assets.

- New Image with Same Style − Generate a new image in the same style as the input image.

- New Image with Same Structure − Generate a new image from the prompt with minimal changes, maintaining the structure of the input image.

- Zoom Out − You can extend the dimensions of an image without losing clarity and quality.

- Remove Background − The background of the input image can be removed.

- Enhance and Upscale − You can input low-quality images and use Stable Assistant to upscale them to 4K resolution.

- Sketch to Image − Upload a sketch and a text prompt to generate a detailed image.

Pricing for Stable Assistant

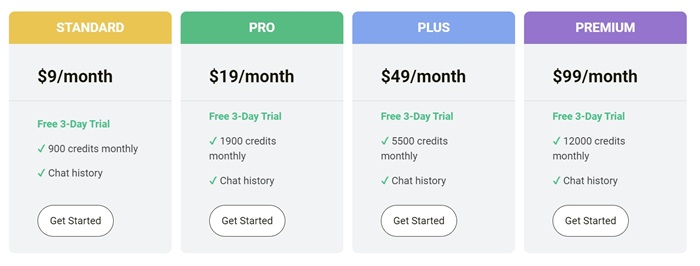

Stable Assistant offers four plans; choose the one that fits your requirements. Your plan is billed automatically once the free trial ends.

Credits are deducted for each use. For example, generating an image costs 6.5 credits, and each message sent to the assistant costs 0.1 credits. You can cancel your subscription or change your plan at any time.
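
With per-use rates like these, it is easy to estimate how far a credit balance goes. The small sketch below uses only the two rates quoted here: 6.5 credits per image and 0.1 credits per message. Plan sizes are not stated here, so the 100-credit balance in the example is hypothetical.

```python
CREDITS_PER_IMAGE = 6.5    # rate quoted for generating one image
CREDITS_PER_MESSAGE = 0.1  # rate quoted for one message to the assistant


def credits_needed(images, messages):
    """Credits deducted for a session with the given number of images and messages."""
    return images * CREDITS_PER_IMAGE + messages * CREDITS_PER_MESSAGE


def images_affordable(credits, messages=0):
    """How many whole images fit in a credit balance after paying for `messages` messages."""
    remaining = credits - messages * CREDITS_PER_MESSAGE
    return max(0, int(remaining // CREDITS_PER_IMAGE))


print(credits_needed(images=10, messages=20))  # 67.0
print(images_affordable(100, messages=30))     # 14
```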

Stable Fast 3D

What is Stable Fast 3D?

Stable Fast 3D is a model developed by Stability AI to make 3D modeling workflows faster and more efficient. It uses machine learning to produce high-quality 3D assets from a single image, quickly and with great precision, and its output can be integrated into existing 3D software workflows.

Features of Stable Fast 3D

The Stable Fast 3D model enhances 3D modeling workflows by letting users produce high-quality 3D assets faster and with great precision. Some factors that make it a strong option for 3D modeling are −

- Enhanced Speed and efficiency

- Advanced error-checking and resource management

- Improved quality and precision

- Open-source and user-friendly interface

How to Access Stable Fast 3D?

Stable Fast 3D is available on Hugging Face, in the Stable Assistant chatbot, and through the Stability AI API. It is released under the Stability AI Community License.

Additionally, the code is available on GitHub, and model weights and demo space on Hugging Face. Stability AI allows free non-commercial use and a paid subscription for commercial use.

How does Stable Fast 3D Work?

Stable Fast 3D is based on mesh reconstruction with UV unwrapping and illumination disentanglement. It was designed to address several problems of earlier image-to-3D models −

- Baked-in illumination, which makes the assets difficult to relight.

- Reliance on vertex colors because UV unwrapping was slow; vertex colors do not produce sharp textures.

- Shading artifacts created by marching cubes, which Stable Fast 3D replaces with a different mesh extraction step.

- Difficulty predicting material parameters.

Stable Fast 3D builds on the Large Reconstruction Model (LRM). First, the input image is passed through a DINOv2 encoder to obtain image tokens. A large transformer conditioned on these image tokens then predicts a triplane volumetric representation. Predicted vertex offsets are added to produce a smoother, more accurate mesh. Stable Fast 3D also replaces the original differentiable volumetric rendering with a mesh representation and mesh rendering.
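
A triplane stores three 2D grids of feature vectors, aligned with the xy, xz, and yz planes. To read the feature of a 3D point, you project the point onto each plane and combine the three lookups. This simplified sketch uses nearest-neighbour lookup and sums the three features. Real reconstruction models typically interpolate bilinearly and decode the combined features with a small neural network, and the plane names and resolution here are illustrative assumptions.

```python
def sample_plane(plane, u, v):
    """Nearest-neighbour lookup in a res x res grid; u picks the column, v the row, both in [-1, 1]."""
    res = len(plane)
    col = round((u + 1.0) / 2.0 * (res - 1))
    row = round((v + 1.0) / 2.0 * (res - 1))
    return plane[row][col]


def query_triplane(planes, point):
    """Project a 3D point onto the xy, xz and yz planes and sum the three feature vectors."""
    x, y, z = point
    features = [
        sample_plane(planes["xy"], x, y),
        sample_plane(planes["xz"], x, z),
        sample_plane(planes["yz"], y, z),
    ]
    return [sum(values) for values in zip(*features)]


def constant_plane(res, feature):
    """A res x res grid where every cell holds its own copy of the same feature vector."""
    return [[list(feature) for _ in range(res)] for _ in range(res)]


planes = {
    "xy": constant_plane(4, [1.0, 0.0]),
    "xz": constant_plane(4, [0.0, 2.0]),
    "yz": constant_plane(4, [3.0, 3.0]),
}
print(query_triplane(planes, (0.1, -0.5, 0.9)))  # [4.0, 5.0]
```

Three 2D grids are far cheaper to store and predict than a full 3D grid, which is why transformers in LRM-style models output triplanes.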

Applications of Stable Fast 3D

Some main applications of Stable Fast 3D are −

- Gaming − Game designers and developers can use this model to create intricate worlds and characters for more engaging experiences.

- Film and Animation − Filmmakers can use the model to produce visual effects and animations that enhance storytelling.

- Architecture − The model can be used for architectural visualization, presenting visual models to clients and making decisions easier.

- Education and Training − Tutors and instructors can use the model to develop interactive visuals that enhance students' learning experience.

Stable Diffusion Vs. Other Models

New tools and models appear in the generative AI field every day, which makes it hard to distinguish among them and choose the right one. This chapter compares different image-generating tools based on various capabilities.

AI Image-Generating Models

Before comparing image-generating tools, let's understand the main types of machine learning models they use and how they work.

Diffusion Models

Diffusion models are trained on datasets of image-caption pairs. After training, the model can interpret the text prompt provided by the user. It starts from random noise and removes it gradually over many steps, producing a high-resolution image with the attributes described in the prompt.

The Latent Diffusion Model improves on this by running the diffusion process in a latent space. It has three parts. An encoder compresses images into a smaller representation called the latent space. A diffusion process adds noise to these latents and learns to remove it under the guidance of the encoded prompt. A decoder reconstructs the image from the denoised latents.

Generative Adversarial Networks (GANs)

In this approach, two neural networks are pitted against each other. One network, the Generator, creates images. The other, the Discriminator, determines whether a given image is real or fake (generated).
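
The two loss functions the networks minimize capture this adversarial setup. In this minimal sketch, the inputs are the Discriminator's estimated probabilities that images are real. They are hypothetical numbers, not outputs of a real network. The Generator uses the common "non-saturating" form of its loss.

```python
import math


def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy: reward D(real) near 1 and D(fake) near 0."""
    real_term = sum(-math.log(p) for p in real_scores) / len(real_scores)
    fake_term = sum(-math.log(1.0 - p) for p in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Non-saturating generator loss: reward D(fake) near 1, i.e. fooling the discriminator."""
    return sum(-math.log(p) for p in fake_scores) / len(fake_scores)


# A confident, correct discriminator has a low loss, while the generator's loss is high.
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]))
print(generator_loss([0.1, 0.05]))
```

Training alternates between lowering the Discriminator's loss and lowering the Generator's loss, so each network keeps improving against the other.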

Transformer Models

The Transformer architecture was introduced by researchers at Google to improve natural language processing, and it has since been used for speech recognition and text autocompletion. In text-to-image tools, transformers understand and interpret the meaning of the prompt so that it can be turned into a visual representation.
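
At the core of the Transformer is scaled dot-product attention. Each query scores every key, a softmax turns the scores into weights, and the output is the weighted average of the values. Stable Diffusion's U-Net uses the same operation as cross-attention, with queries coming from the image latents and keys and values coming from the text embeddings. Here is a plain-Python sketch for illustration only, with no learned projection matrices and no multiple heads:

```python
import math


def softmax(scores):
    """Numerically stable softmax."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs


# Two keys that match the query equally well: the output averages their values.
print(attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[2.0, 0.0], [0.0, 4.0]]))  # [[1.0, 2.0]]
```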

AI Image-Generating Tools

There are many text-to-image tools available in the market. These tools use one or more of the image-generating machine learning models discussed above.

Let's have a look at some popular text-to-image generating tools −

DALL-E

DALL-E is a text-to-image model developed by OpenAI that generates images from natural-language prompts. The latest model, DALL-E 3, was released in October 2023 and can be accessed through ChatGPT.

Midjourney

Midjourney is a generative AI tool that generates images from natural-language descriptions. It takes prompts in a similar way to OpenAI's DALL-E and Stability AI's Stable Diffusion.

Adobe Firefly

Adobe Firefly is a family of generative AI models that power features in Adobe Photoshop.

Stable Diffusion vs. DALL-E vs. Adobe Firefly vs. Midjourney

The table below compares Stable Diffusion with other text-to-image generating tools based on a few features −

| Features | Stable Diffusion | DALL-E | Adobe Firefly | Midjourney |
|---|---|---|---|---|
| Developer | Stability AI | OpenAI | Adobe | Midjourney |
| Release Date | August 2022 | January 2021 | 2023 | July 2022 |
| Model Type | Latent Diffusion Model | Transformer-based model | Autoencoder and GANs | Diffusion model |
| Access Options | DreamStudio, Hugging Face, locally, Google Colab, and API | ChatGPT interface and API | Adobe apps (Photoshop, InDesign), Firefly web app, and API | Bot on Discord |
| Output Resolution | Default 512x512, varying by model version | 1024x1024, 1024x1792, and 1792x1024 | Up to 2000x2000 | 1024x1024 |
| Pricing | Free for personal and non-commercial use; a license is required for commercial use | Available through ChatGPT and a pay-per-use API; not open-source | Free with 25 generative credits per month | Subscription-based |
| Strengths | Flexibility, customization, and open-source availability | Creative, high-quality images | Integration with Adobe tools makes it easy to access; high image quality | Features and artistic styles |