
PyTorch Lightning - Introduction

PyTorch Lightning, an open-source library built on top of PyTorch, is designed to simplify the process of creating and training machine learning models. It removes much of the boilerplate code needed to run a training loop and handles logging and device placement, so that you can concentrate on the core aspects of your models and experiments.

PyTorch was initially introduced in 2017 by Facebook's AI Research lab (FAIR). As the field of data science advanced, a high-level framework called PyTorch Lightning was introduced in 2019 on top of it.

PyTorch Lightning is designed especially for researchers who want to take their existing models and scale them to multiple GPUs, or even multiple nodes, with minimal changes to their code.

PyTorch itself offers low-level control over how deep learning models are built and trained, while PyTorch Lightning organizes that code into a standard structure. For instance, PyTorch Lightning allows users to define models, optimizers and data loaders as separate components.

PyTorch Lightning offers a high-level API with various features for training neural networks, including GPU acceleration, batch processing, and automatic optimization. It also works with components such as built-in metrics, optimizers, and learning-rate schedulers.

Overview of PyTorch Lightning

PyTorch Lightning is a more structured way of writing PyTorch code, which makes it less cluttered and easier to read. It separates the engineering part of training (loops, hardware handling and logging) from the machine learning logic, so that experiments are easier to test and reproduce.

Lightning runs the training, validation and testing loops for you and integrates smoothly with other tools and libraries.

With PyTorch Lightning, data loading, logging, checkpointing, and distributed training can be set up with just a few lines of code.

Historical Background and Evolution

PyTorch Lightning was created to address the growing complexity in managing PyTorch training scripts and the need for more standardized and scalable training workflows.

- 2019: PyTorch Lightning was introduced by William Falcon as a way to simplify training loops in PyTorch.

- 2020: It gained popularity in the research community for its ease of use and powerful features.

- 2021: PyTorch Lightning became a widely adopted tool in both academia and industry, with continuous updates and enhancements driven by a growing community.

Components of PyTorch Lightning

Here is a detailed breakdown of each component −

Metrics: These are used to evaluate model performance during the training and testing phases. The most common classification metrics are −

- Accuracy: This refers to the proportion of all predictions, positive and negative, that are correct.

- Precision: This refers to the proportion of correctly predicted positive values out of all the positive predictions.

- Recall: This refers to the proportion of actual positive instances that are correctly identified.

- F1 Score: This is defined as the harmonic mean of recall and precision.
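
The four definitions can be made concrete with a small, framework-free sketch for binary labels, where 1 marks the positive class. The function name `binary_metrics` is hypothetical; in Lightning projects these metrics are often computed with a library such as TorchMetrics instead, which this sketch does not use.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    accuracy = (tp + tn) / len(pairs)               # correct / all predictions
    precision = tp / (tp + fp) if tp + fp else 0.0  # correct / predicted positive
    recall = tp / (tp + fn) if tp + fn else 0.0     # correct / actually positive
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


if __name__ == "__main__":
    print(binary_metrics([1, 1, 1, 1, 0, 0], [1, 1, 1, 0, 1, 1]))
```

For the six labels in the usage line there are 3 true positives, 1 false negative, 2 false positives and no true negatives, so accuracy is 3/6 = 0.5, precision is 3/5 = 0.6, recall is 3/4 = 0.75, and F1 is 2(0.6)(0.75)/(0.6 + 0.75) ≈ 0.667. This shows why accuracy and precision must not be confused: they differ even on this tiny example.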

Application of PyTorch Lightning

Following are some applications that use PyTorch Lightning −

- Audio Processing: This application includes speech recognition, music generation and audio classification.

- Reinforcement Learning: This is an ML paradigm in which an agent learns to choose actions by interacting with an environment and maximizing the rewards it receives.

- GANs: GAN stands for Generative Adversarial Network, a type of model that can generate realistic images, videos and 3D models.

- NLP: NLP stands for Natural Language Processing, which covers tasks such as text classification and sentiment analysis. For example, GPT-style language models can be trained with PyTorch Lightning.

- Computer Vision: In this application, PyTorch Lightning is used for tasks such as image classification, image generation, and object detection.

Advantages of using PyTorch Lightning

Following are the advantages of PyTorch Lightning −

- It simplifies ML projects, making them easier to maintain and manage.

- PyTorch Lightning eliminates around 80% of repetitive code, letting users focus on delivering value rather than on engineering tasks.

- Users can easily experiment with multiple models, hyper-parameters, and techniques.

- It allows users to scale their models to larger datasets.

- It scales the training process across multiple GPUs and machines.

- This framework includes automatic mixed precision, which can significantly speed up the training process.

Supported Features and Integrations

PyTorch Lightning supports various features and integrations, making it a comprehensive tool for modern machine learning workflows −

- Multi-GPU and Multi-Node Training: Easily scale your models across multiple GPUs and nodes.

- TPU Support: Train your models on TPUs for faster computation.

- Integration with Logging Tools: Use TensorBoard, Comet, WandB, and other tools for detailed experiment tracking.

- Distributed Training: Lightning provides seamless support for distributed training, allowing you to train large models efficiently.

- Rich Callbacks: Use and customize callbacks for early stopping, checkpointing, and more.

Conclusion

PyTorch Lightning stands out as a solution that makes developing and training machine learning models easier by isolating engineering concerns from the modeling code.

Its ease of use, extensibility and flexibility make it suitable for both researchers and developers.

Whether you are working on cutting-edge research or deploying production-level systems, PyTorch Lightning can help you streamline your workflow and focus on what matters most: your model and its assumptions.

PyTorch Lightning - Environment Setup

How to Install PyTorch Lightning

PyTorch Lightning simplifies the deep learning workflow, making it easier to build and train models. By following the installation steps for your platform or tool, you can quickly set up PyTorch Lightning and start using it in your machine learning projects.

Below are the instructions for installing PyTorch Lightning on some popular platforms and tools.

Google Colab

Google Colab is a free, web-based platform that provides GPU acceleration, ideal for running PyTorch Lightning.

Following are the basic steps to install PyTorch Lightning on Google Colab −

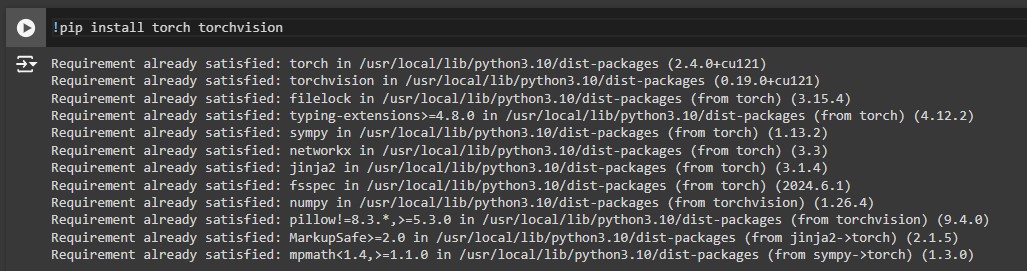

Step 1: Install PyTorch

Run the following command −

!pip install torch torchvision

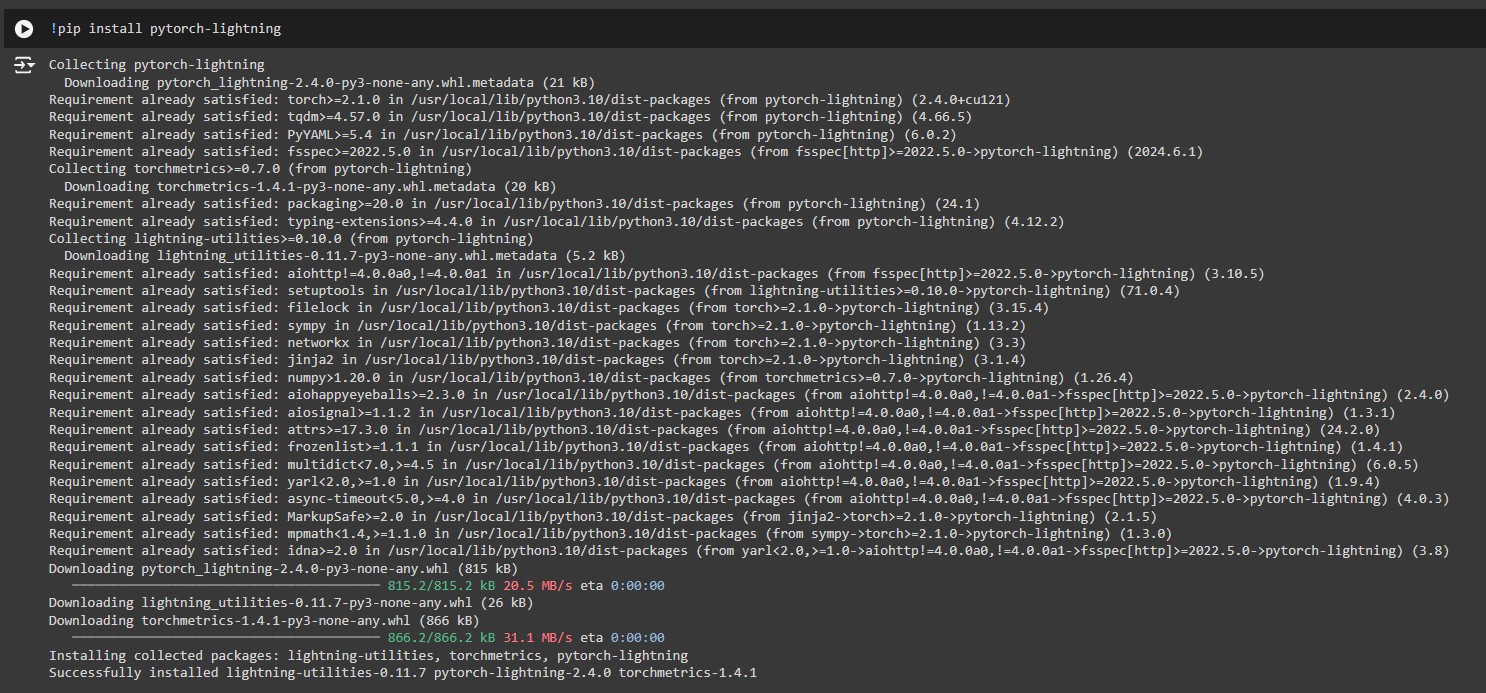

Step 2: Install PyTorch Lightning

Run the following command −

!pip install pytorch-lightning

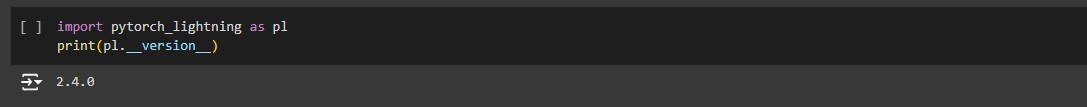

Step 3: Verify the installation

Import PyTorch Lightning and print the version.

import pytorch_lightning as pl
print(pl.__version__)

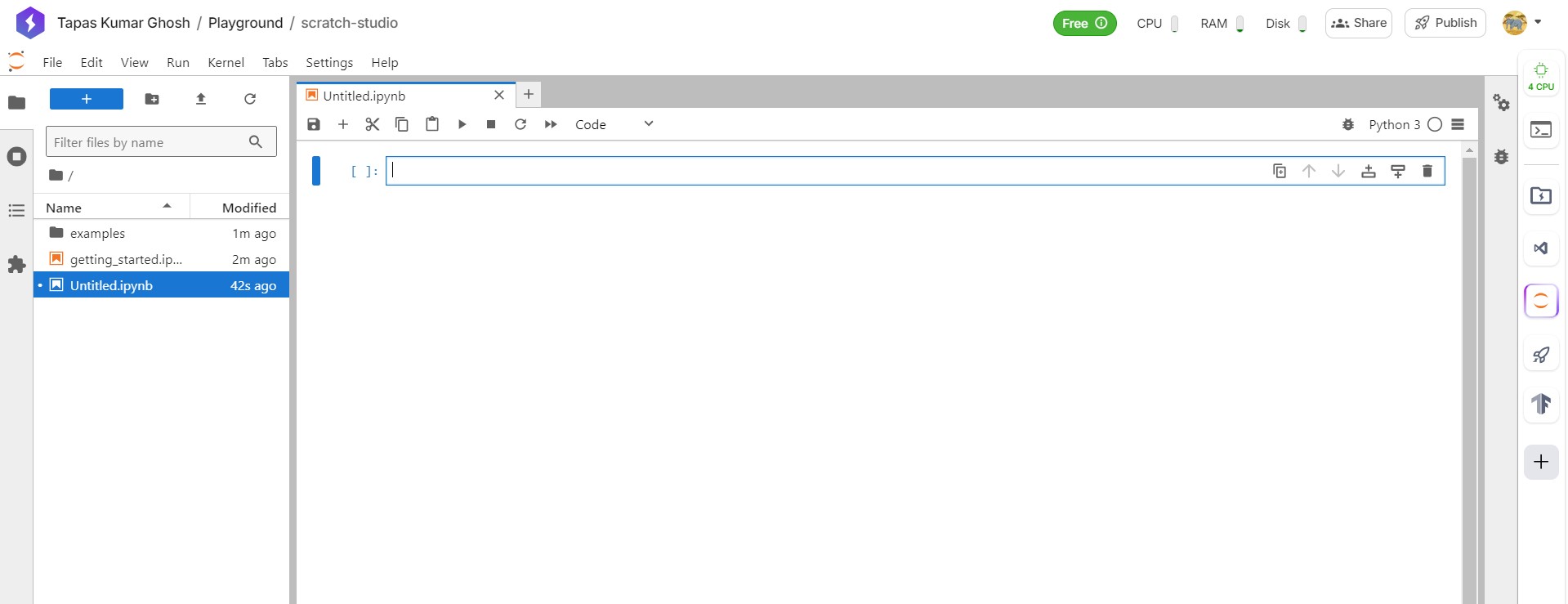

PyTorch Lightning Studio

PyTorch Lightning Studio is a cloud-based platform designed to enhance the management and monitoring of machine learning experiments. It provides a user-friendly interface for managing your PyTorch Lightning projects, tracking experiments, and collaborating with team members.

This platform is particularly useful for researchers and developers who need an organized environment for managing complex experiments and workflows.

Here are the steps to get started with PyTorch Lightning Studio −

Step 1: Navigate to PyTorch Lightning Studio

Navigate to the "Studios" option and then press "Quick Start". When you register an account with PyTorch Lightning, verification of your details can take up to one day before you can access it.

Step 2: Launch the Studio

Click on "Launch a studio free".

Step 3: Check Your Email

Open the verification email, confirm your details and launch the PyTorch Studio.

Step 4: Start Using PyTorch Lightning

Follow the on-screen instructions to get started.

Step 5: Choose Your Platform

Select the platform you are comfortable with.

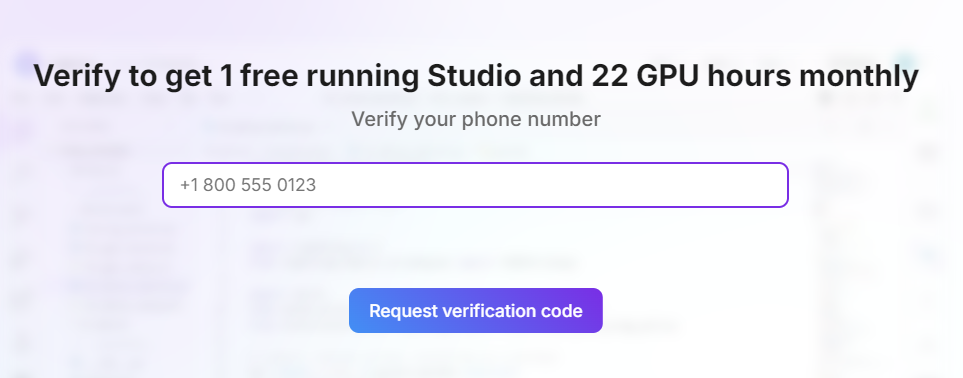

Step 6: Verify Your Mobile Number

Enter your mobile number for verification.

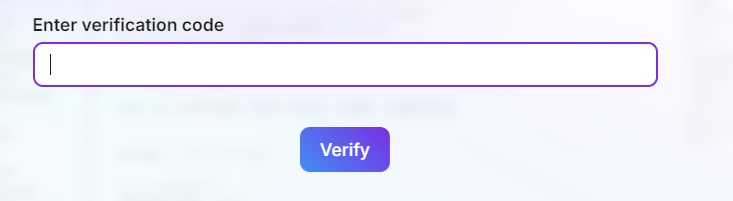

Step 7: Submit OTP

Enter the one-time password (OTP) sent to your mobile number.

Step 8: Complete Verification

Finish the verification process.

Step 9: Run PyTorch Lightning

You are now ready to use PyTorch Lightning for your projects.

Jupyter Notebook

Jupyter Notebook is a popular interactive environment for running Python code. When used with PyTorch Lightning, Jupyter Notebook provides tools for fast prototyping, development, training, and testing of deep learning models.

To install PyTorch Lightning in a Jupyter Notebook environment, follow these steps −

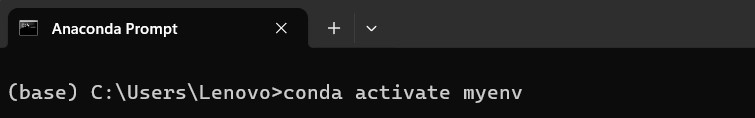

Step 1: Open Anaconda Prompt

Search for "Anaconda Prompt" in the Windows search bar and activate your environment.

conda activate myenv

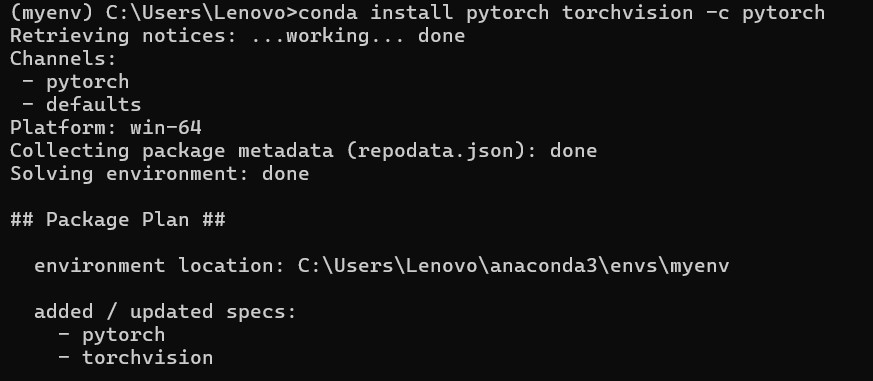

Step 2: Install PyTorch

Run the command.

conda install pytorch torchvision -c pytorch

Step 3: Install PyTorch Lightning

Run the command.

pip install pytorch-lightning

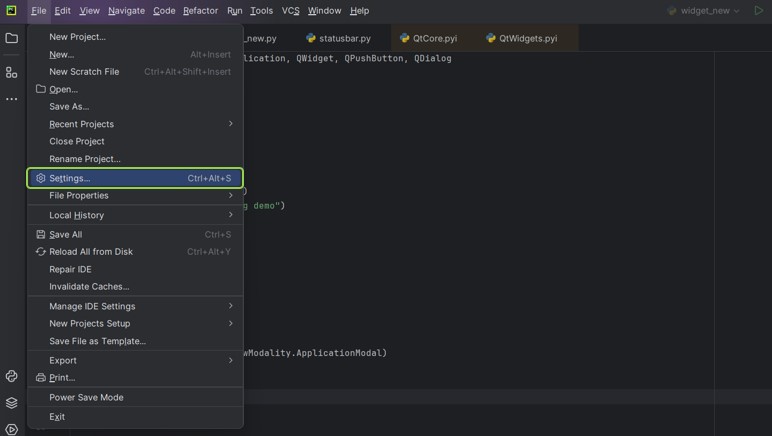

PyCharm

PyCharm is a popular IDE for Python development and can be used to build and train deep learning models with PyTorch Lightning.

Below are the instructions to install PyTorch Lightning in a PyCharm environment −

Step 1: Open PyCharm Settings

Go to "File" -> "Settings."

Step 2: Select Python Interpreter

Click on the Python interpreter option and press the "+" symbol.

Step 3: Search for PyTorch

Search for "torch" (the package name of PyTorch on PyPI) in the search bar and install it.

Step 4: Install PyTorch Lightning

Search for "pytorch-lightning" and install the package.

Step 5: Verify Installation

The package is now installed, and you can start using PyTorch Lightning in your projects.