- Llama - Home

- Llama - Introduction

- Llama - Environment Setup

- Llama - Getting Started

- Llama - Data Preparation

- Llama - Training From Scratch

- Fine-Tuning Llama Model

- Llama - Evaluating Model Performance

- Llama - Optimizing Models

Llama Useful Resources

Getting Started with Llama

Llama stands for Large Language Model Meta AI. Founded by Meta AI, architecture has been advanced within transformers with design to handle more complex problems in natural language processing. Llama generates text in such a manner that it bestows on it human-like characteristics, improving the comprehension of language and much more, including text generation, translation, summarization, and more.

Llama is one kind of beast that manages to optimize performance on rather smaller datasets than its peer GPT-3 requires. It's designed to be efficient on smaller datasets, thereby becoming accessible to a much wider set of users yet scalable.

Overview of Llama Architecture

The Transformer model serves as the backbone architecture of Llama. It was first introduced by Vaswani et al. under the name "Attention is All You Need, but it is essentially an autoregressive model. This means that it generates text one token at a time, predicting the next word in the sequence given what has appeared up to the point.

Important Features of the Llama Architecture are as follows −

- Efficient Training − Llama can train on much smaller sets of data efficiently. So it's particularly great for research and applications where either available compute power is a limitation, or where data availability might be small.

- Auto-regressive Structure − It generates tokens one by one, making the text generated highly coherent because each next token is based on all the tokens it has so far.

- Multi-head Self-Attention − The attention mechanism of the model is designed in a way that assigns different weights to words within a sentence based on importance so it understands both local and global contexts within the input,

- Stacked Transformer Layers − Llama stacks many transformer blocks consisting of a self-attention mechanism followed by feedforward neural networks.

Why Llama?

Llama has achieved a reasonable level of computationally efficient fitting of its model capacity. It can generate very long coherent text streams and perform nearly any task, including question answering and summarization, all the way down to language translation, among other resource-frugal activities. Llama models are smaller and less expensive to run than some of the other large-scale language models, like GPT-3, so the work is thereby accessible to more people.

Llama Variants

Llama existed in various flavors, all of which were trained with a varying number of parameters −

- Llama-7B = 7 billion parameters

- Llama-13B = 13 billion parameters

- Llama-30B = 30 billion parameters

- Llama-65B = 65 billion parameters

In doing so, the user may pick the right variant of the model according to his hardware as well as requirements for the specific task.

Understanding Components of the Model

The functionality of Llama is built on just a few highly crucial components. Let's discuss each, taking into consideration how they intercommunicate to enhance the overall performance of the model.

Embedding Layer

The embedding layer of Llama is the mapping of the input tokens to high-dimensional vectors. It thus captures semantic relationships between words. The intuition behind this mapping is that in a continuous space of vectors, semantically similar tokens are closest to each other.

The embedding layer also readies the input for the transformational layers down the line by changing the shape of the tokens into dimensions that the transformational layers are expecting.

import torch import torch.nn as nn # Embedding layer embedding = nn.Embedding(num_embeddings=10000, embedding_dim=256) # Tokenized input (for example: "The future is bright") input_tokens = torch.LongTensor([2, 45, 103, 567]) # Output embedding embedding_output = embedding(input_tokens) print(embedding_output)

Output

tensor([[-0.4185, -0.5514, -0.8762, ..., 0.7456, 0.2396, 2.4756],

[ 0.7882, 0.8366, 0.1050, ..., 0.2018, -0.2126, 0.7039],

[ 0.3088, -0.3697, 0.1556, ..., -0.9751, -0.0777, -1.3352],

[ 0.7220, -0.7661, 0.2614, ..., 1.2152, 1.6356, 0.6806]],

grad_fn=<EmbeddingBackward0>)

This word embedding representation also allows the model to understand how the tokens are interlinked with each other in complex ways.

Self-Attention Mechanism

The self-attention of transformer models is the innovation where Llama will have the attention mechanism on parts of the sentence and understand how every word relates to others. Multi-head attention, in this case, is used by Llama, splitting the attention mechanism into multiple heads so that the model can freely explore parts of the input sequence.

Query, key, and value matrices are thus created, upon which the model chooses how much weightor attentionis given to each of the words relative to others.

import torch import torch.nn.functional as F # Sample query, key, value tensors queries = torch.rand(1, 4, 16) # (batch_size, seq_length, embedding_dim) keys = torch.rand(1, 4, 16) values = torch.rand(1, 4, 16) # Compute scaled dot-product attention scores = torch.bmm(queries, keys.transpose(1, 2)) / (16 ** 0.5) attention_weights = F.softmax(scores, dim=-1) # apply attention weights to values output = torch.bmm(attention_weights, values) print(output)

Output

tensor([[[0.4782, 0.5340, 0.4079, 0.4829, 0.4172, 0.5398, 0.3584, 0.6369,

0.5429, 0.7614, 0.5928, 0.5989, 0.6796, 0.7634, 0.6868, 0.5903],

[0.4651, 0.5553, 0.4406, 0.4909, 0.3724, 0.5828, 0.3781, 0.6293,

0.5463, 0.7658, 0.5828, 0.5964, 0.6699, 0.7652, 0.6770, 0.5583],

[0.4675, 0.5414, 0.4212, 0.4895, 0.3983, 0.5619, 0.3676, 0.6234,

0.5400, 0.7646, 0.5865, 0.5936, 0.6742, 0.7704, 0.6792, 0.5767],

[0.4722, 0.5550, 0.4352, 0.4829, 0.3769, 0.5802, 0.3673, 0.6354,

0.5525, 0.7641, 0.5722, 0.6045, 0.6644, 0.7693, 0.6745, 0.5674]]])

This attention mechanism makes it possible for the model to "attend" to different parts of the sequence, thereby allowing it to learn long-distance dependencies between words in the sentence.

Multi-Head Attention

Multi-head attention is the extension of self-attention in which multiple attention heads are applied in parallel. In doing so, each attention head picks on a different part of the input, making sure that all possibilities for dependencies in the data are realized.

Then it comes to a feed-forward network processing separately each of the attention results.

import torch

import torch.nn as nn

import torch.nn.functional as F

class MultiHeadAttention(nn.Module):

def __init__(self, dim_model, num_heads):

super(MultiHeadAttention, self).__init__()

self.num_heads = num_heads

self.dim_head = dim_model // num_heads

self.query = nn.Linear(dim_model, dim_model)

self.key = nn.Linear(dim_model, dim_model)

self.value = nn.Linear(dim_model, dim_model)

self.out = nn.Linear(dim_model, dim_model)

def forward(self, x):

B, N, C = x.shape

queries = self.query(x).reshape(B, N, self.num_heads, self.dim_head).transpose(1, 2)

keys = self.key(x).reshape(B, N, self.num_heads, self.dim_head).transpose(1, 2)

values = self.value(x).reshape(B, N, self.num_heads, self.dim_head).transpose(1, 2)

intention = torch.matmul(queries, keys.transpose(-2, -1)) / (self.dim_head ** 0.5)

attention_weights = F.softmax(intention, dim=-1)

out = torch.matmul(attention_weights, values).transpose(1, 2).reshape(B, N, C)

return self.out(out)

# Multiple attention building and calling

attention_layer = MultiHeadAttention(128, 8)

output = attention_layer(torch.rand(1, 10, 128)) # (batch_size, seq_length, embedding_dim)

print(output)

Output

tensor([[[-0.1015, -0.1076, 0.2237, ..., 0.1794, -0.3297, 0.1177],

[-0.1028, -0.1068, 0.2219, ..., 0.1798, -0.3307, 0.1175],

[-0.1018, -0.1070, 0.2228, ..., 0.1793, -0.3294, 0.1183],

...,

[-0.1021, -0.1075, 0.2245, ..., 0.1803, -0.3312, 0.1171],

[-0.1041, -0.1070, 0.2232, ..., 0.1817, -0.3308, 0.1184],

[-0.1027, -0.1087, 0.2223, ..., 0.1801, -0.3295, 0.1179]]],

grad_fn=<ViewBackward0>)

Feed-Forward Network

The feed-forward network is perhaps the most trivial yet fundamentally important building block of transformer blocks. By the name itself, it applies some form of non-linear transformation to the input sequence; thus, the model is allowed to learn more complex patterns.

Every layer of Llama's attention uses a feed-forward network for this transformation.

class FeedForward(nn.Module):

def __init__(self, dim_model, dim_ff):

super(FeedForward, self).__init__() #This line was incorrectly indented

self.fc1 = nn.Linear(dim_model, dim_ff)

self.fc2 = nn.Linear(dim_ff, dim_model)

self.relu = nn.ReLU()

def forward(self, x):

return self.fc2(self.relu(self.fc1(x)))

# define and use the feed-forward network

ffn = FeedForward(128, 512)

ffn_output = ffn(torch.rand(1, 10, 128)) # (batch_size, seq_length, embedding_dim)

print(ffn_output)

Output

tensor([[[ 0.0222, -0.1035, -0.1494, ..., 0.0891, 0.2920, -0.1607],

[ 0.0313, -0.2393, -0.2456, ..., 0.0704, 0.1300, -0.1176],

[-0.0838, -0.0756, -0.1824, ..., 0.2570, 0.0700, -0.1471],

...,

[ 0.0146, -0.0733, -0.0649, ..., 0.0465, 0.2674, -0.1506],

[-0.0152, -0.0657, -0.0991, ..., 0.2389, 0.2404, -0.1785],

[ 0.0095, -0.1162, -0.0693, ..., 0.0919, 0.1621, -0.1421]]],

grad_fn=<ViewBackward0>)

Steps to Create a Token for Using the Llama Models

Before accessing the Llama models, you need to create token on Hugging face. We are using Llama 2 models for its lightweight. You can choose any model. Please follow the below steps to get started.

Step 1: Sign-up for Hugging Face account (if you haven't done so)

- On the Hugging Face home page, click on Sign Up.

- For all of you who haven't yet created an account, create one now

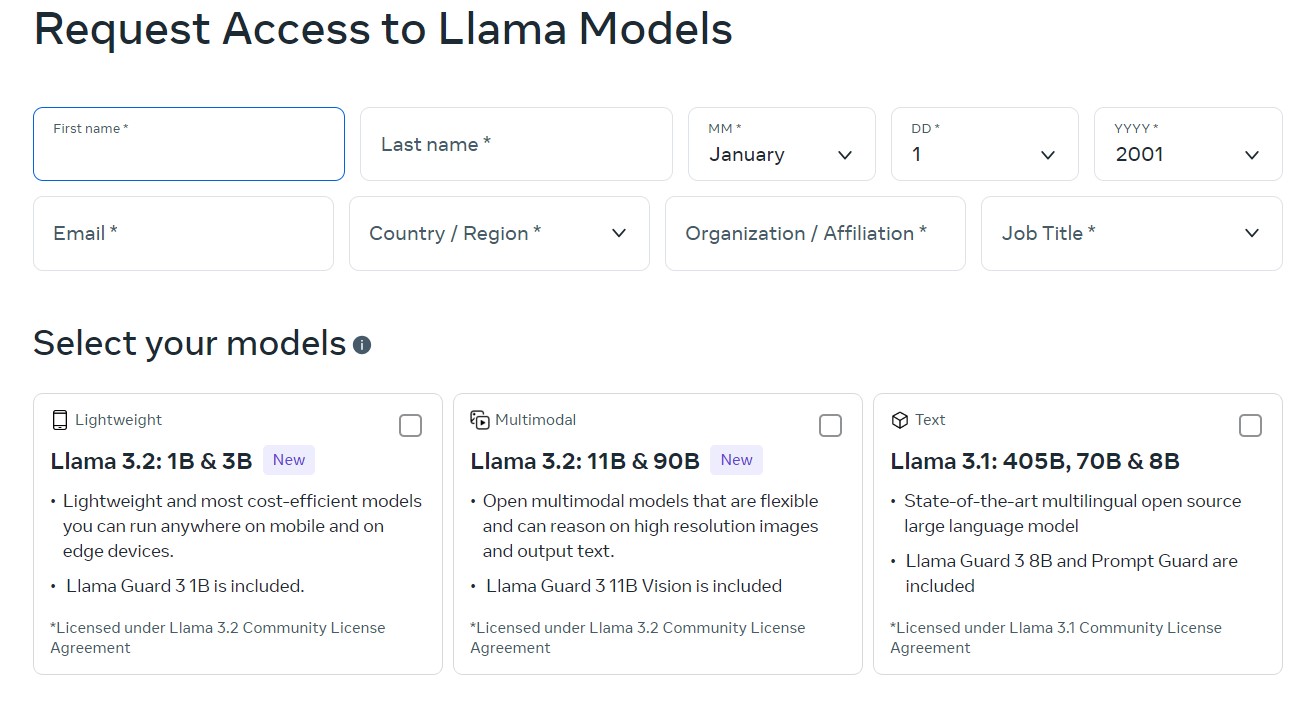

Step 2: Fill out Request Form to Access to Llama Models

To download and use the Llama models you need to fill up a request form. For this −

- Go to Llama downloads page, and fill all the required fields.

- Select your model (here we will use Llama 2 for sake of simplicity and light weight) and click on next the form.

- Accept the Llama 2 terms and conditions and click on Accept and continue.

- You are all set.

Step 3: Obtain Access Token

- Go to your Hugging Face account.

- Click the photo of your profile on top right-hand side to find yourself on Settings

- Navigate to Access Tokens

- Click on Create new token

- Name it for example "Llama Access Token"

- Tick the user permissions. The scope should be at least set as read to access gated models.

- Click create token

- Copy the token, you will use that for your next step.

Step 4: Authentication in Your Script with the Token

Once you have your Hugging Face token, you must authenticate with this token in your Python script.

First of all, if you haven't done so already, install the required packages −

!pip install transformers huggingface_hub torch

Import the login method from Hugging Face Hub and login using your token −

from huggingface_hub import login # Set your_token to your token login(token=" <your_token>")

Or, if you do not wish to log in interactively, you can directly pass your token in the code at the time you load your model.

Step 5: Update the Code to Load the Model Using the Token

Loading the gated model using your token.

The token can be passed directly to the from_pretrained() method.

from transformers import AutoModelForCausalLM, AutoTokenizer

from huggingface_hub import login

token = "your_token"

# Login with your token (put <your_token> in quotes)

login(token=token)

# Loading tokenizer and model from gated repository and using auth token

tokenizer = AutoTokenizer.from_pretrained('meta-llama/Llama-2-7b-hf', token=token)

model = AutoModelForCausalLM.from_pretrained('meta-llama/Llama-2-7b-hf', token=token)

Step 6: Run the Code

With your token inserted and logged or passed during model-loading functions, your script should now be able to access the gated repository and feed text from the Llama model.

Running Your First Llama Script

We have created tokens and other authentications; now it is time to run your very first Llama script. You can use a pre-trained Llama model for text generation. We are using Llama-2-7b-hf, one of the Llama 2 models.

from transformers import AutoModelForCausalLM, AutoTokenizer

#import tokenizer and model

tokenizer = AutoTokenizer.from_pretrained('meta-llama/Llama-2-7b-hf', token=token)

model = AutoModelForCausalLM.from_pretrained('meta-llama/Llama-2-7b-hf', token=token)

#Encode input text and generate

input_text = "The future of AI is"

input_ids = tokenizer.encode(input_text, return_tensors="pt")

outputs = model.generate(input_ids, max_length=50, num_return_sequences=1)

# Decode and print output

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

Output

The future of AI is a subject of great interest, and it is not surprising that many people are interested in the subject. It is a very interesting topic, and it is a subject that is likely to be discussed for many years to come

Generate Text − The above script generates a text sequence that represents how Llama can interpret the context as well as create coherent writing.

Summing Up

Impressive due to its transformer-based architecture, multi-head attention, and autoregressive generation capabilities. The balance struck between computational efficiency and how well the model performs makes Llama applicable to a broad range of natural language processing tasks. Familiarity with the most important components and architecture of Llama will give you the chance to try generating text, translation, summarization, and much, much more.