- Chainer - Home

- Chainer - Introduction

- Chainer - Installation

- Chainer Basic Concepts

- Chainer - Neural Networks

- Chainer - Creating Neural Networks

- Chainer - Core Components

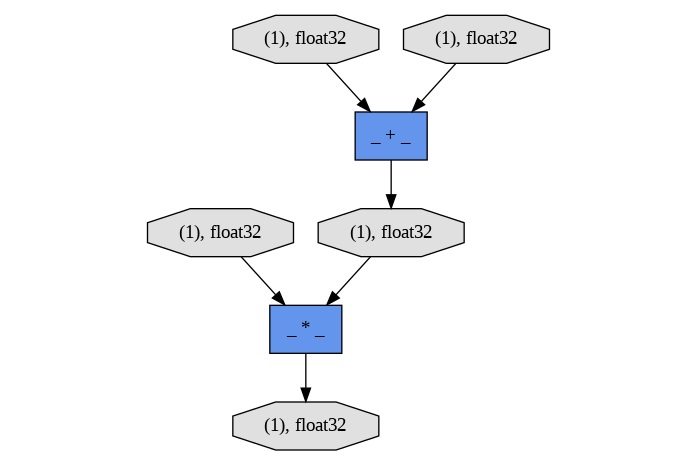

- Chainer - Computational Graphs

- Chainer - Dynamic vs Static Graphs

- Chainer - Forward & Backward Propagation

- Chainer - Training & Evaluation

- Chainer - Advanced Features

- Chainer - Integration with Other Frameworks

- Chainer Useful Resources

- Chainer - Quick Guide

- Chainer - Useful Resources

- Chainer - Discussion

Chainer - Quick Guide

Chainer - Introduction

Chainer is a deep learning framework that prioritizes flexibility and ease of use. One of its standout features is the define-by-run approach where the computational graph is generated dynamically as the code runs rather than being defined upfront. This approach contrasts with more rigid frameworks and allows for greater adaptability, particularly when developing complex models like recurrent neural networks (RNNs) or models that involve conditional operations.

The Chainer Framework is designed to be accessible to both novice and experienced developers, Chainer integrates smoothly with NumPy and efficiently leverages GPU resources for handling large-scale computations. Its ecosystem is robust by offering extensions such as ChainerMN for distributed learning, ChainerRL for reinforcement learning and ChainerCV for computer vision tasks by making it suitable for a wide array of applications.

Chainer's framework is combination of flexibility and a strong ecosystem has made it a popular choice in academic research and industry, especially in Japan where it was first developed. Despite the rise of other frameworks the Chainer remains a powerful tool for those who need a dynamic and user-friendly platform for deep learning.

Key Features of Chainer

Following are the Key features of the Chainer Frame −

- Dynamic Graph Construction (Define-by-Run): When compared to static frameworks, Chainer constructs its computational graph on-the-fly as operations are executed. This dynamic approach enhances flexibility by making it easier to implement complex models such as those involving loops or conditional statements.

- Integration with NumPy: Chainer seamlessly integrates with NumPy by allowing users to leverage familiar array operations and simplifying the process of transitioning from scientific computing to deep learning.

- GPU Optimization: This framework is designed to make efficient use of GPUs which accelerates the training of large-scale models and computations which are critical for handling complex neural networks and extensive datasets.

- Comprehensive Ecosystem: Chainer's ecosystem includes various tools and extensions such as ChainerMN for distributed computing, ChainerRL for reinforcement learning and ChainerCV for tasks in computer vision which broaden its applicability across different fields.

- Customizability: Users can easily create custom components such as layers and loss functions by providing extensive control over the design and behavior of neural networks.

Advantages of Chainer

The Chainer Frame work has many advantages, which helps the users to work effectively. Let's see them in detail as below −

- Adaptability: The Chainer Frame work is more ability to dynamically build and modify the computational graph as needed makes Chainer highly adaptable, facilitating experimentation with novel architectures and models.

- Ease of Use: Chainer's straightforward design and its compatibility with NumPy make it accessible for users at various experience levels, from beginners to advanced practitioners.

- Effective GPU Utilization: By harnessing GPU power the Chainer efficiently manages the demands of training deep learning models by improving performance and reducing computation time.

- Strong Community and Support:Chainer benefits from an active user community and ongoing support particularly in Japan, which helps in troubleshooting and continuously improving the framework.

- Versatile Applications: The Chainer's Framework extensive range of extensions and tools allows Chainer to be used effectively across different domains, from basic machine learning tasks to complex deep learning applications.

Applications of Chainer in Machine Learning

Chainer Framework offers a versatile platform for a wide range of machine learning applications which makes it a powerful tool for developing and deploying advanced models across various domains.

- Neural Network Construction: Chainer is well-suited for developing various neural network architectures such as feedforward, convolutional and recurrent networks. Its dynamic graph creation process allows for flexible and efficient model design which is even for complex structures.

- Computer Vision: Chainer excels in computer vision tasks, particularly with the ChainerCV extension which supports image classification, object detection and segmentation. It leverages deep learning models to effectively process and analyze visual data.

- Natural Language Processing (NLP): Chainer's adaptability makes it ideal for NLP applications such as text classification, language modeling and translation. It supports advanced models like transformers and RNNs, crucial for understanding and generating human language.

- Reinforcement Learning: The ChainerRL extension equips Chainer to handle reinforcement learning tasks by enabling the development of algorithms where agents learn to make decisions in various environments, utilizing techniques such as Q-learning and policy gradients.

- Generative Modeling: Chainer is capable of building and training generative models such as GANs and VAEs. These models are used to create synthetic data that closely mimics real-world datasets.

- Time Series Analysis: With the support for RNNs and LSTMs, Chainer is effective in time series analysis by making it suitable for forecasting in fields like finance and weather prediction, where data sequences are key.

- Automated Machine Learning (AutoML): Chainer is also used in AutoML tasks, automating the selection of models and tuning of hyperparameters. This automation streamlines the machine learning workflow by optimizing the process for better results.

- Distributed Training: ChainerMN allows Chainer to perform distributed training across multiple GPUs or nodes by making it possible to scale machine learning models efficiently and handle large-scale datasets.

- Research and Development: Chainer is highly valued in research settings for its flexibility and ease of experimentation by enabling rapid prototyping and testing of new machine learning concepts and algorithms.

Chainer - Installation

Chainer is a versatile deep learning framework that enables dynamic graph construction by making it suitable for a wide range of machine learning tasks. Whether we are new to deep learning or an experienced developer, setting up Chainer on our system is a simple process.

Installation and Setup of Chainer

Let's go through the steps for installation and setup which ensures us fully equipped to begin building deep learning models with Chainer −

Prerequisites

Before we are installing Chainer, we should ensure that our system meets the following prerequisites −

Python

Chainer supports Python 3.5 and above. It's recommended to use Python 3.7 or later for the best compatibility and performance.

We should ensure Python is installed on our system. We can download the latest version from the official Python Website. We Python already installed in our system we can verify our installation by running the following code −

python --version

Following is the python version installed in the system −

Python 3.12.5

Pip

Pip is the package manager used to install Chainer and its dependencies in our working environment. Generally Pip comes with Python, but we can install and upgrade it with the help of below code −

python -m ensurepip --upgrade

Below is the output of the above code execution −

Defaulting to user installation because normal site-packages is not writeable Looking in links: c:\Users\91970\AppData\Local\Temp\tmpttbcugpx Requirement already satisfied: pip in c:\program files\windowsapps\pythonsoftwarefoundation.python.3.12_3.12.1520.0_x64__qbz5n2kfra8p0\lib\site-packages (24.2)

After installation and upgradation we can check the version of the installed pip by executing the below code −

pip --version

Following is the version of the pip installed in the system −

pip 24.2 from C:\Program Files\WindowsApps\PythonSoftwareFoundation.Python.3.12_3.12.1520.0_x64__qbz5n2kfra8p0\Lib\site-packages\pip (python 3.12)

Installing Chainer

Once we've met the prerequisites we can proceed with installing Chainer. The installation process is straightforward and can be +one using two methods such as pip and Pythons package manager. Here's how we can do it −

Installing Chainer with CPU Support

-If we want to install Chainer without GPU support, we can install it directly with the help of pip. The following command can be used to install the latest version of Chainer along with the necessary dependencies. This is suitable for systems that don't need the GPU acceleration −

pip install chainer

Below is the output of installation of Chainer Framework −

Defaulting to user installation because normal site-packages is not writeable Requirement already satisfied: chainer in c:\users\91970\appdata\local\packages\pythonsoftwarefoundation.python.3.12_qbz5n2kfra8p0\localcache\local-packages\python312\site-packages (7.8.1) ............................ ............................ ............................ Requirement already satisfied: six>=1.9.0 in c:\users\91970\appdata\local\packages\pythonsoftwarefoundation.python.3.12_qbz5n2kfra8p0\localcache\local-packages\python312\site-packages (from chainer) (1.16.0)

Installing Chainer with GPU Support

If we want to take advantage of GPU acceleration we need to install Chainer with CUDA support. The version of Chainer we install should match the version of CUDA installed on our system.

We can install the different versions of chainer by replacing the version number with the one corresponding to our installed CUDA version.

For CUDA 9.0

If we want to install Chainer with the help of CUDA 9.0 support with the help of below command. This command ensures that Chainer is installed along with the necessary dependencies to utilize GPU acceleration with CUDA 9.0.

pip install chainer[cuda90]

For CUDA 10.0

Here we can use the below command to install the chainer, which tells pip to install Chainer along with the libraries required for CUDA 10.0 support. The 100 corresponds to CUDA version 10.0 −

pip install chainer[cuda100]

For CUDA 10.1

Following command specifies that Chainer should be installed with the libraries required to support CUDA 10.1. The 101 corresponds to CUDA version 10.1.

pip install chainer[cuda101]

For CUDA 11.0 and later

Below is the command specifies that Chainer should be installed with support for CUDA 11.0. The 110 corresponds to CUDA version 11.0.

pip install chainer[cuda110]

Verifying the Installation

After installing Chainer it's important to ensure that the installation was successful and that Chainer is ready to use. We can test the installation by running the python script with the code as mentioned below −

import chainer print(chainer.__version__)

Below is the version of the Chainer framework installed in the system −

7.8.1

Installing Additional Extensions

Chainer comes with several optional extensions that are useful for specific tasks. Depending on our project needs we might have to install as follows −

-

ChainerMN: A tool for distributed deep learning by enabling model training across multiple GPUs or nodes.

pip install chainermn

-

ChainerRL: A suite designed for reinforcement learning by offering resources to develop and train reinforcement learning algorithms.

pip install chainerrl

-

ChainerCV: For computer vision applications which includes tools and models for tasks like object detection and image segmentation.

pip install chainercv

Setting up the Virtual Environment

Using a virtual environment is recommended to isolate our Chainer installation and its dependencies from other Python projects. To avoid conflicts with other Python packages, it's a good idea to use a virtual environment. Below is the code of installing the virtual environment −

pip install virtualenv

Now after installing, we can create the virtual environment with the help of below code −

virtualenv chainer_env

Here we are activating the virtual environment by executing the below code on windows platform −

chainer_env\Scripts\activate

If we want to activate the virtual environment in the MacOs/Linux, then we have to execute the below code −

source chainer_env/bin/activate

Now install the chainer framework in the virtual environment with the below code −

pip install chainer

Troubleshooting Common Installation Issues

- CUDA Compatibility: Ensure that the version of CUDA installed on our system matches the one specified during the Chainer installation. Mismatches can cause runtime errors.

- Dependency Conflicts: If we encounter issues with dependencies then try updating pip with pip install --upgrade pip and reinstalling Chainer.

By following all the above mentioned steps the Chainer will be successfully installed on our system by allowing us to start developing and training deep learning models. Whether were working with CPUs or GPUs, Chainer provides the flexibility and power we need for a wide range of machine learning tasks.

Chainer - Neural Networks

Neural networks are computational models inspired by the human brain's structure and function. They consist of interconnected layers of nodes i.e. neurons, where each node processes input data and passes the result to the next layer. The network learns to perform tasks by adjusting the weights of these connections based on the error of its predictions.

This learning process is often called training which enables neural networks to identify patterns, classify data and make predictions. They are widely used in machine learning for tasks such as image recognition, natural language processing and more.

Structure of a Neural Network

A neural network is a computational model that mimics the way neurons in the human brain work. It is composed of layers of nodes known as neurons, which are connected by edges or weights. A typical neural network has an input layer, one or more hidden layers and an output layer. Following is the detailed structure of a Neural network −

Input Layer

The Input layer is the first layer in a neural network and serves as the entry point for the data that will be processed by the network. It doesnt perform any computations rather, it passes the data directly to the next layer in the network.

Following are the key characteristics of the input layer −

- Nodes/Neurons: Each node in the input layer represents a single feature from the input data. For example if we have an image with 28x28 pixels then the input layer would have 784 nodes i.e. one for each pixel.

- Data Representation: The input data is often normalized or standardized before being fed into the input layer to ensure that all features have the same scale which helps in improving the performance of the neural network.

- No Activation Function: Unlike the hidden and output layers the input layer does not apply an activation function. Its primary role is to distribute the raw input features to the subsequent layers for further processing.

Hidden layers

Hidden layers are situated between the input layer and the output layer in a neural network. They are termed "hidden" because their outputs are not directly visible in the input data or the final output predictions.

The primary role of these layers is to process and transform the data through multiple stages by enabling the network to learn complex patterns and features. This transformation is achieved through weighted connections and non-linear activation functions which allow the network to capture intricate relationships within the data.

Following are the key characteristics of the input layer −

- Nodes/Neurons: Each hidden layer consists of multiple neurons which apply weights to the inputs they receive and pass the results through an activation function. The number of neurons and layers can vary depending on the complexity of the task.

- Weights and Biases: Each neuron in a hidden layer has associated weights and biases which are adjusted during the training process. These parameters help the network learn the relationships and patterns in the data.

-

Activation Function: Hidden layers typically use activation functions to introduce non-linearity into the model. Common activation functions are mentioned below −

- ReLU (Rectified Linear Unit): ReLU()=max(0,)

- Sigmoid: ()=1/(1+e-x)

- Tanh (Hyperbolic Tangent): tanh(x) = (ex - e-x)/(ex + e-x)

- Leaky ReLU: Leaky ReLU(x) = max(0.01x,x)

- Learning and Feature Extraction: Hidden layers are where most of the learning occurs. They transform the input data into representations that are more suitable for the task at hand. Each successive hidden layer builds on the features extracted by the previous layers which allows the network to learn complex patterns.

- Depth and Complexity: The number of hidden layers and neurons in each layer determine the depth and complexity of the network. More hidden layers and neurons generally allow the network to learn more intricate patterns but also increase the risk of overfitting and require more computational resources.

Output Layer

The output layer is the final layer in a neural network that produces the network's predictions or results. This layer directly generates the output corresponding to the given input data based on the transformations applied by the preceding hidden layers.

The number of neurons in the output layer typically matches the number of classes or continuous values the model is expected to predict. The output is often passed through an activation function such as softmax for classification tasks to provide a probability distribution over the possible classes.

Following are the key characteristics of the output layer −

- Nodes/Neurons: The number of neurons in the output layer corresponds to the number of classes or target variables in the problem. For example if in a binary classification problem then there would be one neuron or two neurons in some setups. In a multi-class classification problem with 10 classes then there would be 10 neurons.

-

Activation Function: These in the output layer play a crucial role in shaping the final output of a neural network by making them appropriate for the specific type of prediction task such as classification, regression, etc. The choice of activation function directly influences the interpretation of the network's predictions. Common activation functions are mentioned below −

- Classification Tasks: Commonly use the softmax activation function for multi-class classification which converts the output to a probability distribution over the classes or sigmoid for binary classification.

- Regression Tasks: Typically use a linear activation function as the goal is to predict a continuous value rather than a class.

- Tanh (Hyperbolic Tangent): tanh(x) = (ex - e-x)/(ex + e-x)

- Leaky ReLU: Leaky ReLU(x) = max(0.01x,x)

- Output: The output layer delivers the final result of the network which may be a probability, a class label or a continuous value which depends on the type of task. In classification tasks the neuron with the highest output value typically indicates the predicted class.

Types of Neural Networks

Neural networks come in various architectures with each tailored to specific types of data and tasks. Here's a detailed overview of the primary types of neural networks −

Feedforward Neural Networks (FNNs)

Feedforward Neural Networks (FNNs) are a fundamental class of artificial neural networks characterized by their unidirectional flow of information. In these networks the data travels in a single direction i.e. from the input layer, through any hidden layers and finally to the output layer. This architecture ensures that there are no cycles or loops in the connections between nodes (neurons).

Following are the key features of FNNs −

-

Architecture: FNNs are composed of three principal layers as mentioned below −

- Input Layer: This layer receives the initial data features.

- Hidden Layers: Intermediate layers that process the data and extract relevant features. Neurons in these layers apply activation functions to their inputs.

- Output Layer: This final layer produces the network's output which can be a classification label, probability or a continuous value.

- Forward Propagation: Data moves through the network from the input layer to the output layer. Each neuron processes its input and transmits the result to the next layer.

- Activation Functions: These functions introduce non-linearity into the network by allowing it to model more complex relationships. Typical activation functions include ReLU, sigmoid and tanh.

- Training: FNNs are trained using methods like backpropagation and gradient descent. This process involves updating the network's weights to reduce the error between the predicted and actual outcomes.

- Applications: FNNs are employed in various fields such as image recognition, speech processing and regression analysis.

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are a specialized type of neural network designed to process data with a grid-like topology such as images. They are particularly effective for tasks involving spatial hierarchies and patterns such as image and video recognition.

Following are the key features of the CNNs −

-

Architecture: CNNs are composed of three principal layers as defined below −

- Convolutional Layers: These layers apply convolutional filters to the input data. Each filter scans the input to detect specific features such as edges or textures. The convolution operation produces feature maps that highlight the presence of these features.

- Pooling Layers: This layer is also known as subsampling or downsampling layers. The pooling layers reduce the spatial dimensions of feature maps while retaining essential information. Common pooling operations include max pooling which selects the maximum value and average pooling which computes the average value.

- Fully Connected Layers: After several convolutional and pooling layers, the high-level feature maps are flattened into a one-dimensional vector and passed through fully connected layers. These layers perform the final classification or regression based on the extracted features.

- Forward Propagation: In CNNs the data moves through the network in a series of convolutional, pooling and fully connected layers. Each convolutional layer detects features while pooling layers reduce dimensionality and fully connected layers make final predictions.

- Activation Functions: CNNs use activation functions like ReLU (Rectified Linear Unit) to introduce non-linearity which helps the network learn complex patterns. Other activation functions like sigmoid and tanh may also be used depending on the task.

- Training: CNNs are trained using techniques such as backpropagation and optimization algorithms like stochastic gradient descent (SGD). During training the network learns the optimal values for convolutional filters and weights to minimize the error between predicted and actual outcomes.

- Applications: CNNs are widely used in computer vision tasks such as image classification, object detection and image segmentation. They are also applied in fields like medical image analysis and autonomous driving where spatial patterns and hierarchies are crucial.

Long Short-Term Memory Networks (LSTMs)

LSTMs are a type of Recurrent Neural Network (RNN) designed to address specific challenges in learning from sequential data, particularly the problems of long-term dependencies and vanishing gradients. They enhance the basic RNN architecture by introducing specialized components that allow them to retain information over extended periods.

Following are the key features of the LSTMs −

-

Architecture: Below are the details of the architechure of LSTMs Networks −

- Cell State: LSTMs include a cell state that acts as a memory unit by carrying information across different time steps. This state is updated and maintained through the network by allowing it to keep relevant information from previous inputs.

-

Gates: LSTMs use gates to control the flow of information into and out of the cell state. These gates include −

- Forget Gate: This gate determines which information from the cell state should be discarded.

- Input Gate: This controls the addition of new information to the cell state.

- Output Gate: This gate regulates what part of the cell state should be outputted and passed to the next time step.

- Hidden State:In addition to the cell state, the LSTMs maintain a hidden state that represents the output of the network at each time step. The hidden state is updated based on the cell state and influences the predictions made by the network.

- Forward Propagation: During forward propagation the LSTMs process the input data step-by-step by updating the cell state and hidden state as they go. The gates regulate the information flow ensuring that relevant information is preserved while irrelevant information is filtered out. The final output at each time step is derived from the hidden state which incorporates information from the cell state.

- Activation Functions: LSTMs use activation functions such as sigmoid and tanh to manage the gating mechanisms and update the cell and hidden states. The sigmoid function is used to compute the gates while tanh is applied to regulate the values within the cell state.

- Training: LSTMs are trained using backpropagation through time (BPTT) which are similar to other RNNs. This process involves unfolding the network across time steps and applying backpropagation to update the weights based on the error between the predicted and actual outputs. LSTMs mitigate issues like vanishing gradients by effectively managing long-term dependencies by making them more suitable for tasks requiring memory of past inputs.

-

Applications: LSTMs are particularly useful for tasks involving complex sequences and long-term dependencies including: −

- Natural Language Processing (NLP): For tasks such as language modeling, machine translation, and text generation, where understanding context over long sequences is crucial.

- Time Series Forecasting: Predicting future values in data with long-term trends such as stock market analysis or weather prediction.

- Speech Recognition: Converting spoken language into text by analyzing and retaining information from audio sequences over time.

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are specialized for handling sequential data by using internal memory through hidden states. This capability makes them ideal for tasks where understanding the sequence or context is essential such as in language modeling and time series prediction.

Following are the key features of the RNNs −

-

Architecture: RNNs are composed of two principal layers which are given below −

- Recurrent Layers: RNNs are characterized by their looping connections within the network by enabling them to maintain and update a memory of past inputs via a hidden state. This feature allows the network to use information from previous steps to influence current and future predictions.

- Hidden State: This serves as the network's internal memory which is updated at each time step. It retains information from earlier inputs and impacts the processing of subsequent inputs.

- Forward Propagation: Data in RNNs progresses sequentially through the network. At each time step the network processes the current input, updates the hidden state based on the previous inputs and generates an output. The updated hidden state is then used for processing the next input.

- Activation Functions: To model complex patterns and introduce non-linearity the RNNs use activation functions such as tanh or ReLU. Advanced RNN variants like Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) include additional mechanisms to better manage long-term dependencies and address challenges such as vanishing gradients.

- Training: RNNs are trained through a method called backpropagation through time (BPTT). This involves unfolding the network across time steps and applying backpropagation to adjust weights based on the discrepancy between predicted and actual outputs. Training RNNs can be difficult due to issues like vanishing gradients which are often mitigated by using advanced RNN architectures.

- Applications: RNNs are particularly effective for tasks involving sequential data such as −

- Natural Language Processing (NLP): Applications such as text generation, machine translation, and sentiment analysis.

- Time Series Forecasting: Predicting future values in sequences, such as stock prices or weather conditions.

- Speech Recognition: Converting spoken language into text by analyzing sequences of audio data.

Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are a class of machine learning frameworks designed to generate realistic data samples. GANs consist of two neural networks one is a generator and other is a discriminator which are trained together in a competitive setting. This adversarial process allows GANs to produce data that closely mimics real-world data.

Following are the key features of the GANs −

-

Architecture: GANs are mainly consists of two networks in their architecture −

- Generator: The generator's role is to create fake data samples from random noise. It learns to map this noise to data distributions similar to the real data. The generator's goal is to create data that is indistinguishable from real data in the eyes of the discriminator.

- Discriminator: The discriminator's role is to distinguish between real data (from the actual dataset) and fake data (produced by the generator). It outputs a probability indicating whether a given sample is real or fake. The discriminator aims to correctly classify the real and fake samples.

- Adversarial Process: The process of training the Generators and Discriminators at the same time is known as Adversarial Process. Let's see the important processes in GANs −

- Generator Training: The generator creates a batch of fake data samples and sends them to the discriminator and trying to fool it into thinking they are real.

- Discriminator Training: The discriminator receives both real data and fake data from the generator and it tries to correctly identify which is fake and real data.

- Loss Functions: The generator's loss is based on how well it can fool the discriminator while the discriminator's loss is based on how accurately it can distinguish real from fake data. The networks are updated alternately with the generator trying to minimize its loss and the discriminator trying to maximize its accuracy.

- Convergence: The training process continues until the generator produces data so realistic that the discriminator can no longer distinguish between real and fake samples with high accuracy. At this point the generator has learned to produce outputs that closely resemble the original data distribution.

- Applications: GANs have found extensive applications across multiple domains as mentioned below −

- Image Generation: Producing realistic images, such as generating lifelike human faces or creating original artwork.

- Data Augmentation: Increasing the diversity of training datasets for machine learning models, particularly useful in situations with limited data.

- Style Transfer: Transforming the style of one image to another, like converting a photograph into the style of a specific painting.

- Super-Resolution: Improving the resolution of images by generating detailed, high-resolution outputs from low-resolution inputs.

Autoencoders

Autoencoders are a type of artificial neural network used primarily for unsupervised learning. They are designed to learn efficient representations of data, typically for dimensionality reduction or feature learning. An autoencoder consists of two main parts namely, the encoder and the decoder. The goal is to encode the input data into a lower-dimensional representation (latent space) and then reconstruct the original input from this compressed representation.

Following are the key features of the Autoencoders −

- Architecture: Following are the elements included in the architecture of the Autoencoders −

- Encoder: The encoder compresses the input data into a smaller with latent representation. This process involves mapping the input data to a lower-dimensional space through one or more hidden layers. The encoder's layers use activation functions such as ReLU or sigmoid to transform the input into a compact representation that captures the essential features of the data.

- Latent Space (Bottleneck): The latent space is the compressed with low-dimensional representation of the input data. It acts as a bottleneck that forces the network to focus on the most important features of the data, filtering out noise and redundancy. The size of the latent space determines the degree of compression. A smaller latent space leads to more compression but may lose some information while a larger latent space retains more detail.

- Decoder: The decoder rebuilds the original input data from the latent representation. It has a structure that mirrors the encoder and progressively expanding the compressed data back to its original size. The output layer of the decoder usually employs the same activation function as the input data to produce the final reconstructed output.

- Training: Autoencoders are trained using backpropagation with the objective of minimizing the difference between the original input and the reconstructed output. The loss function used is often mean squared error (MSE) or binary cross-entropy depending on the nature of the input data. The network adjusts its weights during training to learn an efficient encoding that captures the most significant features of the input while being able to reconstruct it accurately.

-

Applications: Autoencoders are versatile tools in machine learning which can be applied in various fields such as −

- Dimensionality Reduction: They help in compressing data by reducing the number of features while retaining crucial information.

- Anomaly Detection: Autoencoders can identify anomalies by recognizing patterns that differ significantly from normal data typically through reconstruction errors.

- Data Denoising: They are effective in removing noise from images, signals or other data types.

- Generative Models: Especially with Variational Autoencoders (VAEs) autoencoders can generate new data samples that closely resemble the original dataset.

Graph Neural Networks (GNNs)

Graph Neural Networks (GNNs) are a specialized type of neural network designed to work with data that is organized in graph structures. In a graph the data is represented as nodes (vertices) connected by edges (relationships).

GNNs utilize this graph-based structure to learn and make predictions by making them particularly useful for tasks where data naturally forms a graph. By effectively capturing the relationships and dependencies between nodes, GNNs excel in tasks that involve complex, interconnected data.

Following are the key features of the GNNs −

- Architecture: Here are the components that are included in the Graph Neural Networks (GNNs)

- Node Representation: Each node in the graph has an initial feature vector representing its attributes. These feature vectors are updated through the network's layers.

- Message Passing: GNNs use a message-passing mechanism where each node exchanges information with its neighboring nodes. This step allows the network to aggregate information from neighboring nodes to update its own representation.

- Aggregation Function: An aggregation function combines the messages received from neighboring nodes. Common aggregation methods include summing, averaging or applying more complex operations.

- Update Function: After aggregation the node's feature vector is updated using a function that often includes neural network layers such as fully connected layers or activation functions.

- Readout Function: The final representation of the graph or nodes can be obtained through a readout function which might aggregate the node features into a global graph representation or compute final predictions.

-

Training: The GNNs use below mentioned methods for training purpose −

- Loss Function: GNNs are trained with loss functions specific to their tasks such as node classification, graph classification or link prediction. The loss function quantifies the difference between the predicted outputs and the actual ground truth.

- Optimization: The training process involves optimizing the network's weights using gradient-based optimization algorithms. Common methods such as stochastic gradient descent (SGD) and Adam. These methods adjust the weights to minimize the loss by improving the model's accuracy and performance on the given task.

- Applications: Below are the applications where GNNs are used −

- Node Classification: Assigning labels or categories to individual nodes based on their features and the overall graph structure. This is useful for tasks such as identifying types of entities within a network.

- Graph Classification: Categorizing entire graphs into different classes. This can be applied in scenarios like classifying molecules in chemistry or categorizing different types of social networks.

- Link Prediction: Forecasting the likelihood of connections or edges forming between nodes. This is valuable in recommendation systems such as predicting user connections or suggesting products.

- Graph Generation: Creating new graphs or structures from learned patterns. This is beneficial in fields like drug discovery where new molecular structures are proposed based on existing data.

- Social Network Analysis: Evaluating social interactions within a network to identify influential nodes, detect communities or predict social dynamics and trends.

Chainer - Core Components

Chainer is a versatile deep learning framework designed to facilitate the development and training of neural networks with ease. The core components of Chainer provide a robust foundation for building complex models and performing efficient computations.

In chainer the core component the Chain class is used for managing network layers and parameters such as Links and Functions for defining and applying model operations and the Variable class for handling data and gradients.

Additionally the Chainer incorporates powerful Optimizers for updating model parameters, utilities for managing xDataset and DataLoader and a dynamic Computational Graph that supports flexible model architectures. Together all these components enable streamlined model creation, training and optimization by making Chainer a comprehensive tool for deep learning tasks.

Here are the different core components of the Chainer Framework −

Variables

In Chainer the Variable class is a fundamental building block that represents data and its associated gradients during the training of neural networks. A Variable encapsulates not only the data such as inputs, outputs or intermediate computations but also the information required for automatic differentiation which is crucial for backpropagation.

Key Features of Variable

Below are the key features of the variables in the Chainer Framework −

- Data Storage: A Variable holds the data in the form of a multi-dimensional array which is typically a NumPy or CuPy array, depending on whether computations are performed on the CPU or GPU. The data stored in a Variable can be input data, output predictions or any intermediate values computed during the forward pass of the network.

- Gradient Storage: During backpropagation the Chainer computes the gradients of the loss function with respect to each Variable. These gradients are stored within the Variable itself. The grad attribute of a Variable contains the gradient data which is used to update the model parameters during training.

- Automatic Differentiation: Chainer automatically constructs a computational graph as operations are applied to Variable objects. This graph tracks the sequence of operations and dependencies between variables by enabling efficient calculation of gradients during the backward pass. The backward method can be called on a Variable to trigger the computation of gradients throughout the network.

- Device Flexibility: Variable supports both CPU by using NumPy and GPU by using CuPy arrays by making it easy to move computations between devices. Operations on Variable automatically adapt to the device where the data resides.

Example

Following example shows how to use Chainer's Variable class to perform basic operations and calculate gradients via backward propagation −

import chainer

import numpy as np

# Create a Variable with data

x = chainer.Variable(np.array([1.0, 2.0, 3.0], dtype=np.float32))

# Perform operations on Variable

y = x ** 2 + 2 * x + 1

# Print the result

print("Result:", y.data) # Output: [4. 9. 16.]

# Assume y is a loss and perform backward propagation

y.grad = np.ones_like(y.data) # Set gradient of y to 1 for backward pass

y.backward() # Compute gradients

# Print the gradient of x

print("Gradient of x:", x.grad) # Output: [4. 6. 8.]

Here is the output of the chainer's variable class −

Result: [ 4. 9. 16.] Gradient of x: [4. 6. 8.]

Functions

In Chainer Functions are operations that are applied to data within a neural network. These functions are essential building blocks that perform mathematical operations, activation functions, loss computations and other transformations on the data as it flows through the network.

Chainer provides a wide range of predefined functions in the chainer.functions module by enabling users to easily build and customize neural networks.

Key functions in Chainer

Activation Functions: These functions in neural networks introduce non-linearity to the model by enabling it to learn complex patterns in the data. They are applied to the output of each layer to determine the final output of the network. Following are the activation functions in chainer −

-

ReLU (Rectified Linear Unit): The ReLU outputs are given as input directly if it's positive otherwise it outputs zero. It's widely used in neural networks because it helps mitigate the vanishing gradient problem and is computationally efficient by making it effective for training deep models. The formula for ReLU is given as −

$$ReLU(x) = max(\theta, x)$$

The function of ReLU in chainer.functions module is given as F.relu(x).

-

sigmoid: This function maps the input to a value between 0 and 1 by making it ideal for binary classification tasks. It provides a smooth gradient which helps in gradient-based optimization but can suffer from the vanishing gradient problem in deep networks. The formula for sigmoid is given as −

$$Sigmoid(x)=\frac{1}{1+e^{-x}}$$

The function for Sigmoid in chainer.functionsmodule is given as F.sigmoid(x)

-

Tanh (Hyperbolic Tangent): This function in Chainer is employed as an activation function in neural networks. It transforms the input to a value between -1 and 1 by resulting in a zero-centered output. This characteristic can be beneficial during training as it helps to address issues related to non-centered data which potentially improving the convergence of the model. The formula for Tanh is given as −

$$Tanh(x) = \frac{e^x - e^{-x}}{e^x + e^{-x}}$$

We have the function F.tanh(x) in chainer.functions module for calculating the Tanh in chainer.

-

Leaky ReLU: This is also called as Leaky Rectified Linear Unit function in neural networks is a variant of the standard ReLU activation function. Unlike ReLU which outputs zero for negative input where as Leaky ReLU permits a small, non-zero gradient for negative inputs.

This adjustment helps prevent the "dying ReLU" problem where neurons become inactive and cease to learn by ensuring that all neurons continue to contribute to the model's learning process. The formula for Leaky ReLU is given as −

$$Leaky Relu(x) = max(\alpha x, x)$$

Where, $\alpha$ is a small constant. The chainer.functions module has the function F.leaky_relu(x) to calculate Leaky ReLu in chainer.

-

Softmax: This is an activation function typically employed in the output layer of neural networks especially for multi-class classification tasks. It transforms a vector of raw prediction scores (logits) into a probability distribution where each probability is proportional to the exponential of the corresponding input value.

The probabilities in the output vector sum to 1 by making Softmax ideal for representing the likelihood of each class in a classification problem. The formula for Softmax is given as −

$$Softmax(x_{i})=\frac{e^{x_{i}}}{\sum_{j} e^{x_{j}}}$$

The chainer.functions module has the function F.softmax(x) to calculate Softmax in chainer.

Example

Here's an example which shows how to use various activation functions in Chainer within a simple neural network −

import chainer

import chainer.links as L

import chainer.functions as F

import numpy as np

# Define a simple neural network using Chainer's Chain class

class SimpleNN(chainer.Chain):

def __init__(self):

super(SimpleNN, self).__init__()

with self.init_scope():

# Define layers: two linear layers

self.l1 = L.Linear(4, 3) # Input layer with 4 features, hidden layer with 3 units

self.l2 = L.Linear(3, 2) # Hidden layer with 3 units, output layer with 2 units

def __call__(self, x):

# Forward pass using different activation functions

# Apply ReLU activation after the first layer

h = F.relu(self.l1(x))

# Apply Sigmoid activation after the second layer

y = F.sigmoid(self.l2(h))

return y

# Create a sample input data with 4 features

x = np.array([[0.5, -1.2, 3.3, 0.7]], dtype=np.float32)

# Convert input to Chainer's Variable

x_var = chainer.Variable(x)

# Instantiate the neural network

model = SimpleNN()

# Perform a forward pass

output = model(x_var)

# Print the output

print("Network output after applying ReLU and Sigmoid activations:", output.data)

Here is the output of the Activation functions used in simple neural networks −

Network output after applying ReLU and Sigmoid activations: [[0.20396319 0.7766712 ]]

Chain and ChainList

In Chainer the Chain and ChainList are fundamental classes that facilitate the organization and management of layers and parameters within a neural network. Both Chain and ChainList are derived from chainer.Link the base class responsible for defining model parameters. However they serve different purposes and are used in distinct scenarios. Let's see in detail about the chain and chainlist as follows −

Chain

The Chain class is designed to represent a neural network or a module within a network as a collection of links (layers). When using Chain we can define the network structure by explicitly specifying each layer as an instance variable. This approach is beneficial for networks with a fixed architecture.

We can use Chain when we have a well-defined, fixed network architecture where we want to directly access and organize each layer or component of the model.

Following are the key features of Chain Class −

- Named Components: Layers or links added to a Chain are accessible by name by making it straightforward to reference specific parts of the network.

- Static Architecture: The structure of a Chain is usually defined at initialization and doesn't change dynamically during training or inference.

Example

Following is the example which shows the usage of the Chain class in the Chainer Framework −

import chainer

import chainer.links as L

import chainer.functions as F

# Define a simple neural network using Chain

class SimpleChain(chainer.Chain):

def __init__(self):

super(SimpleChain, self).__init__()

with self.init_scope():

self.l1 = L.Linear(4, 3) # Linear layer with 4 inputs and 3 outputs

self.l2 = L.Linear(3, 2) # Linear layer with 3 inputs and 2 outputs

def forward(self, x):

h = F.relu(self.l1(x)) # Apply ReLU after the first layer

y = self.l2(h) # No activation after the second layer

return y

# Instantiate the model

model = SimpleChain()

print(model)

Below is the output of the above example −

SimpleChain( (l1): Linear(in_size=4, out_size=3, nobias=False), (l2): Linear(in_size=3, out_size=2, nobias=False), )

ChainList

The ChainList class is similar to Chain but instead of defining each layer as an instance variable we can store them in a list-like structure. ChainList is useful when the number of layers or components may vary or when the architecture is dynamic.

We can use the ChainList when we have a model with a variable number of layers or when the network structure can change dynamically. It's also useful for architectures like recurrent networks where the same type of layer is used multiple times.

Following are the key features of ChainList −

- Unordered Components: Layers or links added to a ChainList are accessed by their index rather than by name.

- Flexible Architecture: It is more suitable for cases where the network's structure might change or where layers are handled in a loop or list.

Example

Here is the example which shows how to use the ChainList class in the Chainer Framework −

import chainer

import chainer.links as L

import chainer.functions as F

# Define a neural network using ChainList

class SimpleChainList(chainer.ChainList):

def __init__(self):

super(SimpleChainList, self).__init__(

L.Linear(4, 3), # Linear layer with 4 inputs and 3 outputs

L.Linear(3, 2) # Linear layer with 3 inputs and 2 outputs

)

def forward(self, x):

h = F.relu(self[0](x)) # Apply ReLU after the first layer

y = self[1](h) # No activation after the second layer

return y

# Instantiate the model

model = SimpleChainList()

print(model)

Below is the output of using the ChainList class in Chainer Framework −

SimpleChainList( (0): Linear(in_size=4, out_size=3, nobias=False), (1): Linear(in_size=3, out_size=2, nobias=False), )

Optimizers

In Chainer optimizers plays a crucial role in training neural networks by adjusting the model's parameters such as weights and biases which are used to minimize the loss function.

During training, after the gradients of the loss function with respect to the parameters are calculated through back-propagation the optimizers use these gradients to update the parameters in a way that gradually reduces the loss.

Chainer offers a variety of built-in optimizers in which each employing different strategies for parameter updates to suit different types of models and tasks. Following are the key optimizers in Chainer −

SGD (Stochastic Gradient Descent)

The most basic optimizer is SGD updates in which each parameter in the direction of its negative gradient and scaled by a learning rate. It's simple but can be slow to converge.

Often these can be used in simpler or smaller models or as a baseline to compare with more complex optimizers.

The function in chainer to calculate SGD is given as chainer.optimizers.SGDExample

Here's a simple example of using Stochastic Gradient Descent (SGD) in Chainer to train a basic neural network. We'll use a small dataset which define a neural network model and then apply the SGD optimizer to update the model's parameters during training −

import chainer

import chainer.functions as F

import chainer.links as L

from chainer import Chain

import numpy as np

from chainer import Variable

from chainer import optimizers

class SimpleNN(Chain):

def __init__(self):

super(SimpleNN, self).__init__()

with self.init_scope():

self.fc1 = L.Linear(None, 100) # Fully connected layer with 100 units

self.fc2 = L.Linear(100, 10) # Output layer with 10 units (e.g., for 10 classes)

def forward(self, x):

h = F.relu(self.fc1(x)) # Apply ReLU activation function

return self.fc2(h) # Output layer

# Dummy data: 5 samples, each with 50 features

x_data = np.random.rand(5, 50).astype(np.float32)

# Dummy labels: 5 samples, each with 10 classes (one-hot encoded)

y_data = np.random.randint(0, 10, 5).astype(np.int32)

# Convert to Chainer variables

x = Variable(x_data)

y = Variable(y_data)

# Initialize the model

model = SimpleNN()

# Set up SGD optimizer with a learning rate of 0.01

optimizer = optimizers.SGD(lr=0.01)

optimizer.setup(model)

def loss_func(predictions, targets):

return F.softmax_cross_entropy(predictions, targets)

# Training loop

for epoch in range(10): # Number of epochs

# Zero the gradients

model.cleargrads()

# Forward pass

predictions = model(x)

# Calculate loss

loss = loss_func(predictions, y)

# Backward pass

loss.backward()

# Update parameters

optimizer.update()

# Print loss

print(f'Epoch {epoch + 1}, Loss: {loss.data}')

Following is the output of the SGD optimizer −

Epoch 1, Loss: 2.3100974559783936 Epoch 2, Loss: 2.233552932739258 Epoch 3, Loss: 2.1598660945892334 Epoch 4, Loss: 2.0888497829437256 Epoch 5, Loss: 2.020642042160034 Epoch 6, Loss: 1.9552147388458252 Epoch 7, Loss: 1.8926388025283813 Epoch 8, Loss: 1.8325523138046265 Epoch 9, Loss: 1.7749309539794922 Epoch 10, Loss: 1.7194255590438843

Momentum SGD

The Momentum SGDis an extension of SGD that includes momentum which helps to accelerate gradients vectors in the right directions by leading to faster converging. It accumulates a velocity vector in the direction of the gradient.

This is suitable for models where vanilla SGD struggles to converge. We have the function called chainer.optimizers.MomentumSGD to perform the Momentum SGD optimization.

Momentum Term: Adds a fraction of the previous gradient update to the current update. This helps to accelerate gradients vectors in the right directions and dampen oscillations.

Formula: The update rule for parameters with momentum is given as −

$$v_{t} = \beta v_{t-1} + (1 - \beta) \nabla L(\theta)$$ $$\theta = \theta-\alpha v_{t}$$

Where −

- $v_{t}$ is the velocity (or accumulated gradient)

- $\beta$ is the momentum coefficient (typically around 0.9)

- $\alpha$ is the learning rate

- $\nabla L(\theta)$ is the gradient of the loss function with respect to the parameters.

Example

Here's a basic example of how to use the Momentum SGD optimizer with a simple neural network in Chainer −

import chainer

import chainer.functions as F

import chainer.links as L

from chainer import Chain

from chainer import optimizers

import numpy as np

from chainer import Variable

class SimpleNN(Chain):

def __init__(self):

super(SimpleNN, self).__init__()

with self.init_scope():

self.fc1 = L.Linear(None, 100) # Fully connected layer with 100 units

self.fc2 = L.Linear(100, 10) # Output layer with 10 units (e.g., for 10 classes)

def forward(self, x):

h = F.relu(self.fc1(x)) # Apply ReLU activation function

return self.fc2(h) # Output layer

# Dummy data: 5 samples, each with 50 features

x_data = np.random.rand(5, 50).astype(np.float32)

# Dummy labels: 5 samples, each with 10 classes (one-hot encoded)

y_data = np.random.randint(0, 10, 5).astype(np.int32)

# Convert to Chainer variables

x = Variable(x_data)

y = Variable(y_data)

# Initialize the model

model = SimpleNN()

# Set up Momentum SGD optimizer with a learning rate of 0.01 and momentum of 0.9

optimizer = optimizers.MomentumSGD(lr=0.01, momentum=0.9)

optimizer.setup(model)

def loss_func(predictions, targets):

return F.softmax_cross_entropy(predictions, targets)

# Training loop

for epoch in range(10): # Number of epochs

# Zero the gradients

model.cleargrads()

# Forward pass

predictions = model(x)

# Calculate loss

loss = loss_func(predictions, y)

# Backward pass

loss.backward()

# Update parameters

optimizer.update()

# Print loss

print(f'Epoch {epoch + 1}, Loss: {loss.data}')

Following is the output of the Momentum SGD optimizer −

Epoch 1, Loss: 2.4459869861602783 Epoch 2, Loss: 2.4109833240509033 Epoch 3, Loss: 2.346194267272949 Epoch 4, Loss: 2.25825572013855 Epoch 5, Loss: 2.153470754623413 Epoch 6, Loss: 2.0379838943481445 Epoch 7, Loss: 1.9174035787582397 Epoch 8, Loss: 1.7961997985839844 Epoch 9, Loss: 1.677260398864746 Epoch 10, Loss: 1.5634090900421143

Adam

Adam optimizer combines the advantages of two other extensions of SGD namely AdaGrad, which works well with sparse gradients and RMSProp, which works well in non-stationary settings. Adam maintains a moving average of both the gradients and their squares and updates the parameters based on these averages.

This is often used as the default optimizer due to its robustness and efficiency across a wide range of tasks and models. In chainer we have the function chainer.optimizers.Adam to perform Adam optimization.

Following of the Key features of the Adam optimizer −

- Adaptive Learning Rates: Adam dynamically adjusts the learning rates for each parameter, making it effective across various tasks.

- Moments of Gradients: It calculates the first moment (mean) and second moment (uncentered variance) of gradients to improve optimization.

- Bias Correction: Adam uses bias-correction to address the bias introduced during initialization, particularly early in training.

-

Formula: The formula for Adam optimization is given as −

$$m_t = \beta_1 m_{t-1} + (1 - \beta_1) \nabla L(\theta)$$

$$v_t = \beta_2 v_{t-1} + (1 - \beta_2) (\nabla L(\theta))^2$$

$$\hat{m}_t = \frac{m_t}{1 - \beta_1^t}$$

$$\hat{v}_t = \frac{v_t}{1 - \beta_2^t}$$

$$\theta = \theta - \frac{\alpha\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}$$

Where, $\alpha$ is the learning rate $\beta 1$ and $\beta 2$ are the decay rates for the moving averages of the gradient and its square, typically 0.9 and 0.999 respectively, ${m_t}$ and ${v_t}$ are the first and second moment estimates and $\epsilon$ is small constant added for numerical stability.

Example

Following is the example which shows how to use the Adam Optimizer in chainer with a neural network −

import chainer

import chainer.functions as F

import chainer.links as L

from chainer import Chain

from chainer import optimizers

import numpy as np

from chainer import Variable

class SimpleNN(Chain):

def __init__(self):

super(SimpleNN, self).__init__()

with self.init_scope():

self.fc1 = L.Linear(None, 100) # Fully connected layer with 100 units

self.fc2 = L.Linear(100, 10) # Output layer with 10 units (e.g., for 10 classes)

def forward(self, x):

h = F.relu(self.fc1(x)) # Apply ReLU activation function

return self.fc2(h) # Output layer

# Dummy data: 5 samples, each with 50 features

x_data = np.random.rand(5, 50).astype(np.float32)

# Dummy labels: 5 samples, each with 10 classes (one-hot encoded)

y_data = np.random.randint(0, 10, 5).astype(np.int32)

# Convert to Chainer variables

x = Variable(x_data)

y = Variable(y_data)

# Initialize the model

model = SimpleNN()

# Set up Adam optimizer with default parameters

optimizer = optimizers.Adam()

optimizer.setup(model)

def loss_func(predictions, targets):

return F.softmax_cross_entropy(predictions, targets)

# Training loop

for epoch in range(10): # Number of epochs

# Zero the gradients

model.cleargrads()

# Forward pass

predictions = model(x)

# Calculate loss

loss = loss_func(predictions, y)

# Backward pass

loss.backward()

# Update parameters

optimizer.update()

# Print loss

print(f'Epoch {epoch + 1}, Loss: {loss.data}')

Here is the output of applying the Adam optimizer to a neural network −

Epoch 1, Loss: 2.4677982330322266 Epoch 2, Loss: 2.365001678466797 Epoch 3, Loss: 2.2655398845672607 Epoch 4, Loss: 2.1715924739837646 Epoch 5, Loss: 2.082294464111328 Epoch 6, Loss: 1.9973262548446655 Epoch 7, Loss: 1.9164447784423828 Epoch 8, Loss: 1.8396313190460205 Epoch 9, Loss: 1.7676666975021362 Epoch 10, Loss: 1.7006778717041016

AdaGrad

AdaGrad is also known as Adaptive Gradient Algorithm which is an optimization algorithm that adjusts the learning rate for each parameter based on the accumulated gradient history during training. It is particularly effective for sparse data and scenarios where features vary in frequency or importance.

This is suitable for problems with sparse data and for dealing with models where some parameters require more adjustment than others. The function chainer.optimizers.AdaGrad is used to perfrom AdaGrad optimization in Chainer.

Following are the key features of the AdaGrad Optimizer −

- Adaptive Learning Rates: AdaGrad adjusts the learning rate for each parameter individually based on the cumulative sum of squared gradients. This results in larger updates for infrequent parameters and smaller updates for frequent ones.

- No Need for Learning Rate Tuning: AdaGrad automatically scales the learning rate which often removing the necessity for manual tuning.

Formula: The formula for AdaGrad is given as follows −

$$g_t = \nabla L(\theta)$$ $$G_t = G_{t-1} +{g_t}^2$$ $$\theta = \theta - \frac{\alpha}{\sqrt{G_t} + \epsilon} g_t$$Where −

- $g_t$ is the gradient at time step $t$.

- $G_t$ is the accumulated sum of the squared gradients up to time $t$.

- $\alpha$ is the global learning rate.

- $\epsilon$ is a small constant added to prevent division by zero.

Example

Here's an example of how to use the AdaGrad optimizer in Chainer with a simple neural network −

import chainer

import chainer.functions as F

import chainer.links as L

from chainer import Chain

from chainer import optimizers

import numpy as np

from chainer import Variable

class SimpleNN(Chain):

def __init__(self):

super(SimpleNN, self).__init__()

with self.init_scope():

self.fc1 = L.Linear(None, 100) # Fully connected layer with 100 units

self.fc2 = L.Linear(100, 10) # Output layer with 10 units (e.g., for 10 classes)

def forward(self, x):

h = F.relu(self.fc1(x)) # Apply ReLU activation function

return self.fc2(h) # Output layer

# Dummy data: 5 samples, each with 50 features

x_data = np.random.rand(5, 50).astype(np.float32)

# Dummy labels: 5 samples, each with 10 classes (one-hot encoded)

y_data = np.random.randint(0, 10, 5).astype(np.int32)

# Convert to Chainer variables

x = Variable(x_data)

y = Variable(y_data)

# Initialize the model

model = SimpleNN()

# Set up AdaGrad optimizer with a learning rate of 0.01

optimizer = optimizers.AdaGrad(lr=0.01)

optimizer.setup(model)

def loss_func(predictions, targets):

return F.softmax_cross_entropy(predictions, targets)

# Training loop

for epoch in range(10): # Number of epochs

# Zero the gradients

model.cleargrads()

# Forward pass

predictions = model(x)

# Calculate loss

loss = loss_func(predictions, y)

# Backward pass

loss.backward()

# Update parameters

optimizer.update()

# Print loss

print(f'Epoch {epoch + 1}, Loss: {loss.data}')

Here is the output of applying the AdaGrad optimizer to a neural network −

Epoch 1, Loss: 2.2596702575683594 Epoch 2, Loss: 1.7732301950454712 Epoch 3, Loss: 1.4647505283355713 Epoch 4, Loss: 1.2398217916488647 Epoch 5, Loss: 1.0716438293457031 Epoch 6, Loss: 0.9412426352500916 Epoch 7, Loss: 0.8350374102592468 Epoch 8, Loss: 0.7446572780609131 Epoch 9, Loss: 0.6654194593429565 Epoch 10, Loss: 0.59764164686203

RMSProp

RMSProp optimizer is improved upon AdaGrad by introducing a decay factor to the sum of squared gradients by preventing the learning rate from shrinking too much. It's particularly effective in recurrent neural networks or models that require quick adaptation to varying gradient scales.

In Chainer to perform RMSProp optimizer we have the function chainer.optimizers.RMSprop.

Following are the key features of RMSProp optimizer −

- Decay Factor: RMSProp introduces a decay factor to the accumulated sum of squared gradients by preventing the learning rate from becoming too small and allowing for a more stable convergence.

- Adaptive Learning Rate: Like AdaGrad the RMSProp optimizer adapts the learning rate for each parameter individually based on the gradient history but it avoids the diminishing learning rate problem by limiting the accumulation of past squared gradients.

Formula: The formula for RMSProp optimizer is given as −

$$g_t = \nabla L(\theta)$$ $$E[g^2]_t = \gamma E[g^2]_{t-1} + (1 - \gamma){g_t}^2$$ $$\theta = \theta - \frac{\alpha}{\sqrt{E[g^2]_t} + \epsilon} g_t$$

Where −

- $g_t$ is the gradient at time step $t$.

- $E[g_2]$ is the moving average of the squared gradients.

- $\gamma$ is the decay factor which is typically around 0.9.

- $\alpha$ is the global learning rate.

- $\epsilon$ is a small constant added to prevent division by zero.

Example

Below is the example which shows how we can use the RMSProp optimizer in Chainer with a simple neural network −

import chainer

import chainer.functions as F

import chainer.links as L

from chainer import Chain

import numpy as np

from chainer import Variable

from chainer import optimizers

class SimpleNN(Chain):

def __init__(self):

super(SimpleNN, self).__init__()

with self.init_scope():

self.fc1 = L.Linear(None, 100) # Fully connected layer with 100 units

self.fc2 = L.Linear(100, 10) # Output layer with 10 units (e.g., for 10 classes)

def forward(self, x):

h = F.relu(self.fc1(x)) # Apply ReLU activation function

return self.fc2(h) # Output layer

# Dummy data: 5 samples, each with 50 features

x_data = np.random.rand(5, 50).astype(np.float32)

# Dummy labels: 5 samples, each with 10 classes (one-hot encoded)

y_data = np.random.randint(0, 10, 5).astype(np.int32)

# Convert to Chainer variables

x = Variable(x_data)

y = Variable(y_data)

# Initialize the model

model = SimpleNN()

# Set up RMSProp optimizer with a learning rate of 0.01 and decay factor of 0.9

optimizer = optimizers.RMSprop(lr=0.01, alpha=0.9)

optimizer.setup(model)

def loss_func(predictions, targets):

return F.softmax_cross_entropy(predictions, targets)

# Training loop

for epoch in range(10): # Number of epochs

# Zero the gradients

model.cleargrads()

# Forward pass

predictions = model(x)

# Calculate loss

loss = loss_func(predictions, y)

# Backward pass

loss.backward()

# Update parameters

optimizer.update()

# Print loss

print(f'Epoch {epoch + 1}, Loss: {loss.data}')

Following is the output of the above example of using the RMSProp optimization −

Epoch 1, Loss: 2.3203792572021484 Epoch 2, Loss: 1.1593462228775024 Epoch 3, Loss: 1.2626817226409912 Epoch 4, Loss: 0.6015896201133728 Epoch 5, Loss: 0.3906801640987396 Epoch 6, Loss: 0.28964582085609436 Epoch 7, Loss: 0.21569299697875977 Epoch 8, Loss: 0.15832018852233887 Epoch 9, Loss: 0.12146510928869247 Epoch 10, Loss: 0.09462013095617294

Datasets and Iterators in Chainer

In Chainer handling data efficiently is crucial for training neural networks. To facilitate this the chainer framework provides two essential components namely,Datasets and Iterators. These components help in managing data by ensuring that it is fed into the model in a structured and efficient manner.

Datasets

A dataset in Chainer is a collection of data samples that can be fed into a neural network for training, validation or testing. Chainer provides a Dataset class that can be extended to create custom datasets as well as several built-in dataset classes for common tasks.

Types of Datasets in Chainer

Chainer provides several types of datasets to handle various data formats and structures. These datasets can be broadly categorized into built-in datasets, custom datasets and dataset transformations.

Built-in Datasets

Chainer comes with a few popular datasets that are commonly used for benchmarking and experimentation. These datasets are readily available and can be loaded easily using built-in functions.

Following is the code to get the list of all available datasets in Chainer −

import chainer.datasets as datasets

# Get all attributes in the datasets module

all_datasets = [attr for attr in dir(datasets) if attr.startswith('get_')]

# Print the available datasets

print("Built-in datasets available in Chainer:")

for dataset in all_datasets:

print(f"- {dataset}")

Here is the output which displays all the built-in datasets in Chainer Framework −

Built-in datasets available in Chainer: - get_cifar10 - get_cifar100 - get_cross_validation_datasets - get_cross_validation_datasets_random - get_fashion_mnist - get_fashion_mnist_labels - get_kuzushiji_mnist - get_kuzushiji_mnist_labels - get_mnist - get_ptb_words - get_ptb_words_vocabulary - get_svhn

Custom Datasets

When working with custom data we can create our own datasets by subclassing chainer.dataset.DatasetMixin. This allows us to define how data should be loaded and returned.

Here is the example of creating the custom datasets using chainer.dataset.DatasetMixin and printing the first row in it −

import chainer

import numpy as np

class MyDataset(chainer.dataset.DatasetMixin):

def __init__(self, data, labels):

self.data = data

self.labels = labels

def __len__(self):

return len(self.data)

def get_example(self, i):

return self.data[i], self.labels[i]

# Creating a custom dataset

data = np.random.rand(100, 3)

labels = np.random.randint(0, 2, 100)

dataset = MyDataset(data, labels)

print(dataset[0])

Here is the output of the custom dataset first row −

(array([0.82744124, 0.33828446, 0.06409377]), 0)

Preprocessed Datasets

Chainer provides tools to apply transformations to datasets such as scaling, normalization or data augmentation. These transformations can be applied on-the-fly using TransformDataset.

Here is the example of using the Preprocessed Datasets in chainer −

from chainer.datasets import TransformDataset def transform(data): x, t = data x = x / 255.0 # Normalize input data return x, t # Apply transformation to dataset transformed_dataset = TransformDataset(dataset, transform) print(transformed_dataset[0])

Below is the first row of the preprocessed Datasets with the help of TransformDataset() function −

(array([0.00324487, 0.00132661, 0.00025135]), 0)

Concatenated Datasets

ConcatDataset is used to concatenate multiple datasets into a single dataset. This is useful when we have data spread across different sources. Here is the example of using the concatenated Datasets in chainer Framework in which prints out each sample's data and label from the concatenated dataset. The combined dataset includes all samples from both dataset1 and dataset2 −

import numpy as np

from chainer.datasets import ConcatenatedDataset

from chainer.dataset import DatasetMixin

# Define a custom dataset class

class MyDataset(DatasetMixin):

def __init__(self, data, labels):

self.data = data

self.labels = labels

def __len__(self):

return len(self.data)

def get_example(self, i):

return self.data[i], self.labels[i]

# Sample data arrays

data1 = np.random.rand(5, 3) # 5 samples, 3 features each

labels1 = np.random.randint(0, 2, 5) # Binary labels for data1

data2 = np.random.rand(5, 3) # Another 5 samples, 3 features each

labels2 = np.random.randint(0, 2, 5) # Binary labels for data2

# Create MyDataset instances

dataset1 = MyDataset(data1, labels1)

dataset2 = MyDataset(data2, labels2)

# Concatenate the datasets

combined_dataset = ConcatenatedDataset(dataset1, dataset2)

# Iterate over the combined dataset and print each example

for i in range(len(combined_dataset)):

data, label = combined_dataset[i]

print(f"Sample {i+1}: Data = {data}, Label = {label}")

Here is the output of the concatenated datasets in Chainer −

Sample 1: Data = [0.6153635 0.19185915 0.26029754], Label = 1 Sample 2: Data = [0.69201927 0.70393578 0.85382294], Label = 1 Sample 3: Data = [0.46647242 0.37787839 0.37249345], Label = 0 Sample 4: Data = [0.2975833 0.90399536 0.15978975], Label = 1 Sample 5: Data = [0.29939455 0.21290926 0.97327959], Label = 1 Sample 6: Data = [0.68297438 0.64874375 0.09129224], Label = 1 Sample 7: Data = [0.52026288 0.24197601 0.5239313 ], Label = 0 Sample 8: Data = [0.63250008 0.85023346 0.94985447], Label = 1 Sample 9: Data = [0.75183151 0.01774763 0.66343944], Label = 0 Sample 10: Data = [0.60212864 0.48215319 0.02736618], Label = 0

Tuple and Dict Datasets

Chainer provides special dataset classes called TupleDataset and DictDataset that allow us to manage multiple data sources conveniently. These classes are useful when we have more than one type of data such as features and labels or multiple feature sets that we want to handle together.

- Tuple Datasets: This is used to combine multiple datasets or data arrays into a single dataset where each element is a tuple of corresponding elements from the original datasets.

Here is the example which shows how to use the Tuple Datasets in Neural networks −

import numpy as np

from chainer.datasets import TupleDataset

# Create two datasets (or data arrays)

data1 = np.random.rand(100, 3) # 100 samples, 3 features each

data2 = np.random.rand(100, 5) # 100 samples, 5 features each

# Create a TupleDataset combining both data arrays

tuple_dataset = TupleDataset(data1, data2)

# Accessing data from the TupleDataset

for i in range(5):

print(f"Sample {i+1}: Data1 = {tuple_dataset[i][0]}, Data2 = {tuple_dataset[i][1]}")

Here is the output of the Tuple Datasets −

Sample 1: Data1 = [0.32992823 0.57362303 0.95586597], Data2 = [0.41455 0.52850591 0.55602243 0.36316931 0.93588697] Sample 2: Data1 = [0.37731994 0.00452533 0.67853069], Data2 = [0.71637691 0.04191565 0.54027323 0.68738626 0.01887967] Sample 3: Data1 = [0.85808665 0.15863516 0.51649116], Data2 = [0.9596284 0.12417238 0.22897152 0.63822924 0.99434029] Sample 4: Data1 = [0.2477932 0.27937585 0.59660463], Data2 = [0.92666318 0.93611279 0.96622103 0.41834484 0.72602107] Sample 5: Data1 = [0.71989544 0.46155552 0.31835487], Data2 = [0.27475741 0.33759694 0.22539997 0.40985004 0.00469414]

Here is the example which shows how to use the Dict Datasets in chainer −

import numpy as np

from chainer.datasets import DictDataset

# Create two datasets (or data arrays)

data1 = np.random.rand(100, 3) # 100 samples, 3 features each

labels = np.random.randint(0, 2, 100) # Binary labels for each sample

# Create a DictDataset

dict_dataset = DictDataset(data=data1, label=labels)

# Accessing data from the DictDataset

for i in range(5):

print(f"Sample {i+1}: Data = {dict_dataset[i]['data']}, Label = {dict_dataset[i]['label']}")

Here is the output of the Tuple Datasets −

Sample 1: Data = [0.09362018 0.33198328 0.11421714], Label = 1 Sample 2: Data = [0.53655817 0.9115115 0.0192754 ], Label = 0 Sample 3: Data = [0.48746879 0.18567869 0.88030764], Label = 0 Sample 4: Data = [0.10720832 0.79523399 0.56056922], Label = 0 Sample 5: Data = [0.76360577 0.69915416 0.64604595], Label = 1

Iterators

In Chainer iterators are crucial for managing data during the training of machine learning models. They break down large datasets into smaller chunks known as minibatches which can be processed incrementally. This approach enhances memory efficiency and speeds up the training process by allowing the model to update its parameters more frequently.

Types of Iterators in Chainer

Chainer provides various types of iterators to handle datasets during the training and evaluation of machine learning models. These iterators are designed to work with different scenarios and requirements such as handling large datasets, parallel data loading or ensuring data shuffling for better generalization.

SerialIterator