ONNX - Quick Guide

ONNX - Introduction

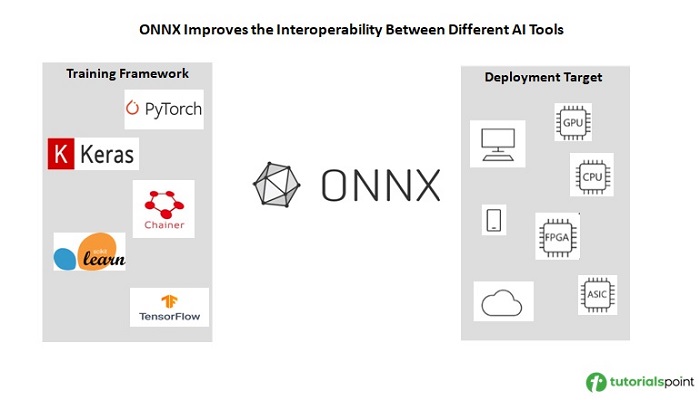

ONNX (Open Neural Network Exchange) is an open-source format designed to represent machine learning models. Its main goal is to make it easier for developers to move models between different machine learning frameworks, ensuring compatibility and flexibility.

By providing a standardized format, ONNX allows developers to optimize their workflows, leverage various tools, and improve model interoperability.

The ONNX format supports a wide variety of operators, which are the fundamental building blocks of machine learning models. This broad support makes it easier to represent complex models and convert them between different frameworks, such as TensorFlow, PyTorch, and scikit-learn.

ONNX is widely adopted across the AI community as a common exchange format that makes machine learning models more portable and easier to deploy efficiently. At a high level, ONNX usage involves the following steps −

- Train your model using any popular framework, such as PyTorch, TensorFlow, or scikit-learn.

- Convert the model to the ONNX format, ensuring compatibility across different platforms.

- Load and run the model in ONNX Runtime for optimized inference.

This process decouples training from deployment: once a model is exported, the resulting .onnx file no longer depends on the framework that produced it, so it can be deployed to any environment where an ONNX-compatible runtime is available.

What is Interoperability?

Interoperability in machine learning refers to the ability of different systems, tools, and frameworks to work together seamlessly. In the context of ONNX, interoperability means that a model trained in one machine learning framework can be used, modified, or deployed in another framework without the need for extensive adjustments.

History and Development of ONNX

ONNX was originally developed by the PyTorch team at Facebook under the name "Toffee". In September 2017, Facebook and Microsoft re-branded the project as ONNX and officially announced it.

The goal was to create an open standard for representing machine learning models that would foster greater collaboration and innovation. ONNX received broad support from major tech companies, including IBM, Huawei, Intel, AMD, Arm, and Qualcomm.

Key Features of ONNX

ONNX offers several key features and benefits that make it an attractive choice for AI developers −

- Standardization: ONNX provides a standardized format for machine learning models, making it easier to move models between different frameworks.

- Interoperability: With ONNX, models can be trained in one framework and then used in another, enhancing flexibility in model development and deployment. This interoperability is crucial for developers who want to experiment with different tools without being tied to a single ecosystem.

- Operators: ONNX supports a wide range of operators, allowing it to represent complex models accurately.

- ONNX Runtime: ONNX Runtime, a high-performance inference engine developed alongside the ONNX format, can optimize and execute ONNX models across various hardware platforms, from powerful GPUs to small edge devices. This helps models run efficiently regardless of the deployment environment.

- Community: ONNX is developed by an active community of developers and major tech companies, so it is regularly updated with new features and improvements.

ONNX - Environment Setup

Setting up an environment to work with ONNX is essential for creating, converting, and deploying machine learning models. In this tutorial we will learn about installing ONNX, its dependencies, and setting up ONNX Runtime for efficient model inference.

The ONNX environment setup involves installing the ONNX Runtime, its dependencies, and the required tools to convert and run machine learning models in ONNX format.

Setting Up ONNX for Python

Python is the most commonly used language for ONNX development. To set up the ONNX environment in Python, you need to install ONNX and model exporting libraries for popular frameworks like PyTorch, TensorFlow, and Scikit-learn. ONNX is required to convert and export models to the ONNX format.

pip install onnx

Installing ONNX Runtime

ONNX Runtime is the primary tool for running models in ONNX format. It is available for both CPU and GPU (CUDA and ROCm) environments.

Installing ONNX Runtime for CPU

To install the CPU version of ONNX Runtime, simply run the following command in your terminal −

pip install onnxruntime

This installs the basic ONNX Runtime package for CPU execution.

Installing ONNX Runtime for GPU

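If you want to use GPU acceleration, ONNX Runtime supports both CUDA (NVIDIA) and ROCm (AMD) platforms. The onnxruntime-gpu package from PyPI is the CUDA build. The CUDA version it targets depends on the release: older releases defaulted to CUDA 11.8, while releases from ONNX Runtime 1.19 onward default to CUDA 12.x, so check the CUDA requirements for the version you install.

pip install onnxruntime-gpu

This installs ONNX Runtime with CUDA support. A compatible CUDA and cuDNN installation must also be available on the machine.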

Install Model Exporting Libraries

Depending on the framework you're working with, install the corresponding library for converting models.

- PyTorch: ONNX export is built into PyTorch through the torch.onnx module. Following is the command −

pip install torch

- TensorFlow: Install tf2onnx to convert TensorFlow models −

pip install tf2onnx

- Scikit-learn: Use skl2onnx to export models from Scikit-learn −

pip install skl2onnx
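To confirm which exporters are available in the current environment, you can probe for their Python modules without importing them. This sketch uses only the standard library; the module names are the ones installed by the commands above (torch, tf2onnx, skl2onnx), and the helper name available_converters is hypothetical.

```python
import importlib.util

# Python module that provides the ONNX export path for each framework
CONVERTERS = {
    "PyTorch": "torch",          # exporter lives in torch.onnx
    "TensorFlow": "tf2onnx",
    "scikit-learn": "skl2onnx",
}


def available_converters():
    """Return {framework: bool}, True when the converter module can be imported."""
    return {fw: importlib.util.find_spec(mod) is not None for fw, mod in CONVERTERS.items()}


for framework, installed in available_converters().items():
    print(f"{framework:<13} {'installed' if installed else 'missing'}")
```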

Setting Up ONNX for Other Languages

C#/C++/WinML

For C# and C++ projects, ONNX Runtime offers native support for Windows ML (WinML) and GPU acceleration. We can install ONNX Runtime for CPU in C# using the following −

dotnet add package Microsoft.ML.OnnxRuntime

Similarly, use the following to install ONNX Runtime for GPU (CUDA) −

dotnet add package Microsoft.ML.OnnxRuntime.Gpu

JavaScript

ONNX Runtime is also available for JavaScript in both browser and Node.js environments. Following is the command to install ONNX Runtime for browsers −

npm install onnxruntime-web

Similarly, to install ONNX Runtime for Node.js use the following −

npm install onnxruntime-node

Setting Up ONNX for Mobile (iOS/Android)

ONNX Runtime can be set up for mobile platforms, including iOS and Android.

- iOS: Add ONNX Runtime to your Podfile and run pod install −

pod 'onnxruntime-c'

- Android: In your Android Studio project, add ONNX Runtime to your build.gradle file −

dependencies { implementation 'com.microsoft.onnxruntime:onnxruntime-android:latest.release' }

ONNX - Runtime

ONNX Runtime is a high-performance engine designed to run ONNX models efficiently. It works on different platforms, such as Windows, macOS, and Linux, and can use various types of hardware, such as CPUs and GPUs, to speed up model execution.

ONNX Runtime supports models from popular frameworks like PyTorch, TensorFlow, and scikit-learn, making it easy to move models between different environments.

Optimizing Inference with ONNX Runtime

Inference is the process of using a trained machine learning model to make predictions or decisions on new, often live, data. ONNX Runtime is built to accelerate this step; it powers many well-known Microsoft products, such as Office and Azure, and is also used in many community projects.

ONNX Runtime speeds up inference by optimizing how the model runs. Following are the main capabilities it brings to inference −

- Graph Optimizations: ONNX Runtime improves the model by making changes to the structure of the computation graph, which is how the model processes data. This helps the model run more efficiently.

- Faster Predictions: ONNX Runtime can make your model predictions quicker by optimizing how the model runs.

- Run on Different Platforms: You can train your model in Python and then use it in apps written in C#, C++, or Java.

- Switch Between Frameworks: With ONNX Runtime, you can train your model in one framework and use it in another without much extra work.

- Execution Providers: ONNX Runtime can work with different types of hardware through its flexible Execution Providers (EP) framework. Execution Providers are specialized components within ONNX Runtime that allow the model to take full advantage of the specific hardware it's running on.

How ONNX Runtime Works

The process of using ONNX Runtime is straightforward and consists of three main steps −

- Get a Model: The first step is to get a machine learning model that has been trained using any framework that supports export or conversion to the ONNX format. Popular frameworks like PyTorch, TensorFlow, and scikit-learn offer tools for exporting models to ONNX.

- Load and Run the Model: Once you have the ONNX model, you can load it into ONNX Runtime and execute it. This step is straightforward, and there are tutorials available for running models in different programming languages such as Python, C#, and C++.

- Improve Performance (optional): ONNX Runtime allows for performance tuning using various runtime configurations and hardware accelerators.

Integration with Different Platforms

One of the biggest strengths of ONNX Runtime is its ability to integrate with a wide variety of platforms and environments. This flexibility makes it a valuable tool for developers who need to deploy models across different systems.

Running ONNX Runtime on Different Hardware

ONNX Runtime supports a broad range of hardware, from powerful servers with GPUs to smaller edge devices like the NVIDIA Jetson Nano. This allows developers to deploy their models where they are needed with fewer compatibility concerns.

Programming Language Support

ONNX Runtime provides APIs for several popular programming languages, making it easy to integrate into various applications −

- Python

- C++

- C#

- Java

- JavaScript

Cross-Platform Compatibility

ONNX Runtime is truly cross-platform, working seamlessly on Windows, Mac, and Linux operating systems. It also supports ARM devices, which are commonly used in mobile and embedded systems.

Example: Simple ONNX Runtime API Example

Here's a basic example of how to use ONNX Runtime in Python.

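import numpy as np

import onnxruntime

# Load the ONNX model ("mymodel.onnx" is a placeholder for your own file)

session = onnxruntime.InferenceSession("mymodel.onnx", providers=["CPUExecutionProvider"])

# Build input data; its name, shape, and dtype must match the model's input (the shape here is only an example)

input_name = session.get_inputs()[0].name

input_data = np.random.rand(1, 3, 224, 224).astype(np.float32)

# Run the model; None requests all outputs

results = session.run(None, {input_name: input_data})

In this example, InferenceSession loads the ONNX model, get_inputs() reports the name of the model's input, and run() performs inference on the provided input data. The first argument of run() selects which outputs to return (None returns all of them), and results is a list with one NumPy array per output, which can then be used for further processing or analysis.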

ONNX - Ecosystem

The ONNX (Open Neural Network Exchange) ecosystem is a collection of tools, platforms, and services designed to facilitate the development, deployment, and optimization of machine learning models using ONNX as a standard format. ONNX provides an open format for representing machine learning models, enabling interoperability between different frameworks and tools.

In general terms, an ecosystem refers to a complex network or interconnected system of components that interact with each other within a particular environment. The ONNX ecosystem is designed to enhance interoperability, optimize performance, and simplify the deployment of machine learning models across various environments and applications.

Key Components of the ONNX Ecosystem

Following are the key components of the ONNX Ecosystem −

ONNX Runtime

ONNX Runtime is the high-performance inference engine at the center of the ecosystem. It executes ONNX models whether they were produced by PyTorch, TensorFlow, scikit-learn, or another supported framework, which makes it easy to move models between different environments.

Model Conversion and Export Tools

The ONNX ecosystem provides two kinds of tools for moving models into and out of the format −

- ONNX Exporters: Tools that convert models from popular frameworks (like PyTorch, TensorFlow, and scikit-learn) into the ONNX format, allowing for model interoperability and deployment.

- ONNX Importers: Tools that enable the import of ONNX models into different frameworks or environments for further processing or deployment.

Integration Platforms

ONNX can be integrated with various platforms; some of them are listed below −

- Azure Machine Learning: Provides services for training, deploying, and managing ONNX models in the cloud, integrating with various Azure services for enhanced scalability and performance.

- Azure Custom Vision: Allows users to export custom vision models to ONNX format, making them ready for deployment across different platforms.

- Azure SQL Edge: Supports machine learning predictions with ONNX models on edge devices, so models can be scored directly inside Azure SQL Edge.

- Azure Synapse Analytics: Lets you use ONNX models to make predictions within Synapse SQL.

Inference Servers

NVIDIA Triton Inference Server: A server that supports ONNX Runtime as a back end, enabling efficient and scalable model inference on NVIDIA GPUs. Triton provides high-performance inferencing and supports multiple model formats, including ONNX.

Automated Machine Learning

ML.NET: This is an open-source, cross-platform framework for building machine learning models in the .NET ecosystem. ML.NET supports ONNX models for inference, allowing .NET developers to integrate advanced ML capabilities into their applications.

For example, a model produced by Automated ML (AutoML) can be exported in ONNX format and then used to make predictions in a C# console application with ML.NET.

ONNX - Data Types

In ONNX (Open Neural Network Exchange), the data types used in models are a crucial aspect of model representation and computation. As a standard format for machine learning models, ONNX supports a range of data types that allow for interoperability between different machine learning frameworks.

In this tutorial, we will explore the various ONNX data types, including tensor types, element types, sparse tensors, and non-tensor types like sequences and maps.

Understanding Tensors in ONNX

ONNX primarily focuses on numerical computation involving tensors, which are multidimensional arrays. Tensors are used to represent inputs, outputs, and intermediate values in ONNX models.

Each tensor is defined by three key components −

- Element type: Specifies the data type of all elements in the tensor.

- Shape: An array describing the dimensions of the tensor. Shapes can be fixed or dynamic, and a tensor can have an empty shape (e.g., a scalar).

- Contiguous array: A fully populated array of data values.

This design optimizes ONNX for numerical computations in deep learning applications, where large, multidimensional arrays are common.

Supported Element Types

Initially, ONNX was designed to support deep learning models, which mostly use floating-point numbers (32-bit floats). However, the current version of ONNX supports a wide range of element types, allowing for flexibility across different machine learning and data processing tasks.

Below is a list of supported data types in ONNX −

| Element Type | Description |
|---|---|
| FLOAT | 32-bit floating point |
| UINT8 | 8-bit unsigned integer |
| INT8 | 8-bit signed integer |
| UINT16 | 16-bit unsigned integer |
| INT16 | 16-bit signed integer |
| INT32 | 32-bit signed integer |
| INT64 | 64-bit signed integer |
| STRING | Variable-length string (UTF-8) |
| BOOL | Boolean |
| FLOAT16 | 16-bit floating point (IEEE half precision) |
| DOUBLE | 64-bit floating point |
| UINT32 | 32-bit unsigned integer |
| UINT64 | 64-bit unsigned integer |
| COMPLEX64 | 64-bit complex number (two 32-bit floats) |
| COMPLEX128 | 128-bit complex number (two 64-bit floats) |
| BFLOAT16 | 16-bit brain floating point (8 exponent bits, 7 mantissa bits) |
| FLOAT8E4M3FN | 8-bit floating point: 4 exponent bits, 3 mantissa bits, finite values only |
| FLOAT8E4M3FNUZ | 8-bit floating point: 4 exponent bits, 3 mantissa bits, finite values only, no negative zero |
| FLOAT8E5M2 | 8-bit floating point: 5 exponent bits, 2 mantissa bits |
| FLOAT8E5M2FNUZ | 8-bit floating point: 5 exponent bits, 2 mantissa bits, finite values only, no negative zero |
| UINT4 | 4-bit unsigned integer |
| INT4 | 4-bit signed integer |
| FLOAT4E2M1 | 4-bit floating point: 2 exponent bits, 1 mantissa bit |

Sparse Tensors

Sparse tensors are useful when working with data that contains a large number of zeros. ONNX supports sparse tensors, particularly 2D sparse tensors. These are represented by the class SparseTensorProto, which includes the following attributes −

- dims: Specifies the shape of the sparse tensor.

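- indices: The positions of the non-zero values, stored as int64, either as linearized (row-major) positions with shape [NNZ] or as coordinates with shape [NNZ, rank], where NNZ is the number of non-zero values.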

- values: The actual non-zero values.

Non-Tensor Data Types

In addition to tensors, ONNX also supports non-tensor data types such as −

- Sequence: A sequence of tensors. This is useful for operations that need to handle a list or collection of tensors.

- Map: An associative array from keys (an integer type or STRING) to values, similar to a dictionary, often used for associative arrays such as per-class scores.

These non-tensor types are more commonly used in classical machine learning tasks, where structures like sequences and maps are necessary to represent certain operations.

ONNX - File Format

The ONNX file format is a container that encapsulates the entire structure of a machine learning model. When you export a model to ONNX format, the resulting file (usually with a .onnx extension) contains a graph-based representation of the model. If you have worked with deep learning architectures like MobileNet or AlexNet (for example, models trained on the ImageNet dataset), you will find ONNX representations quite familiar.

The ONNX file format is a flexible way to represent machine learning models, making them portable across various frameworks. At its core, an ONNX model is composed of a graph, a collection of computation nodes. Each node in the graph represents a specific operation (e.g., convolution, ReLU activation), and the data moves through these nodes in a sequence, just like the flow of data through layers in a neural network.

In this tutorial, we will learn about the ONNX file format in detail, discussing how it structures a model, including its components like the computational graph, nodes, operators, and metadata.

Key Components of the ONNX File Format

An ONNX file contains various components that help describe the model, its structure, and how it works. Let's discuss these components.

Model

The ONNX file represents a model and includes key elements such as −

- Version Info: The IR (file format) version and the operator set (opset) versions the model depends on.

- Metadata: Additional information about the model, such as author or framework used.

- Acyclic Computation Data-flow Graph: The core structure that describes how the computations are performed within the model.

Graph

The heart of the ONNX file is the graph that represents the flow of computations. Think of it like a map of the operations your model goes through to process inputs and produce outputs.

- Inputs and Outputs: Describes the data that enters and leaves the model. It includes information like the data type and shape (dimensions).

- List of Computation Nodes: Each node in the graph represents a computation step (e.g., applying an activation function).

- Graph Name: The name of the graph that describes the overall structure of the model.

Computation Nodes

Each computation node performs a specific task, such as applying a function to the input data.

- Inputs: Every node may have zero or more inputs of predefined types, which are the outputs of other nodes or external inputs.

- Outputs: Each node produces one or more outputs, which can feed any number of downstream nodes or become outputs of the graph.

- Operators: These represent the operations being applied (e.g., matrix multiplication, ReLU activation).

- Operator Parameters (attributes): Each operator may have fixed, named parameters, such as the alpha slope of LeakyRelu, the epsilon of BatchNormalization, or the kernel shape of a convolution.

Understanding the Graph Structure

The structure of the ONNX graph is a series of interconnected computation nodes, with data flowing between them. Think of it like the layers of a neural network, where each layer processes the input data and passes it on to the next layer.

- Graph Inputs: These are the starting points for the data, where the input is defined (e.g., the image tensor in a CNN).

- Graph Outputs: After the data has passed through all the computation nodes, the final output tensor is produced (e.g., the classification label).

- Computation Nodes: These represent individual operations in the model, such as convolutions, activations (ReLU), pooling, and fully connected layers.

ONNX - Operators

Operators in ONNX are the building blocks that define computations in a machine learning model, mapping operations from various frameworks (like TensorFlow, PyTorch, etc.) into a standardized ONNX format.

In this tutorial, we'll explore what ONNX operators are, the different types, and how they function in ONNX-compatible models.

What are ONNX Operators?

An ONNX operator is a fundamental unit of computation used in an ONNX model. Each operator defines a specific type of operation, such as mathematical computations, data processing, or neural network layers. Operators are identified by a tuple −

<name, domain, version>

Where,

- name: The name of the operator.

- domain: The namespace to which the operator belongs.

- version: The version of the operator (to track updates and changes).

Core Operators in ONNX

Core operators are the standard set of operators that come with ONNX and ONNX-ML. Frameworks and runtimes that support ONNX are expected to implement them, which is what makes ONNX models portable. These operators are designed to cover most common machine learning tasks and generally cannot be meaningfully decomposed into simpler operations.

Key Features of Core Operators −

- These are standard operators defined within the ONNX framework.

- The ai.onnx domain contains well over a hundred operators (the exact number grows as new opset versions are released), while the ai.onnx.ml domain (focused on classical machine learning tasks) contains 19 operators.

- Core operators support various problem areas such as image classification, recommendation systems, and natural language processing.

The ai.onnx Domain Operators

Following is an alphabetical excerpt of the ai.onnx operators, from Abs to QLinearConv (the full list continues with operators such as QLinearMatMul, QuantizeLinear, Relu, Reshape, Softmax, and Transpose) −

| S.No | Operator |
|---|---|
| 1 | Abs |
| 2 | Acos |
| 3 | Acosh |
| 4 | Add |
| 5 | AffineGrid |
| 6 | And |
| 7 | ArgMax |
| 8 | ArgMin |
| 9 | Asin |
| 10 | Asinh |
| 11 | Atan |
| 12 | Atanh |
| 13 | AveragePool |
| 14 | BatchNormalization |
| 15 | Bernoulli |
| 16 | BitShift |
| 17 | BitwiseAnd |
| 18 | BitwiseNot |
| 19 | BitwiseOr |
| 20 | BitwiseXor |
| 21 | BlackmanWindow |
| 22 | Cast |
| 23 | CastLike |
| 24 | Ceil |
| 25 | Celu |
| 26 | CenterCropPad |
| 27 | Clip |
| 28 | Col2Im |
| 29 | Compress |
| 30 | Concat |
| 31 | ConcatFromSequence |
| 32 | Constant |
| 33 | ConstantOfShape |
| 34 | Conv |
| 35 | ConvInteger |
| 36 | ConvTranspose |
| 37 | Cos |
| 38 | Cosh |
| 39 | CumSum |
| 40 | DFT |
| 41 | DeformConv |
| 42 | DepthToSpace |
| 43 | DequantizeLinear |
| 44 | Det |
| 45 | Div |
| 46 | Dropout |
| 47 | DynamicQuantizeLinear |
| 48 | Einsum |
| 49 | Elu |
| 50 | Equal |
| 51 | Erf |
| 52 | Exp |
| 53 | Expand |
| 54 | EyeLike |
| 55 | Flatten |
| 56 | Floor |
| 57 | GRU |
| 58 | Gather |
| 59 | GatherElements |
| 60 | GatherND |
| 61 | Gelu |
| 62 | Gemm |
| 63 | GlobalAveragePool |
| 64 | GlobalLpPool |
| 65 | GlobalMaxPool |
| 66 | Greater |
| 67 | GreaterOrEqual |
| 68 | GridSample |
| 69 | GroupNormalization |
| 70 | HammingWindow |
| 71 | HannWindow |
| 72 | HardSigmoid |
| 73 | HardSwish |
| 74 | Hardmax |
| 75 | Identity |
| 76 | If |
| 77 | ImageDecoder |
| 78 | InstanceNormalization |
| 79 | IsInf |
| 80 | IsNaN |
| 81 | LRN |
| 82 | LSTM |
| 83 | LayerNormalization |
| 84 | LeakyRelu |
| 85 | Less |
| 86 | LessOrEqual |
| 87 | Log |
| 88 | LogSoftmax |
| 89 | Loop |
| 90 | LpNormalization |
| 91 | LpPool |
| 92 | MatMul |
| 93 | MatMulInteger |
| 94 | Max |
| 95 | MaxPool |
| 96 | MaxRoiPool |
| 97 | MaxUnpool |
| 98 | Mean |
| 99 | MeanVarianceNormalization |
| 100 | MelWeightMatrix |
| 101 | Min |
| 102 | Mish |
| 103 | Mod |
| 104 | Mul |
| 105 | Multinomial |
| 106 | Neg |
| 107 | NonMaxSuppression |
| 108 | NonZero |
| 109 | Not |
| 110 | OneHot |
| 111 | Optional |
| 112 | Or |
| 113 | PRelu |
| 114 | Pad |
| 115 | Pow |
| 116 | QLinearConv |

The ai.onnx.ml Domain Operators

Below is the list of all operators in the ai.onnx.ml domain.

| S.No | Operator |
|---|---|
| 1 | ArrayFeatureExtractor |
| 2 | Binarizer |
| 3 | CastMap |
| 4 | CategoryMapper |
| 5 | DictVectorizer |
| 6 | FeatureVectorizer |
| 7 | Imputer |
| 8 | LabelEncoder |
| 9 | LinearClassifier |
| 10 | LinearRegressor |
| 11 | Normalizer |
| 12 | OneHotEncoder |
| 13 | SVMClassifier |
| 14 | SVMRegressor |
| 15 | Scaler |
| 16 | TreeEnsemble |
| 17 | TreeEnsembleClassifier |
| 18 | TreeEnsembleRegressor |
| 19 | ZipMap |

Custom Operators in ONNX

In addition to core operators, ONNX allows developers to define custom operators for more specialized or non-standard tasks.

- If a particular operation does not exist in the ONNX operator set, or if a developer creates a new technique or custom activation function, they can define a custom operator.

- Custom operators are identified by a custom domain name, distinguishing them from core operators.

ONNX - Design Principles

ONNX (Open Neural Network Exchange) is an open, flexible format that enables interoperability between various machine learning and deep learning frameworks.

It facilitates the seamless transfer of models across different platforms, ensuring that models trained in one environment can be used for inference in another. In this tutorial, we will learn about the key design principles of ONNX.

Support for Both DL and Traditional ML

ONNX is designed to support both deep learning models and traditional machine learning algorithms. Initially, ONNX focused on deep learning, but as its ecosystem expanded with contributions from diverse companies and organizations, ONNX began to include support for traditional machine learning (ML) models as well.

Whether you are working with neural networks in deep learning or traditional machine learning algorithms like decision trees, linear regression, or support vector machines, you can convert these models to ONNX format. This ensures that models from different domains can be used interchangeably and deployed across different platforms and environments.

Adaptability to Rapid Technological Advances

The machine learning and deep learning fields are continuously growing, with regular updates to frameworks such as TensorFlow, PyTorch, and Scikit-Learn. ONNX is designed to be flexible, tracking updates and changes in these frameworks and evolving accordingly.

As new features and improvements are introduced in machine learning frameworks, ONNX is also updated to incorporate these advancements. This keeps ONNX relevant and compatible with the latest tools and libraries, allowing users to take advantage of cutting-edge technology without being confined to a particular framework.

Compact and Cross-Platform Model

ONNX provides a compact and cross-platform representation for model serialization. This means that ONNX models can be easily saved, transferred, and loaded across different systems and platforms. The compact structure of ONNX files helps in reducing storage requirements and facilitating efficient model sharing.

For example, once a model is in ONNX format, the same file can be used across different environments, regardless of the underlying hardware or operating system. This cross-platform capability enhances the portability and usability of models, making it easier to deploy and integrate them into diverse applications.

Standardized List of Well-Defined Operators

ONNX uses a standardized list of well-defined operators informed by real-world usage. That is, ONNX defines a comprehensive set of operators commonly used in machine learning and deep learning tasks, standardized against practical use cases so that they cover the wide range of operations required for model execution.

ONNX - Model Zoo

The ONNX Model Zoo is a collection of pre-trained models in the ONNX (Open Neural Network Exchange) format that lets you use machine learning models without needing to train them from scratch.

Whether you're working with image classification, object detection, natural language processing, or other machine learning tasks, the ONNX Model Zoo provides a variety of models that are ready for inference with ONNX Runtime.

In this tutorial, we will learn about the ONNX Model Zoo and its offerings across various domains such as computer vision, natural language processing (NLP), generative AI, and graph machine learning.

What is ONNX Model Zoo?

The ONNX Model Zoo is a repository of pre-trained models that are available for download and inference. These models are trained on large datasets and are provided in ONNX format, allowing you to use them across different frameworks and platforms without worrying about model conversion or compatibility.

The ONNX Model Zoo provides state-of-the-art models sourced from prominent open-source repositories like timm, torchvision, torch_hub, and transformers, offering developers and researchers access to pre-trained models that can be directly utilized in AI applications.

Accessing the ONNX Model Zoo

To access the ONNX Model Zoo −

- Visit the ONNX Model Zoo GitHub repository.

- Browse through available models such as MobileNet, ResNet, SqueezeNet, AlexNet, and many others.

- Download pre-trained ONNX models directly from the repository.

These models are ready to be used with ONNX Runtime, allowing you to quickly deploy solutions without needing to train models from scratch.

Key Features of ONNX Model Zoo

- Pre-trained Models: Access a wide range of models that are pre-trained on large datasets, saving time and computational resources.

- Interoperability: Leverage ONNX models across different frameworks like PyTorch, TensorFlow, and more, enhancing cross-platform deployment.

- Ready for Inference: The models are optimized for inference using ONNX Runtime, providing fast and efficient performance across devices and platforms.

- Git LFS: Because ONNX model files can be large, the repository stores them with Git LFS (Large File Storage); use the Git LFS command line client to download multiple ONNX models.

Categories of ONNX Model Zoo

The ONNX Model Zoo offers models for a wide range of machine learning tasks. Here are the most common categories −

- Computer Vision

- Natural Language Processing (NLP)

- Generative AI

- Graph Machine Learning

Computer Vision

The ONNX Model Zoo offers an extensive set of models tailored for computer vision tasks, including −

Image Classification Models

These models classify images into predefined categories. The ONNX Model Zoo provides popular pre-trained models such as −

- MobileNet: A lightweight deep neural network for mobile and embedded vision.

- ResNet: A CNN (up to 152 layers) using shortcut connections for image classification.

- SqueezeNet: A compact CNN model with 50x fewer parameters than AlexNet.

- VGG: A deep CNN with smaller filters, providing high accuracy.

- AlexNet: A classic deep CNN for classifying objects in images.

Object Detection & Image Segmentation

Detect and segment objects in images using models like −

- Tiny YOLOv2 and YOLOv3: Real-time object detection models capable of identifying multiple objects in an image.

- SSD (Single Shot Detector): A fast model for detecting objects in real time.

- Mask-RCNN: A network for instance segmentation, detecting objects and predicting their mask.

Body, Face, and Gesture Analysis

Models in this category are designed to detect and analyze human faces, emotions, and gestures −

- ArcFace: A face recognition model producing embeddings for facial images.

- UltraFace: A lightweight face detection model optimized for edge devices.

- Emotion FerPlus: Detects emotions from facial images.

- Age and Gender Classification: Predicts age and gender from images.
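A face recognition model such as ArcFace outputs an embedding (a fixed-length vector) rather than a name. Two faces are then compared by measuring how close their embeddings are, commonly with cosine similarity. This is a minimal sketch under that assumption; the `threshold` value in `same_person` is hypothetical and would need tuning on your own validation data.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def same_person(emb_a, emb_b, threshold=0.5):
    # Hypothetical decision threshold; choose it from your own labeled face pairs.
    return cosine_similarity(emb_a, emb_b) >= threshold


print(cosine_similarity([1.0, 0.0], [1.0, 0.0]))  # 1.0
print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))  # 0.0
```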

Image Manipulation

These models are designed to modify images through various transformations −

- CycleGAN: Translates images between domains without paired examples (e.g., turning a photo into a painting).

- Super Resolution: Upscales images to higher resolutions using sub-pixel convolution layers.

- Fast Neural Style Transfer: Applies artistic styles to images using a loss network.
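The sub-pixel convolution mentioned for Super Resolution ends with a "pixel shuffle" step: the network predicts r x r times as many channels as it needs, and those channels are rearranged into an image r times larger in each direction. A minimal NumPy sketch of that rearrangement (no batch dimension, layout as in PyTorch's `PixelShuffle`, which corresponds to ONNX's `DepthToSpace` operator in CRD mode) might look like this:

```python
import numpy as np


def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange a (C*r*r, H, W) array into (C, H*r, W*r), as in sub-pixel convolution."""
    c_r2, h, w = x.shape
    if c_r2 % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c = c_r2 // (r * r)
    x = x.reshape(c, r, r, h, w)        # split channels into (C, r, r)
    x = x.transpose(0, 3, 1, 4, 2)      # reorder axes to (C, H, r, W, r)
    return x.reshape(c, h * r, w * r)   # merge into the upscaled grid


# Four 1x1 channels become one 2x2 image.
print(pixel_shuffle(np.arange(4).reshape(4, 1, 1), 2).tolist())  # [[[0, 1], [2, 3]]]
```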

Natural Language Processing (NLP)

For NLP tasks, the ONNX Model Zoo offers models for −

- Machine Translation: Translating text from one language to another.

- Machine Comprehension: Understanding and responding to natural language queries.

- Language Modeling: Predicting the likelihood of a sequence of words.
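"Predicting the likelihood of a sequence of words" can be made concrete. At each position, a language model assigns a probability to the token that actually comes next, and the sequence likelihood is the product of those probabilities. The sketch below assumes you already have those per-token probabilities (for example, read from a model's output) and computes the log-likelihood and the related perplexity metric.

```python
import math


def sequence_log_prob(token_probs):
    """Log-likelihood of a sequence: sum of log P(token_i | previous tokens)."""
    return sum(math.log(p) for p in token_probs)


def perplexity(token_probs):
    """exp of the average negative log-likelihood; lower means the model was less 'surprised'."""
    return math.exp(-sequence_log_prob(token_probs) / len(token_probs))


# A model that gives every actual next token probability 0.25 has perplexity of about 4,
# as if it were choosing uniformly among four options at each step.
print(perplexity([0.25, 0.25, 0.25, 0.25]))
```

Working in log space avoids the numerical underflow that multiplying many small probabilities would cause on long sequences.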

Generative AI

Generative models available in the ONNX Model Zoo include −

- Machine Translation: Translating text from one language to another.

- Machine Comprehension: Understanding and responding to natural language queries.

- Language Modeling: Predicting the likelihood of a sequence of words.

- Visual Question Answering: Combining image recognition and natural language understanding.

- Dialog Systems: Generating conversational responses based on input data.
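Generative models such as dialog systems typically produce text one token at a time. The model scores possible next tokens, one is chosen, and it is appended to the input for the next step. A common setup is for the ONNX model to compute a single step while application code runs the loop, though some exports embed the loop. This is a minimal greedy-decoding sketch in which `next_token_scores` is a hypothetical stand-in for a real model call, illustrated with a toy bigram table.

```python
def greedy_decode(next_token_scores, prompt, eos, max_new_tokens=20):
    """Repeatedly append the highest-scoring next token until EOS or the length limit."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)   # hypothetical model call: dict token -> score
        best = max(scores, key=scores.get)   # greedy choice: take the top-scoring token
        tokens.append(best)
        if best == eos:
            break
    return tokens


# Toy "model": scores depend only on the last token (illustrative data only).
BIGRAMS = {
    "hello": {"how": 0.6, "there": 0.4},
    "how": {"are": 0.9, "<eos>": 0.1},
    "are": {"you": 0.8, "<eos>": 0.2},
    "you": {"<eos>": 1.0},
}


def toy_model(tokens):
    return BIGRAMS.get(tokens[-1], {"<eos>": 1.0})


print(greedy_decode(toy_model, ["hello"], eos="<eos>"))
# ['hello', 'how', 'are', 'you', '<eos>']
```

Greedy decoding is the simplest strategy; real systems often sample from the distribution or use beam search instead.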

Graph Machine Learning

Graph-based models are used in machine learning tasks where data is represented as graphs. These models are commonly used in applications like social network analysis, molecular biology, and more.
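Graph models usually receive the graph as an adjacency matrix plus a matrix of node features, and they update each node using information from its neighbours. The sketch below shows one simplified aggregation round in NumPy: each node's feature becomes the mean over itself and its neighbours. Real graph neural network layers add learned weights and nonlinearities, which are omitted here.

```python
import numpy as np


def mean_aggregate(adjacency: np.ndarray, features: np.ndarray) -> np.ndarray:
    """One round of neighbour averaging over a graph (self-loops included)."""
    a_hat = adjacency + np.eye(adjacency.shape[0])   # let each node see its own feature
    degree = a_hat.sum(axis=1, keepdims=True)        # number of contributors per node
    return (a_hat @ features) / degree               # average the contributors' features


# Path graph 0 - 1 - 2 with one scalar feature per node.
A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)
feats = np.array([[1.0], [2.0], [3.0]])
print(mean_aggregate(A, feats).ravel().tolist())  # [1.5, 2.0, 2.5]
```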

ONNX - Converting Libraries

ONNX (Open Neural Network Exchange) is an open-source format used for representing machine learning models, enabling the exchange of models between various frameworks. By converting models to ONNX, you can use a single runtime to deploy them, enhancing flexibility and portability across platforms.

In this tutorial, we will learn about ONNX converting libraries and explore the tools available for different machine learning frameworks.

Introduction to Converting Libraries

A converting library is a tool that helps translate a model's logic from its original framework (like TensorFlow or scikit-learn) into the ONNX format. These libraries make sure that the converted model's predictions are either exactly the same or very close to the original model's predictions.

Without these converters, you would have to manually rewrite parts of the model, which can take a lot of time and effort.

Why Are Converting Libraries Important?

- Simplifies Model Conversion: Converting libraries automate the complex task of translating a machine learning model's prediction logic into ONNX format.

- Accuracy: These libraries are designed to maintain the accuracy of the model's predictions after conversion.

- Time-Saving: Manually implementing model parts in ONNX can be time-consuming. Converting libraries speed up this process by handling most of the conversion automatically.

- Model Deployment Flexibility: Once converted to ONNX format, models can be run on a wide range of platforms and devices, making it easier to deploy them in production environments.

Available Converting Libraries

Different machine learning frameworks require different converting tools. Here are some commonly used libraries −

- sklearn-onnx: Converts models from scikit-learn to ONNX format. If you have a scikit-learn model, this tool helps ensure it works correctly in ONNX format.

- tensorflow-onnx: Converts models from TensorFlow to ONNX format, simplifying the conversion of deep learning models built with TensorFlow.

- onnxmltools: Converts models from various libraries, including LightGBM, XGBoost, PySpark, and LibSVM.

- torch.onnx: Converts models from PyTorch to ONNX format, so PyTorch users can deploy their models across platforms with ONNX Runtime.

Common Challenges in Conversion

These libraries need frequent updates to stay compatible with new versions of ONNX and of the frameworks they support, typically three to five times a year. Other common challenges include −

- Framework-Specific Tools: Each converter is designed to work with a specific framework. For example, tensorflow-onnx works only with TensorFlow, and sklearn-onnx works only with scikit-learn.

- Custom Components: If your model has custom layers, you may need to write custom code to handle those during conversion. This can make the process more difficult.

- Non-Deep Learning Models: Converting models from libraries like scikit-learn can be tricky because their prediction logic often relies on NumPy or SciPy code rather than on standard neural-network layers. You might need to manually add conversion logic for certain parts of the model.

Alternatives to Converting Libraries

An alternative to writing framework-specific converters is to use standard protocols that promote code reuse across multiple libraries. One such protocol is the Array API standard, which standardizes array operations across several libraries like NumPy, JAX, PyTorch, and CuPy.

ndonnx

ndonnx is an Array API-compliant library that supports execution with an ONNX backend and provides instant ONNX export for code that complies with the Array API. It is ideal for users looking to integrate ONNX export functionality with minimal custom code.

It reduces the need for framework-specific converters and provides a simple, NumPy-like way to build ONNX models.
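Code written against the Array API does not import a specific array library. Instead, it asks its input for its namespace through `__array_namespace__()`, which is what lets a library like ndonnx trace the same function into an ONNX model. The sketch below runs such a function with NumPy (whose arrays expose `__array_namespace__` from NumPy 2.0; the fallback exists only for older versions). `mean_drop_outliers` is an illustrative function, and how to create ndonnx placeholder inputs and export the model is left to the ndonnx documentation rather than assumed here.

```python
import numpy as np


def get_namespace(a):
    # Array API-compliant arrays expose __array_namespace__(); NumPy arrays do so
    # from NumPy 2.0. The NumPy fallback is only for older NumPy installs.
    if hasattr(a, "__array_namespace__"):
        return a.__array_namespace__()
    return np


def mean_drop_outliers(a, low=-5.0, high=5.0):
    """Mean of the values strictly between low and high, using only Array API operations."""
    xp = get_namespace(a)
    kept = a[(a > low) & (a < high)]   # boolean-mask indexing is part of the standard
    return xp.mean(kept)


print(float(mean_drop_outliers(np.array([1.0, 2.0, 3.0, 100.0]))))  # 2.0
```

Because the function never names NumPy directly, passing in an array from another Array API-compliant library is intended to work unchanged, which is the property ndonnx relies on for ONNX export.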