AWS ElastiCache - Lazy Loading

There are different ways to populate the cache and keep it up to date. These approaches are known as caching strategies. A gaming site maintaining leaderboard data needs a different strategy than a news website displaying trending stories. In this chapter we will study one such strategy, known as Lazy Loading.

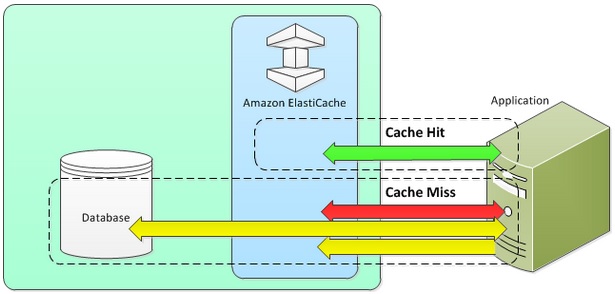

When the application requests data, it first looks for that data in the ElastiCache cache. There are two possibilities: either the data exists in the cache or it does not. Accordingly, we classify the situation into the following two categories.

Cache Hit

The application requests the data from the cache.

The cache query finds that the requested data is available in the cache.

The result is returned to the requesting application.

Cache Miss

The application requests the data from the cache.

The cache query finds that the requested data is not available in the cache.

The cache query returns null to the requesting application.

The application now requests the data directly from the database and receives it.

It then updates the cache with the data it received from the database.

The next time the same data is requested, it falls into the cache hit scenario above.
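The hit and miss flows above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not ElastiCache client code: `InMemoryCache` and `FakeDatabase` are hypothetical stand-ins for a cache node and the primary database, and `get_data` is a hypothetical helper name. The stand-in cache returns `None` on a miss, mirroring the null described above (a Redis client such as redis-py likewise returns `None` from `get()` for a missing key).

```python
from typing import Dict, Optional


class InMemoryCache:
    """Hypothetical stand-in for an ElastiCache node: get() returns None on a miss."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._store.get(key)

    def set(self, key: str, value: str) -> None:
        self._store[key] = value


class FakeDatabase:
    """Hypothetical stand-in for the primary database; counts how often it is queried."""

    def __init__(self, rows: Dict[str, str]) -> None:
        self.rows = dict(rows)
        self.queries = 0

    def query(self, key: str) -> Optional[str]:
        self.queries += 1
        return self.rows.get(key)


def get_data(key: str, cache: InMemoryCache, db: FakeDatabase) -> Optional[str]:
    """Lazy loading read path: try the cache first, fall back to the database."""
    value = cache.get(key)
    if value is not None:
        return value  # cache hit: the database is not touched
    # Cache miss: read from the database, then populate the cache for next time.
    value = db.query(key)
    if value is not None:
        cache.set(key, value)
    return value


cache = InMemoryCache()
db = FakeDatabase({"user:1": "Alice"})
print(get_data("user:1", cache, db), db.queries)  # Alice 1  (miss, one database query)
print(get_data("user:1", cache, db), db.queries)  # Alice 1  (hit, no new database query)
```

The second call is served from the cache, so the database query count stays at one.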

The diagram at the beginning of this chapter depicts both scenarios.

Advantages of Lazy Loading

Only requested data is cached − Since most data is never requested, lazy loading avoids filling up the cache with data that isn't requested.

Node failures are not fatal − When a node fails and is replaced by a new, empty node, the application continues to function, though with increased latency. As requests are made to the new node, each cache miss results in a database query and a write of the retrieved data to the cache, so subsequent requests for that data are served from the cache.
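Both advantages can be illustrated with a small simulation. This is a sketch under assumptions: `LazyLoadingApp` and `replace_node` are hypothetical names, a plain dict models the cache node, and replacing the node is modeled simply as swapping in a new, empty dict.

```python
from typing import Dict, Optional


class LazyLoadingApp:
    """Hypothetical application using lazy loading against a single cache node."""

    def __init__(self, database: Dict[str, str]) -> None:
        self.database = database          # dict standing in for the primary database
        self.node: Dict[str, str] = {}    # dict standing in for the cache node
        self.db_queries = 0

    def get(self, key: str) -> Optional[str]:
        if key in self.node:
            return self.node[key]         # cache hit
        self.db_queries += 1              # cache miss: query the database
        value = self.database.get(key)
        if value is not None:
            self.node[key] = value        # populate the cache
        return value

    def replace_node(self) -> None:
        """Simulate a failed node being replaced by a new, empty node."""
        self.node = {}


app = LazyLoadingApp({"a": "1", "b": "2", "c": "3"})
app.get("a")
app.get("a")
print(sorted(app.node), app.db_queries)  # ['a'] 1  (only the requested key is cached)

app.replace_node()
print(app.get("a"), app.db_queries)      # 1 2  (still works, at the cost of one extra query)
print(app.get("a"), app.db_queries)      # 1 2  (now served from the new node)
```

Keys `b` and `c` are never loaded into the cache because nothing requested them, and the empty replacement node refills itself on demand.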

Disadvantages of Lazy Loading

Cache miss penalty − Each cache miss results in three trips: the initial request for the data from the cache, a query of the database for the data, and finally a write of the data to the cache. This can cause a noticeable delay before the data reaches the application.

Stale data − If data is written to the cache only on a cache miss, the data in the cache can become stale, because the cache is not updated when the data changes in the database. This issue is addressed by the Write Through and Adding TTL strategies, which we will see in the next chapters.
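Both disadvantages can be made concrete with a trip counter. This is a minimal sketch, assuming a hypothetical `TripCountingApp` class in which plain dicts stand in for the cache and the database; it counts one trip per cache or database operation, as in the description of the cache miss penalty above.

```python
from typing import Dict, Optional


class TripCountingApp:
    """Hypothetical lazy loading app that counts round trips to cache and database."""

    def __init__(self, database: Dict[str, str]) -> None:
        self.database = database          # dict standing in for the primary database
        self.cache: Dict[str, str] = {}   # dict standing in for the ElastiCache node
        self.trips = 0

    def get(self, key: str) -> Optional[str]:
        self.trips += 1                   # trip 1: read from the cache
        if key in self.cache:
            return self.cache[key]        # hit: one trip in total
        self.trips += 1                   # trip 2: query the database
        value = self.database.get(key)
        if value is not None:
            self.trips += 1               # trip 3: write the value to the cache
            self.cache[key] = value
        return value


db = {"price": "10"}
app = TripCountingApp(db)
app.get("price")
print(app.trips)         # 3  (a cache miss costs three trips)
app.get("price")
print(app.trips)         # 4  (a cache hit costs one trip)

db["price"] = "12"       # the database changes, but nothing updates the cache
print(app.get("price"))  # 10  (stale value served from the cache)
```

Nothing in the lazy loading read path ever revisits a cached key, which is why the old price keeps being served until the entry is removed or overwritten.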